One of the most wonderful parts of my job, when presenting my dear readers with a new topic, is to cut through the layers of obscurity and useless nonsense the madding crowds add to specific issues.

Today, we shall whet our blades in the sticky blood of the AI gods and their magic-weaving marketing prophets, who have tried to blind our eyes with various wizardry and convince us that this AI stuff is far beyond us.

We will grasp “Agentic AI” and pull it down from its towering throne, dragging it through the dust and grime in which we daily find ourselves wading.

I’m sure you’ve heard these words, Agentic AI, thrown around by your CTO, boss, or LinkedIn peddler over the last few months. It’s hot, you know … all the cool kids are doing it.

Let’s do the unthinkable and ask AI what it thinks Agentic AI is.

"""

Agentic AI refers to artificial-intelligence systems built as autonomous “agents” that can decide, plan, and act in pursuit of a goal with only limited human supervision. In practice, that means the software can:

- Form or accept a high-level objective, break it into sub-tasks, and sequence those tasks (iterative planning and reasoning). NVIDIA Blog

- Take real actions—calling APIs, writing code, moving money, booking tickets, composing e-mails—rather than just returning text. UiPath

- Monitor results, learn, and adapt its plan on the fly, often coordinating multiple specialized agents in a larger workflow.

"""

- ChatGPT

The Background of Agentic AI

Truthfully, there is no magic when it comes to “what is Agentic AI.” It’s simply a regurgitation and re-imagination of the classic workflow where someone interacts with a “single” LLM.

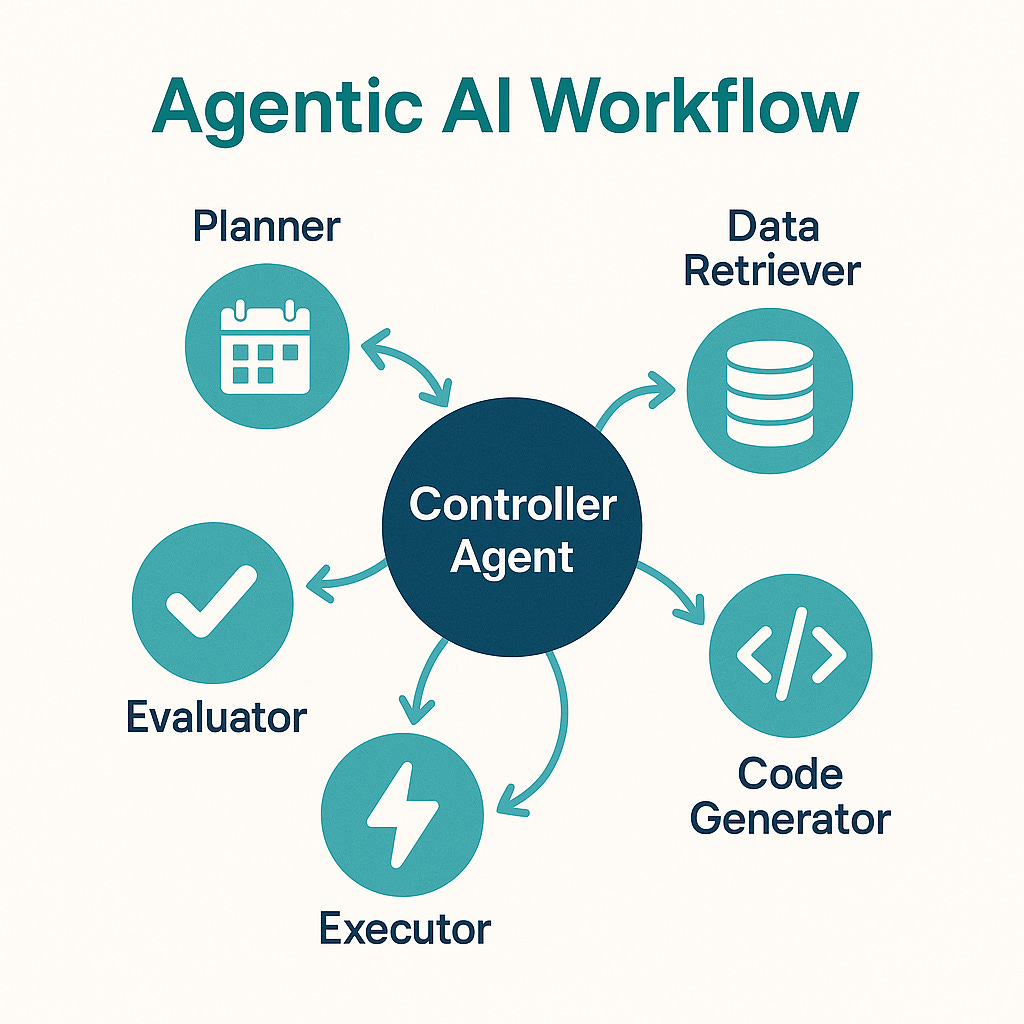

Generally speaking, Agentic AI is the approach of developing multiple “task-specific” Agents that can be combined to provide a better overall experience for specialized tasks.

A visual of this conceptual “Agentic AI” workflow can be seen below.

You can think of it as RAG 2.0 (although RAG might now be just one of many agents added to an Agentic AI workflow). What I mean by RAG 2.0 is that, in the not-so-distant past, the prevailing wisdom was that RAG was required to increase the accuracy and usefulness of AI generally: specific content was embedded and made available to the normal AI workflow.

That didn’t last long, did it?

The race for the “perfect AI sidekick.”

It helps to think about where we have come from so we can better understand why “Agentic AI” is even a thing. When ChatGPT first took the world by storm, quickly followed by a slew of competitors, the race was on to build the perfect model that could do and answer everything.

A single model that could do whatever we wanted at a moment’s notice. As the adoption of AI began to infiltrate every single business and job, two things became apparent about where AI was lacking.

Being good at everything means you’re not great at very specific things.

- Being good at writing a book means you might not be the best at making art for the cover.

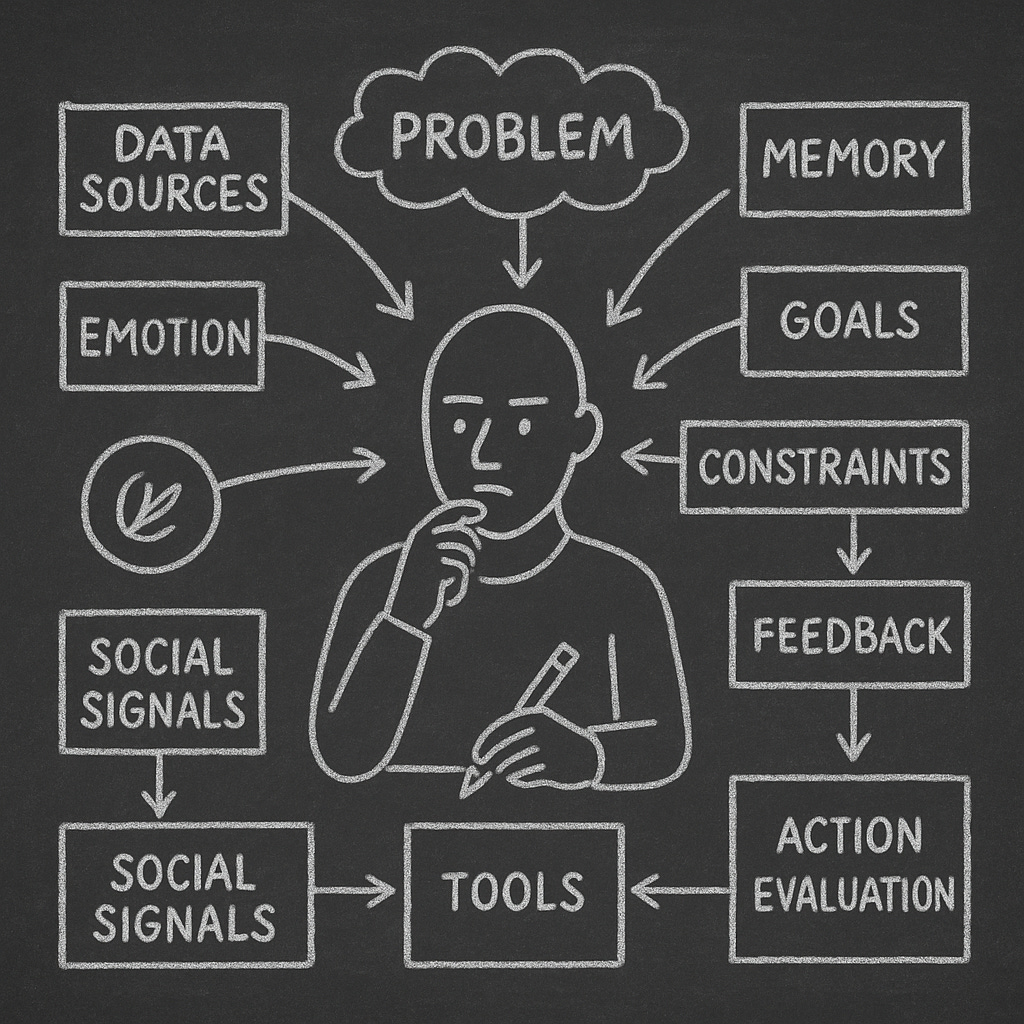

- Complex human-centric decision-making processes use lots of relevant context.

Go look up this information, pull the data, compile it, make a decision, etc.

In essence, Agentic AI is tech’s way of making AI perform better, based on the currently existing architecture. Instead of having a single LLM attempt to solve a specific problem, perhaps we can insert “agents” (other AI bots) into the workflow, whose sole task is a specific one.

This is all fed back into a single context to achieve the best possible result, incorporating all relevant information and data.

It probably functions more like how we humans solve problems, gathering a lot of specific data based on our experience, and piling it all together to make a decision.

So what does that mean in real life?

In real life, this Agentic AI approach is built to be sort of “job” or “problem space” specific. It’s really about solving complex problems in a real-life way.

An agent might …

- pull data from a database

- go read a website

- look through a company knowledge base

- peek inside a vector database

- some other specific task

etc., etc.

All the results can be pulled together and run through a controller LLM/AI that can now give a more accurate “result” based on the work of the other agents.

An Agentic AI workflow is just another Data Pipeline.

I do hate to burst the bubble of the endless stream of SaaS vendors who are running down the long hallways of LinkedIn trying to sell you the next magic AI assistant built using “Agentic AI.”

What they are trying to say is that some under-appreciated engineer spent a weekend “stitching together” a few different “functions” and wrapped it all inside a single AI “call.” (which I will do for you soon … on a weekend)

If you’ve been struggling to follow me so far, thinking of an Agent as a “method” or “function” is fine with me. Of course the implementation details will vary widely, depending on the agent itself, but in the end …

input » output » pass it along.

input » output » pass it along.

input » output » pass it along.

>>> put it all together

Just some question(s) being fed into the LLM, that question or “task” being broken up into parts and fed to agents, who in turn do a thing and return a thing … then their fearless AI context controller will munge it all together and spit it back out.

It won’t be long before there are DAGs for building Agent workflows if I had to guess.
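Treating each agent as a plain function can be sketched in a few lines of Python. This is only an illustration of the idea: the names `sql_agent`, `web_agent`, and `controller` are made up for this example, the agents return canned strings instead of doing real work, and a real controller would hand the merged results to an LLM rather than join them.

```python
from typing import Callable, Dict

# Hypothetical stand-ins: each "agent" is just a function from a task to a result.
Agent = Callable[[str], str]


def sql_agent(task: str) -> str:
    # A real agent would query a database here.
    return f"[sql] rows relevant to: {task}"


def web_agent(task: str) -> str:
    # A real agent would search the web or fetch a page here.
    return f"[web] headlines relevant to: {task}"


def controller(question: str, agents: Dict[str, Agent]) -> str:
    # input -> output -> pass it along, once per agent
    results = [agent(question) for agent in agents.values()]
    # "put it all together": a real controller would pass this to an LLM
    return "\n".join(results)


answer = controller("monthly spending", {"sql": sql_agent, "web": web_agent})
print(answer)
```

Adding another agent here means adding another function and another dictionary entry, which is the whole point of the analogy.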

Because we can, and to prove to you that Agentic AI is not magic, but just the hard Engineering work of defining specific Agents to do a thing, and tying it all together, let’s just create our own Agentic AI workflow.

Building an Agentic AI system.

Ok, so we are going to use LangGraph + LangChain and OpenAI to build a multi-agent workflow, just to prove that the concepts we’ve talked about work in the real world and that there are no dark arts involved.

All this code is available on GitHub.

So we (me) will be building a self‑contained demonstration of a supervisor‑routed multi‑agent workflow built with LangGraph + LangChain. Dang, that sounds fancy doesn’t it? Maybe Meta should offer me a few million dollars to do this.

This GitHub project, freely available to you, spins up (via Docker Compose):

Postgres (seeded sample

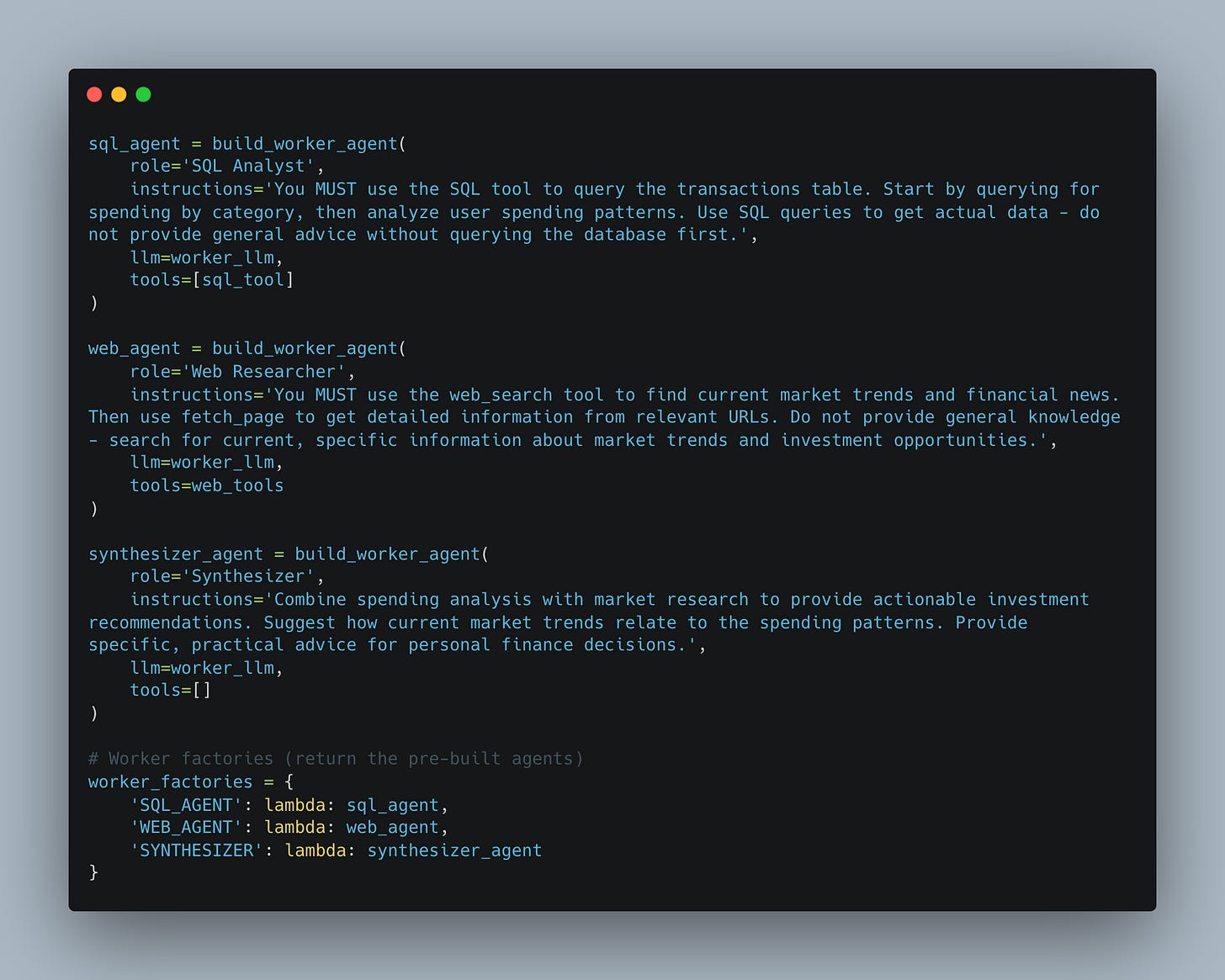

transactionstable)App container running a LangGraph graph with three worker agents:

SQL_AGENT– queries Postgres through an LLM‑generated SQL toolWEB_AGENT– performs DuckDuckGo search + optional page fetchSYNTHESIZER– composes final answer

A Supervisor (controller) node that decides which agent acts next and when to finish.

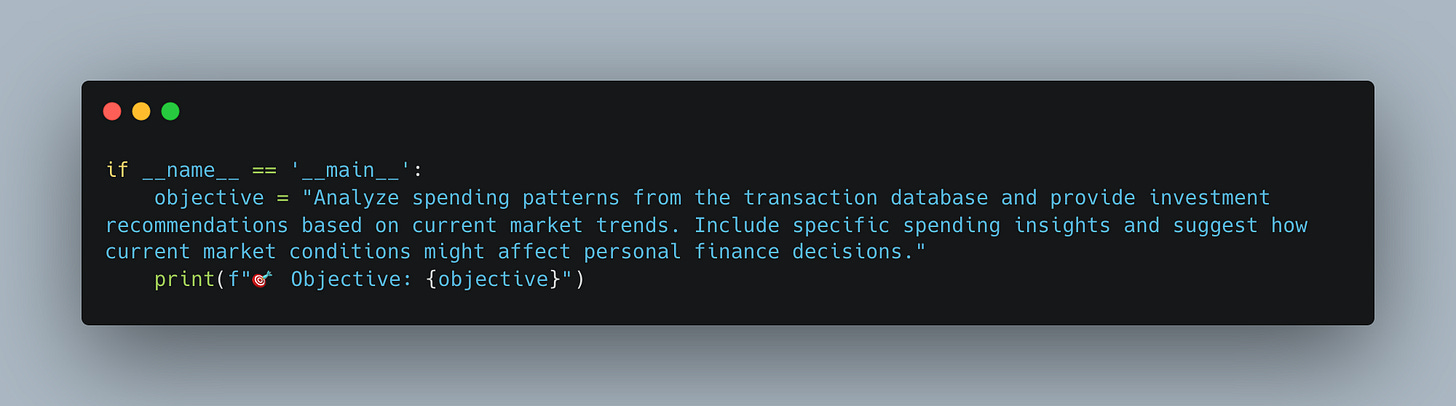

The example objective (modifiable) asks for structured spending insights + a current external headline, forcing cross‑agent collaboration.

Here is a visual of how this multi-agent (Agentic AI) workflow looks conceptually.

              +------------------+
              |    SUPERVISOR    |
              |  (decide next)   |
              +---------+--------+
                        |  (route: SQL_AGENT / WEB_AGENT / SYNTHESIZER / FINISH)
          +-------------+--------------+
          |                            |
    +-----v------+              +------v------+   +---------------+
    | SQL_AGENT  |              |  WEB_AGENT  |   |  SYNTHESIZER  |
    |  (DB SQL)  |              | (search+fx) |   | (final blend) |
    +-----+------+              +------+------+   +---------------+
          |                            |
          +-------------+--------------+
                        |
                    (back to)
              SUPERVISOR --> FINISH

This makes sense, right? Add your own context here that works for you. We basically want a few different agents to do specific tasks, and then bring it all together at the end so we can “get the best result possible.” Maybe your business context would be something different; use your imagination.
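The routing loop in the diagram can be approximated without any framework at all. The sketch below is not the repo's code and not LangGraph's API: the supervisor is a rule-based stand-in for the LLM, the workers return canned strings, and `max_steps` plays the role of a recursion limit. All names are assumptions for illustration.

```python
from typing import Callable, Dict, List

State = Dict[str, List[str]]  # shared state: a list of worker outputs


def sql_agent(state: State) -> str:
    return "SQL_AGENT: top category is groceries"  # canned, no real database


def web_agent(state: State) -> str:
    return "WEB_AGENT: one current headline"  # canned, no real search


def synthesizer(state: State) -> str:
    return "SYNTHESIZER: " + " | ".join(state["outputs"])


WORKERS: Dict[str, Callable[[State], str]] = {
    "SQL_AGENT": sql_agent,
    "WEB_AGENT": web_agent,
    "SYNTHESIZER": synthesizer,
}


def supervisor(state: State) -> str:
    """Rule-based stand-in for the supervisor LLM: pick whoever has not run yet."""
    done = {out.split(":", 1)[0] for out in state["outputs"]}
    for name in WORKERS:
        if name not in done:
            return name
    return "FINISH"


def run(max_steps: int = 10) -> State:
    state: State = {"outputs": []}
    for _ in range(max_steps):  # crude recursion limit
        choice = supervisor(state)
        if choice == "FINISH":
            return state
        # Run the chosen worker, store its output, go back to the supervisor.
        state["outputs"].append(WORKERS[choice](state))
    raise RuntimeError("step limit reached without FINISH")


final_state = run()
print(final_state["outputs"][-1])
```

Swap the body of `supervisor` for an LLM call and the workers for real tool-using agents, and the control flow stays the same.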

The directory of the repo looks as follows. I just want to point out there is a lot of “boilerplate” and “routing” code. It’s about weaving the spaghetti dinner together more than some new novel AI thingy.

    ├── docker-compose.yml
    ├── app/
    │   ├── Dockerfile
    │   ├── requirements.txt
    │   ├── config.py      # Environment & model settings
    │   ├── tools.py       # SQL & web tools
    │   ├── agents.py      # Worker agent constructors & prompts
    │   ├── memory.py      # Lightweight conversation state
    │   ├── graph.py       # LangGraph build (state + nodes + edges)
    │   └── run.py         # Entry point (sets objective & streams graph)
    └── postgres/
        ├── Dockerfile
        └── init.sql       # Schema + seed data

For the concepts we are talking about today, multi-agents, let’s just focus on the agents themselves, what they are doing and how they work together.

I suggest you go browse the code, but here is an overview.

Graph-Based Workflow (LangGraph)

The system uses LangGraph to create a directed graph where:

- Nodes: Represent agents or decision points

- Edges: Define the flow between agents based on conditions

- State Management: Maintains conversation history and context across agent interactions

- Recursion Control: Prevents infinite loops with configurable recursion limits

Tool Integration

Each agent has access to specialized tools:

- SQL Tool: Connects to PostgreSQL database for transaction analysis

- Web Search Tool: Searches for current market trends and financial news

- Fetch Tool: Retrieves detailed content from web pages

How It Works

- Supervisor Entry – Graph entry point is supervisor, which inspects conversation state + objective.

- Decision – Supervisor LLM outputs either an agent name or a termination marker (interpreted as FINISH).

- Worker Execution – Selected worker runs via its AgentExecutor with only its allowed tools.

- Conversation Update – Worker output appended to shared state.

- Loop – Control returns to supervisor until it selects SYNTHESIZER and then finishes, or decides it already has enough context.

The SYNTHESIZER agent integrates prior SQL + web outputs into the final narrative.
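The Decision step hinges on turning free-form LLM text into a route. Here is one way that parsing might look. It is a guess at the shape of the problem, not the repo's implementation: `parse_decision` is a hypothetical helper, and treating anything unrecognized as FINISH is a design assumption (a stricter version could raise instead).

```python
import re

AGENTS = ("SQL_AGENT", "WEB_AGENT", "SYNTHESIZER")

# Match any agent name as a whole token, wherever it appears in the text.
_AGENT_PATTERN = re.compile(r"\b(" + "|".join(AGENTS) + r")\b")


def parse_decision(llm_text: str) -> str:
    """Map supervisor output to an agent name, defaulting to FINISH."""
    match = _AGENT_PATTERN.search(llm_text.upper())
    if match:
        return match.group(1)  # first agent name mentioned wins
    # Anything else ("FINISH", "done", empty output) ends the loop.
    return "FINISH"


print(parse_decision("Next: web_agent"))
print(parse_decision("We have enough context. FINISH"))
```

Defaulting to FINISH means a confused supervisor stops the run instead of looping forever, at the cost of sometimes stopping early.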

Let’s just give a quick overview of what is inside each of the Python files that make up this Agentic AI workflow.

They all work together to make up the entire system.

1. Agent System

Supervisor Agent: Uses a structured prompt to decide which worker agent should handle tasks

Worker Agents: Each has a specific role, instructions, and access to relevant tools

Prompt Engineering: Carefully crafted prompts ensure agents use their tools effectively

2. Graph Workflow

Node Creation: Dynamically creates nodes for each worker agent

Conditional Edges: Routes between agents based on supervisor decisions

State Management: Maintains conversation context and user objectives

3. Tool System

SQL Database Tool: Executes SQL queries against PostgreSQL

Web Search Tool: Performs web searches for current information

Content Fetch Tool: Retrieves detailed content from URLs

4. Memory Management

ConversationState: Tracks all agent interactions and outputs

Message History: Maintains chronological conversation flow

Context Preservation: Ensures agents have access to previous insights

As you can see, it’s mostly about setting up a workflow and “pipeline” so our Agentic AI system can call the agents correctly and pull all the data together.
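The memory piece is the least mysterious of all. A minimal sketch of a shared conversation state might look like the following; the class name echoes the ConversationState mentioned above, but the fields and methods here are assumptions for illustration, not the repo's actual code.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ConversationState:
    """Hypothetical shared memory: the objective plus an ordered message log."""

    objective: str
    messages: List[Tuple[str, str]] = field(default_factory=list)  # (agent, text)

    def add(self, agent: str, text: str) -> None:
        # Append in arrival order so the history stays chronological.
        self.messages.append((agent, text))

    def context(self) -> str:
        # Render everything so far as one prompt-ready string.
        lines = [f"OBJECTIVE: {self.objective}"]
        lines += [f"{agent}: {text}" for agent, text in self.messages]
        return "\n".join(lines)


state = ConversationState("Summarize spending")
state.add("SQL_AGENT", "groceries were the largest category")
state.add("WEB_AGENT", "found one relevant headline")
print(state.context())
```

Every agent reads `context()` before it acts and calls `add()` when it is done, which is all "context preservation" needs to mean in a small system.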

Honestly, most of this stuff is boilerplate and would stay the same throughout any AI Agent workflow we made. It would be more about swapping out different agents for other things, like looking inside a vector database, going through our Google docs, hitting some API, whatever.
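To make the "swap agents in and out" point concrete, here is a rough sketch of a tool registry where each agent only sees the tools it is allowed to use. Everything in it is an assumption for illustration: SQLite stands in for Postgres so the example runs anywhere, the table contents are invented, `fake_search` replaces a real web search, and `call_tool` is a hypothetical helper rather than a LangChain API.

```python
import sqlite3
from typing import Callable, Dict, List


def make_sql_tool(conn: sqlite3.Connection) -> Callable[[str], str]:
    def run_sql(query: str) -> str:
        # Read-only guard: refuse anything that is not a SELECT.
        if not query.lstrip().lower().startswith("select"):
            return "error: only SELECT is allowed"
        return str(conn.execute(query).fetchall())
    return run_sql


def fake_search(query: str) -> str:
    # Placeholder for a real web search call.
    return f"(pretend search results for {query!r})"


conn = sqlite3.connect(":memory:")  # SQLite standing in for Postgres
conn.execute("CREATE TABLE transactions (category TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?)",
    [("groceries", 40.0), ("groceries", 60.0), ("fuel", 30.0)],
)

TOOLS: Dict[str, Callable[[str], str]] = {
    "sql": make_sql_tool(conn),
    "search": fake_search,
}
# Each agent sees only the tools listed for it.
ALLOWED: Dict[str, List[str]] = {"SQL_AGENT": ["sql"], "WEB_AGENT": ["search"]}


def call_tool(agent: str, tool: str, arg: str) -> str:
    if tool not in ALLOWED.get(agent, []):
        return f"error: {agent} may not use {tool}"
    return TOOLS[tool](arg)


totals_query = (
    "SELECT category, SUM(amount) FROM transactions "
    "GROUP BY category ORDER BY category"
)
print(call_tool("SQL_AGENT", "sql", totals_query))
print(call_tool("WEB_AGENT", "sql", "SELECT 1"))
```

A new agent for a vector database or an internal API would be one more entry in `TOOLS` and one more line in `ALLOWED`; the rest of the plumbing would not change.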

So enough with that stuff, does it work?

    git clone git@github.com:danielbeach/agenticAIagent.git
    cd agenticAIagent
    export OPENAI_API_KEY=sk-... # your key
    docker compose up --build

You can see the question I hardcoded into this example.

I will save you the pain of reading the entire output. I suggest you clone the repo, export your OPENAI_API_KEY, and run docker compose up to see what happens yourself. Here is a little bit of what is printed to STDOUT.

Basically … it works. It might seem overwhelming, but it really isn’t; once you step through the code, it’s not that many lines. We build a few agents, chain them together in a graph, etc, etc.

The sky is the limit at this point as you can see. You could, for whatever your use-case is, build specific agents to do other things, look over StackOverflow, read DuckDB docs, go read some database, etc, etc.

The obvious thing here is that you can make an “Agent” out of whatever your little heart desires. This sort of thing really can make AI better at solving specific tasks.

Just a whole lotta for-loops and coordination between tasks.

Closing thoughts.

I hope I was able to demystify “Agentic AI,” stripping away most of the hype to show it’s just a little engineering: orchestrating multiple narrow, tool‑using AI agents instead of leaning on one monolithic LLM.

Rather than magic, it’s a familiar data / ETL pipeline pattern: break a high‑level goal into sub‑tasks, route work to specialized components, gather outputs, synthesize a final answer.

We used LangGraph + LangChain and built a Supervisor node that iteratively decides which worker to invoke next (SQL agent for Postgres queries, Web agent for search + fetch, and a Synthesizer to compose the final response).

Each agent has tightly scoped tools and prompts; shared state (memory) preserves intermediate results; the graph enforces control flow, and its recursion limit prevents infinite loops. Most of the code is reusable boilerplate; swap in other agents (vector DB lookup, internal docs crawler, API caller) as needed.

“Agentic AI” is just structured decomposition + routing + tool execution + aggregation. Treat agents like functions: input → output → pass along, repeated until the supervisor judges the objective satisfied.

In the end, Agentic AI is an extensible pattern for scaling specificity and reliability—essentially a programmable workflow wrapped around LLM calls—promising better, more context‑rich results through modular specialization.

Key Takeaways

- Not magic: it’s modular orchestration of specialized LLM-powered tools.

- Supervisor pattern routes tasks; workers stay narrowly focused.

- LangGraph models the workflow as nodes (agents) + conditional edges.

- Memory/state glue the steps; prompts + tool limits enforce role discipline.

- Easy extensibility: add/replace agents for new data sources or functions.

- Think “data pipeline / DAG” applied to AI reasoning and action execution.
