Apache Iceberg on Databricks

even if it is a bad idea

At this point, I’m not sure if the news that Databricks has full support for Apache Iceberg is just a token, an offering to appease the rambunctious rabble and data gods, a way to quiet the complainers and keep lightning from falling from the sky.

Not sure. Maybe all of the above.

On the other hand, it can be seen as a testament to Apache Iceberg's enduring strength, as well as its widespread acceptance in the Lake House ecosystem as a tier-1 option. However, I find it inferior to Delta Lake, primarily because it lacks full support in the broader Data Engineering tooling landscape, such as DuckDB.

It is what it is.

Thanks to Delta for sponsoring this newsletter! I personally use Delta Lake on a daily basis, and I believe this technology represents the future of Data Engineering. Content like this would not be possible without their support. Check out their website below.

What, you think it’s funny a newsletter sponsored by Delta Lake is writing about Apache Iceberg???!!! That should tell you something about the shifting landscape of the Lake House.

Today, we shall be the seekers of truth; we shall plumb the depths of Apache Iceberg on Databricks to see if the truth will set us free. Can you read and write Iceberg data with third-party tooling, both inside and outside the Databricks compute platform?

In theory, this should be possible thanks to Unity Catalog and the new Iceberg REST API.

“First, you can now read and write Managed Iceberg tables using Databricks or external Iceberg engines via Unity Catalog’s Iceberg REST Catalog API.” - announcement

I’m not sure if this will slowly melt the ice-cold (pun intended) hearts of all those angry Apache Iceberg trolls who’ve been ticked at Databricks for not supporting Iceberg more.

Setting the Stage.

Lucky for us, those nerds over at Databricks never fail to serve up a pile-o-docs to go along with every new thingy they release, full support for Apache Iceberg being no different.

A few notes that I don’t care about, but that I feel obligated to list.

Apache Iceberg support in Databricks comes via two routes.

Unity Catalog managed Iceberg

“Foreign Catalog” table.

You can create Iceberg tables via normal SparkSQL on the Databricks Runtime (DBR), or with an external engine.

This sort of external engine + Databricks Iceberg access requires you to throw salt over your left shoulder, speak the secret incantation in Latin, and meet a wizard under a tree at midnight.

Enable External data access for your metastore

Grant the principal configuring the integration the EXTERNAL USE SCHEMA privilege on the schema

Authenticate using a Databricks personal access token

Aka … they want you to get tired of such things and use Delta Lake … (not going to lie, that’s what I think you should do).

Trying it out with code … and Polars + PyIceberg.

To make sure we can do what Databricks says we can, let’s use Polars + PyIceberg (because Iceberg sucks) on our local machine and see if we can reach out and …

create a Unity Catalog Iceberg table

write data to that table

read data back from table

Again … this will be done with compute and tooling OUTSIDE of the Databricks environment. I mean this is the whole point behind that great announcement after all.

Let’s start by using the Divvy Bike Trips open source dataset.

Should be simple enough to turn this data into a Databricks Iceberg table with PyIceberg and Polars. Running something like the following in a python:latest Docker image … will get you up and running.

docker run -it python:latest /bin/bash

>> apt-get update

>> apt-get install vim wget

>> wget https://divvy-tripdata.s3.amazonaws.com/202505-divvy-tripdata.zip

>> unzip 202505-divvy-tripdata.zip

>> vim test.py # copy code in here

>> pip3 install polars pyiceberg pyarrow

>> python3 test.py

And the code …
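What follows is a minimal sketch of what a test.py like this could look like, not necessarily the exact script that was run. It makes a few assumptions worth calling out: the REST endpoint path (/api/2.1/unity-catalog/iceberg-rest) is what I understand the Databricks docs to specify, the DATABRICKS_HOST and DATABRICKS_TOKEN environment variable names are just a convention, the table name default.trips is made up, and the CSV name is assumed to match the zip file. The catalog (confessions) and schema (default) are the ones used in this post.

```python
import os


def unity_catalog_properties(workspace_url: str, token: str, uc_catalog: str) -> dict:
    """Build PyIceberg REST catalog properties pointing at Unity Catalog.

    Assumption: the Iceberg REST endpoint lives under
    /api/2.1/unity-catalog/iceberg-rest on the workspace URL.
    """
    return {
        "type": "rest",
        "uri": f"{workspace_url.rstrip('/')}/api/2.1/unity-catalog/iceberg-rest",
        "token": token,           # Databricks personal access token (PAT)
        "warehouse": uc_catalog,  # the Unity Catalog catalog name
    }


def write_trips(csv_path: str, identifier: str, props: dict) -> int:
    """Create an Iceberg table from the CSV, append its rows, return the row count."""
    # Imported here so the config helper above works without these packages installed.
    import polars as pl
    from pyiceberg.catalog import load_catalog

    catalog = load_catalog("unity", **props)
    arrow_table = pl.read_csv(csv_path).to_arrow()

    # create_table raises TableAlreadyExistsError if the table is already there.
    table = catalog.create_table(identifier, schema=arrow_table.schema)
    table.append(arrow_table)
    return arrow_table.num_rows


if __name__ == "__main__":
    host = os.environ.get("DATABRICKS_HOST")    # hypothetical variable name
    token = os.environ.get("DATABRICKS_TOKEN")  # hypothetical variable name
    if host and token:
        props = unity_catalog_properties(host, token, "confessions")
        # Assumed CSV name (from the zip) and a made-up table name.
        rows = write_trips("202505-divvy-tripdata.csv", "default.trips", props)
        print(f"appended {rows} rows")
    else:
        print("set DATABRICKS_HOST and DATABRICKS_TOKEN first")
```

Export the two environment variables inside the container before running python3 test.py, so the token never ends up hard-coded in the script.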

Notice how straightforward this is, all being done in a Docker container on my machine, reaching out to my Databricks Unity Catalog setup …

Well, this is interesting. Every time I run the code I get some conflicting errors from pyiceberg/Databricks.

I’m getting both NotAuthorized and TableAlreadyExistsError. The TableAlreadyExistsError can’t be true, because I tried random, different table names and the same error came through. Must be something with permissions.

I did create a brand new PAT (token) just for this, so that’s not it.

External data access is turned on … see below

I mean I guess I can grant myself, the Admin, more permissions explicitly?

GRANT EXTERNAL USE SCHEMA ON SCHEMA confessions.default TO `dancrystalbeach@gmail.com`

Well, that did the trick. Maybe I should just follow my own instructions in the first place.

Look-ye-thar.

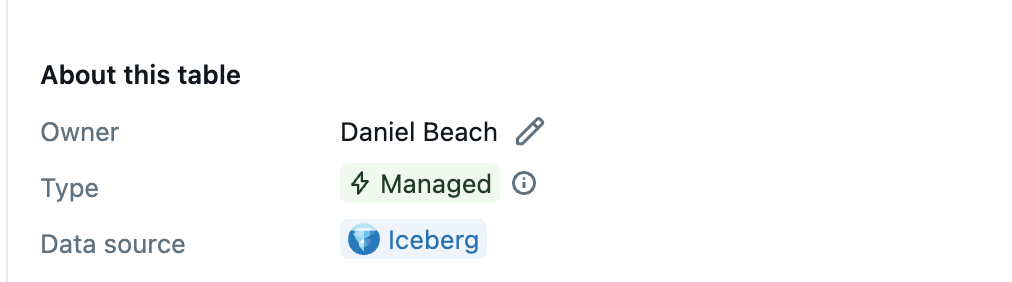

A nice Iceberg table appears in my Databricks Unity Catalog … and I wouldn’t even know it was an Iceberg table.

Of course, we can interact with this Apache Iceberg table in a Notebook with Databricks SQL like we would with any other Delta Table or whatever.

What about reading this data from our local machine with Polars?
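Reading it back could look something like the sketch below. Same caveats apply: the endpoint path, the environment variable names, and the table name default.trips are assumptions rather than anything Databricks or this post guarantees. It lets PyIceberg do the scan and hands the Arrow result to Polars.

```python
import os
from typing import Mapping


def props_from_env(env: Mapping[str, str], uc_catalog: str) -> dict:
    """Build PyIceberg REST catalog properties from environment-style settings.

    Assumptions: DATABRICKS_HOST / DATABRICKS_TOKEN are hypothetical variable
    names, and the endpoint path is /api/2.1/unity-catalog/iceberg-rest.
    """
    host = env["DATABRICKS_HOST"].rstrip("/")
    return {
        "type": "rest",
        "uri": f"{host}/api/2.1/unity-catalog/iceberg-rest",
        "token": env["DATABRICKS_TOKEN"],
        "warehouse": uc_catalog,
    }


def read_trips(identifier: str, props: dict):
    """Load the Iceberg table through Unity Catalog and return a Polars DataFrame."""
    # Imported here so the config helper above works without these packages installed.
    import polars as pl
    from pyiceberg.catalog import load_catalog

    table = load_catalog("unity", **props).load_table(identifier)
    # PyIceberg performs the scan; Polars just wraps the resulting Arrow table.
    return pl.from_arrow(table.scan().to_arrow())


if __name__ == "__main__":
    if "DATABRICKS_HOST" in os.environ and "DATABRICKS_TOKEN" in os.environ:
        df = read_trips("default.trips", props_from_env(os.environ, "confessions"))
        print(df.head())
    else:
        print("set DATABRICKS_HOST and DATABRICKS_TOKEN first")
```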

and the results …

There you have it, Databricks didn’t lie to us. All hail the new Thanos of Catalogs. Unity Catalog wins all.

To be honest, you can set up a Databricks account and run it for basically no cost (I do), and now you have a catalog you can use to manage all your Apache Iceberg tables???

No setting up a third-party catalog and managing it, you get all the benefits of Databricks … AND an easy-to-use and bulletproof Apache Iceberg Catalog (Unity) that works with Delta Lake too??? The future is here, my friend.

Why the crap would you use anything else is beyond me.