Are Data Contracts For Real?

Or Just More Snake Oil?

I’m just as surprised as you that I haven’t gotten to them yet. Data Contracts. I felt like 2023 was the year of Data Contracts, at least for anyone in the data space cruising around LinkedIn or Twitter, or other Substacks for that matter.

Sometimes I feel like the guy in the dark corner at the party around the witching hour of midnight, more interested in watching and listening to the blithering mumbo jumbo of the intoxicated masses screaming in each other’s ears about their awesome new gadget.

Sometimes you stumble onto an interesting topic and aren’t sure what to make of it. Maybe it’s the next hot thing, maybe not. Hard to tell. That’s Data Contracts for me.

Thanks to Delta for sponsoring this newsletter! I personally use Delta Lake on a daily basis, and I believe this technology represents the future of Data Engineering.

Just a quick word on the topic of tooling for Data Engineering.

We must sit down, look each other in the eyes, and tell the truth. Be honest with yourself about the Modern Data Stack. Real talk now.

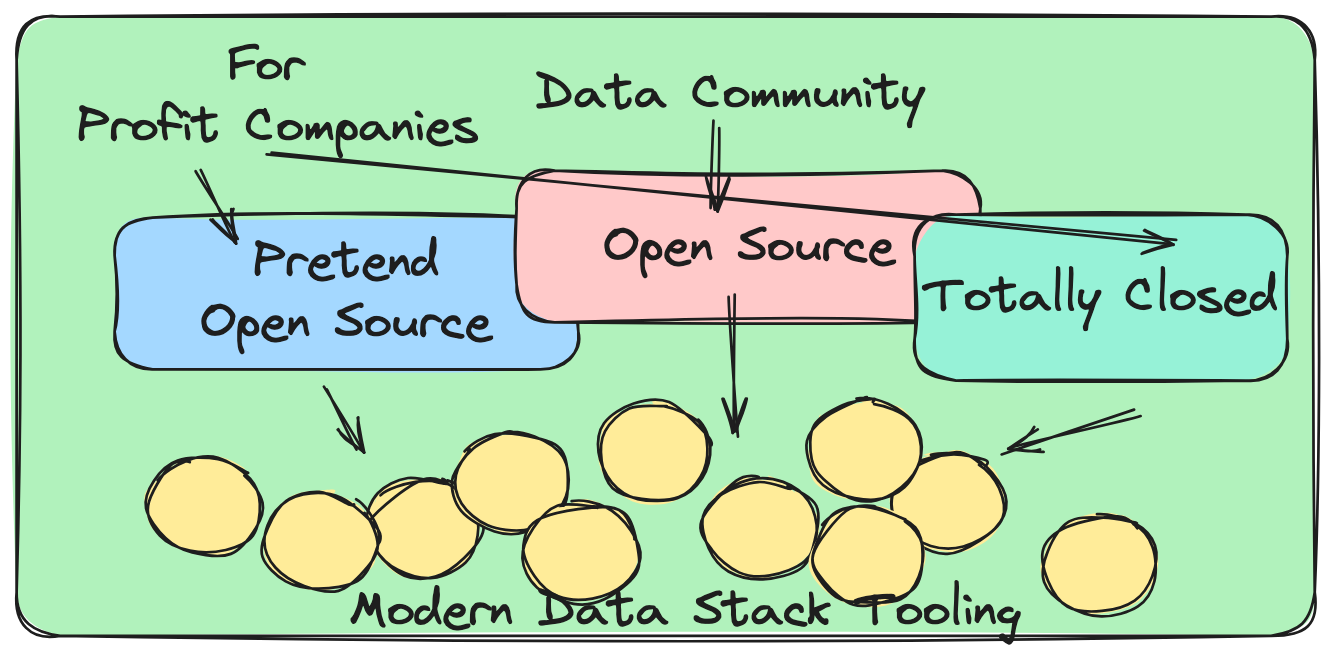

Where do innovations come from in the Data Engineering field? Generally speaking …

Springs up from the open-source community (sometimes from the overflow of a business … that wasn’t in that business).

Think Apache Airflow.

A for-profit company selling X product in the Data Space (could be on top of open-source, or brand new).

Think Snowflake, Databricks, etc.

It can be kinda hard to decipher where things come from and who the Wizard of Oz is behind the curtain.

Some companies can get it right, some can’t.

Does this matter? Yes and no.

Typically, if you have something like Apache Airflow that gets super popular because it solves a real problem … you know that tool is legit, because the community gets behind it and a wellspring of development and usage follows. You also then get companies improving on that model and selling something that fills the “gaps” in that product.

Also, you get brand-new things. Think Snowflake. They see an opportunity in the Data Warehouse and Data Lake world, maybe Redshift sucks, and they say, “We can do better”, and they build something better.

This is where it gets complicated.

And then there is the last category of products, the one I try to be most careful with.

That is, companies who try to sell something first, make a piece or part of that product open-source, and push that new “idea,” and inevitably their product, onto the wider Data community, whether it wants it or not.

Does it work out sometimes? Yes. Does it not work out sometimes? Yes.

Who decides? The Data community decides. They either, regardless of marketing, find real value in the tool and ideas, and adopt them at large, or they do not.

Maybe just a small subset of glassy-eyed believers run around in circles frothing at the mouth and screaming “Do this or die.”

Data Contracts in the Wild.

This is where we get to the topic of Data Contracts. Hear me out. I will try to give them a fair chance. Let’s hear what The Great Ones have to say.

But, here is how I’ve seen the Data Contract discussion come about.

One or two “popular” people start bringing up the topic out of nowhere.

Start doing lots of footwork, meetings, talks, and content, all about this topic.

Start a company.

All while acting like the “topic” is core to the success of the Data community, and hiding the fact that “they” are selling something.

But, who am I to throw stones? I like America. You can build what you want and sell to whoever you want. It’s the market that will decide.

I just can’t figure out if the Data Community has “decided” or “had the final word” on Data Contracts. ARE THEY IN, OR ARE THEY OUT???

I took my curiosity to r/dataengineering to see what was up. Are people talking about Data Contracts much? Not at all.

There are just a few posts over the last few years, and a lot of people wondering about them. Asking about them. No one really using them.

Also, places like LinkedIn have been unusually quiet on the subject. It’s kinda like sticking your finger in the wind, trying to figure out if it’s going to rain tomorrow. Anecdotally though, it appears the Data Contract buzz was small and short-lived.

Why are Data Contracts Dying on the Vine?

I’m going to do my best to sum up what I see as the problem with Data Contracts. Although, I have to say, the idea of data having a contract and being trustworthy … who can argue with that?? Of course, we like that idea.

So, why are Data Contracts dying on the vine?

The concept can’t be fully explained in a few sentences.

Its content focuses too much on APIs.

Data Quality tools are seen as “good enough” in the Data landscape.

Cost matters now more than it did 2 years ago.

The “implementations” are too “software engineering-ish” for most Data Engineers and teams.

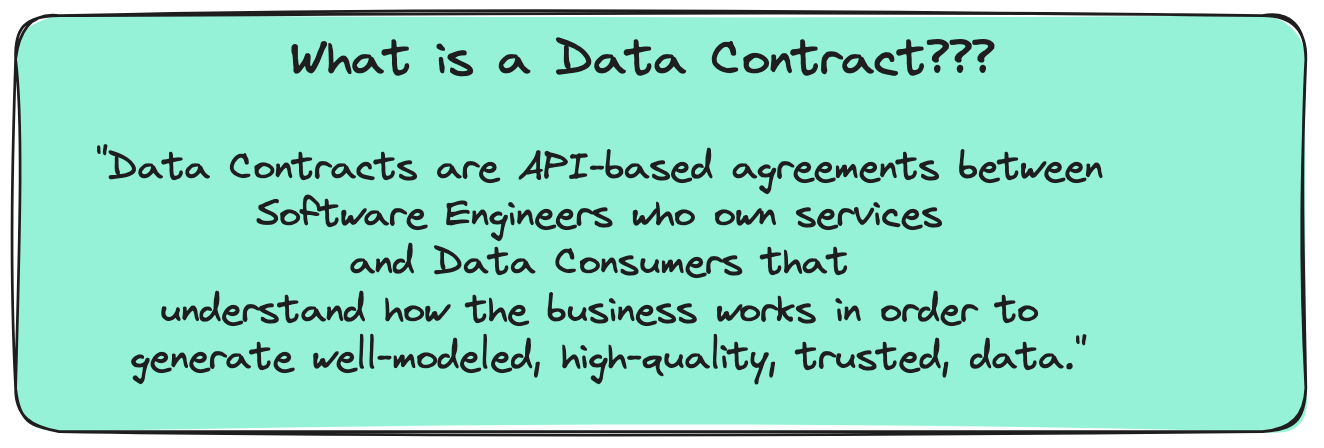

I read through this content to try and understand truly what a Data Contract is.

Well, of course, that sounds OK. Also, “Data Contracts cover both schemas and semantics.”

The devil is in the details on this one, I imagine. Here is a list of the main points the Data Contract folks talk about. These are quoted directly from the horse’s mouth.

Data contracts must be enforced at the producer level.

Data contracts are public.

Data contracts cover schemas.

Data contracts cover semantics.
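To make those four points a little more concrete, here is a minimal sketch in Python. It assumes a hypothetical “orders” feed; the field names, the rules, and the `contract_violations` and `publish` helpers are illustrative inventions, not part of any Data Contract specification or product. The schema dict stands in for “schemas,” the rule dict for “semantics,” and `publish` enforces both at the producer, before a record ever leaves.

```python
from typing import Any, Callable

# Hypothetical contract for an "orders" feed. Names and rules are illustrative only.
# "Schemas": field name -> expected Python type.
SCHEMA: dict[str, type] = {
    "order_id": int,
    "customer_email": str,
    "amount_usd": float,
}

# "Semantics": what a correctly typed value must also satisfy to be valid.
SEMANTICS: dict[str, Callable[[Any], bool]] = {
    "order_id": lambda v: v > 0,
    "customer_email": lambda v: "@" in v,
    "amount_usd": lambda v: v >= 0.0,
}


def contract_violations(record: dict[str, Any]) -> list[str]:
    """Return human-readable violations; an empty list means the record conforms."""
    problems = [f"missing field: {f}" for f in sorted(SCHEMA.keys() - record.keys())]
    problems += [f"unexpected field: {f}" for f in sorted(record.keys() - SCHEMA.keys())]
    for field, expected in SCHEMA.items():
        if field not in record:
            continue
        value = record[field]
        if not isinstance(value, expected):
            problems.append(f"{field}: expected {expected.__name__}, got {type(value).__name__}")
        elif not SEMANTICS[field](value):
            # Only check meaning once the type is right.
            problems.append(f"{field}: value {value!r} breaks its semantic rule")
    return problems


def publish(record: dict[str, Any], sink: list) -> None:
    """Producer-side enforcement: a record that breaks the contract never leaves the producer."""
    problems = contract_violations(record)
    if problems:
        raise ValueError("contract violation: " + "; ".join(problems))
    sink.append(record)  # stand-in for a real queue, topic, or table write
```

Keeping the contract in a plain module that both producer and consumer can import is one way to make it “public”; that is an assumption of this sketch, not something the Data Contract folks prescribe.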

Again, these things are not bad in themselves, but they lack rubber-meets-the-road pieces, and they lean heavily toward APIs, which is going to fail in the Data Engineering world at large.

I thought I could get real answers by looking into the “implementation” of Data Contracts. Unfortunately, this is where the trail starts to turn cold.

There are two tools, both of which I am familiar with and have used, which are suggested for the actual implementation and encoding of Data Contracts.

Google's Protocol Buffers (protobuf), and Apache Avro.

It appears to me that Data Contracts are, at a simplified level, an idea of using existing tooling (they picked the wrong ones) to enforce the “ideal” way to “solve common data problems” by ensuring nothing can “just change.”
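In practice, “nothing can just change” boils down to a check that runs before a producer ships a new schema version. The sketch below uses a deliberately simplified, conservative rule set loosely inspired by the compatibility checks schema registries run for Avro; it is not Avro’s actual schema-resolution logic, and `breaking_changes` plus the example schemas are hypothetical.

```python
from typing import Any

# Field name -> {"type": <type name>, optional "default": <value>}
Schema = dict[str, dict[str, Any]]


def breaking_changes(old: Schema, new: Schema) -> list[str]:
    """List changes in `new` that could break consumers of data written with `old`.

    Simplified, conservative rules (an assumption of this sketch):
      * removing a field breaks anyone who reads it,
      * changing a field's type breaks readers and existing data,
      * adding a field with no default leaves older records unable to supply it.
    """
    changes = []
    for field in sorted(old.keys() - new.keys()):
        changes.append(f"removed field '{field}'")
    for field in sorted(old.keys() & new.keys()):
        if old[field]["type"] != new[field]["type"]:
            changes.append(
                f"type of '{field}' changed: {old[field]['type']} -> {new[field]['type']}"
            )
    for field in sorted(new.keys() - old.keys()):
        if "default" not in new[field]:
            changes.append(f"added field '{field}' without a default")
    return changes


V1: Schema = {"order_id": {"type": "long"}, "amount_usd": {"type": "double"}}
# Adding an optional field with a default is treated as safe.
V2_COMPATIBLE: Schema = {**V1, "currency": {"type": "string", "default": "USD"}}
# Drops a field, retypes another, and adds a required one: all three are flagged.
V2_BREAKING: Schema = {"order_id": {"type": "string"}, "currency": {"type": "string"}}

for change in breaking_changes(V1, V2_BREAKING):
    print("BLOCKED:", change)
```

Wired into a producer’s CI, a non-empty result would block the release. That gate is the whole trick; the hard part, as the rest of this post argues, is getting producers to run it at all.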

There is a real problem being addressed here.

As someone who’s worked around data for decades, I can sympathize with this problem. It’s been around a long time. Data doing whatever it wants, things changing without notice, cascading problems going through the system.

This is true.

I’m just not sold (and apparently neither is the rest of the Data Community) that Data Contracts are going to be the silver bullet to kill the werewolf of bad data lurking at the door.

Why aren’t Data Contracts catching on?

From my point of view, this is very easy to spot. Call me biased or jaded, because I am, but people are people.

“Most” of the average Data Platforms aren’t API-centric.

“Most” or “many” producers of data are out of our control … either completely outside our company or might as well be.

Data Quality tooling appears to be a good enough way to deal with this problem (think Great Expectations, Soda Core, etc.).

They picked technologies Data Engineers simply will not use: Protobuf and Avro, both of which have been around forever and have limited adoption in Data Engineering.

You need SQL and Python to win the hearts and minds of Data folk.
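For comparison, here is roughly what the “good enough” consumer-side approach looks like when it is just SQL and Python. This is not the Great Expectations or Soda Core API; it is a plain `sqlite3` stand-in for a warehouse, with a hypothetical `orders` table and checks written as queries that count offending rows (zero means pass). It needs nothing from the upstream producer, which is exactly its appeal when producers are out of your control.

```python
import sqlite3

# Each check is a query returning the number of offending rows; 0 means the check passes.
CHECKS = {
    "order_id is never null": "SELECT COUNT(*) FROM orders WHERE order_id IS NULL",
    # COUNT(col) skips NULLs, so this counts duplicate non-null ids.
    "order_id is unique": "SELECT COUNT(order_id) - COUNT(DISTINCT order_id) FROM orders",
    "amount_usd is never null": "SELECT COUNT(*) FROM orders WHERE amount_usd IS NULL",
    "amount_usd is non-negative": "SELECT COUNT(*) FROM orders WHERE amount_usd < 0",
}


def run_checks(conn: sqlite3.Connection) -> dict[str, int]:
    """Run every check and return {check name: offending row count}."""
    results = {}
    for name, sql in CHECKS.items():
        (bad_rows,) = conn.execute(sql).fetchone()
        results[name] = bad_rows
    return results


# Demo: land a small batch from an upstream producer we do not control.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, amount_usd REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, 10.0), (2, -5.0), (2, 3.0), (None, 1.0)],
)
results = run_checks(conn)
failed = [name for name, bad_rows in results.items() if bad_rows > 0]
print(failed)
```

The checks react to bad data after it lands instead of preventing it, but every line of it is something a typical Data Engineer can read, write, and own.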

At this point, I’m just feeling bad for Data Contracts.

It’s true. I thought I was going to be able to deride and smash into dust the golden calf of Data Contracts. I can’t quite get myself to raise my heel and strike the blow.

I feel bad for them more than anything. They must be lonely.

I understand the problem that Data Contracts are trying to solve. I think the fumble was not providing a solution that people would get on board with. Simple as that.

Why did Databricks win? Because they did something simple. Packaged Spark and a Notebook in a way that literally anyone could use. Anyone who had used EMR in the past never looked back.

Do you want me to use Data Contracts to control my data? Fine. Sounds good.

You want to talk about APIs, Protobuf and Avro? You want me to chase down people I have no control over? Not going to happen, Sunny Jim.

Sure, you will find your pockets of people and teams adopting Data Contracts, probably some massive Engineering teams where someone at the top decided it was a good idea and has plenty of Software Engineers to do the dirty work.

Times have changed, and Data Engineers have not. Is it SQL? Is it Python? You have a chance. Do you want to implement complex new processes, APIs, and technologies that no one uses like Protobuf and Avro? Keep dreaming.

Good idea. Bad packaging.

There was one comment out there on Reddit that suggested getting contracts implemented required playing an elaborate game of politics that blamed upstream teams for warehouse downtime in post-mortems. Good luck doing that most places without getting yourself thrown in the bin...

Awesome write-up. I agree the idea sounds good, and we, as data engineers, have been fighting with bad data for decades. We just called it schema change or evolution.

IMO, Data quality tools integrated into orchestrators are the way, especially if the orchestrator is data-asset-driven in a declarative way. Meaning you can create assertions on top of data assets (your dbt tables, your data marts), not on data pipelines. So every time a data asset gets updated, you are certain the "contract" (the assertions) holds.
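The idea of attaching assertions to data assets rather than to pipelines can be sketched in a few lines. The `asset_check` decorator and `materialize` function below are hypothetical, written only to illustrate the pattern; real asset-aware orchestrators have their own, different APIs for this.

```python
from typing import Callable

Rows = list[dict]

# Registry of assertions, keyed by asset name rather than by pipeline or task.
_ASSERTIONS: dict[str, list[Callable[[Rows], bool]]] = {}


def asset_check(asset_name: str):
    """Hypothetical decorator: attach an assertion function to a named data asset."""
    def register(fn: Callable[[Rows], bool]) -> Callable[[Rows], bool]:
        _ASSERTIONS.setdefault(asset_name, []).append(fn)
        return fn
    return register


def materialize(asset_name: str, build: Callable[[], Rows]) -> Rows:
    """Rebuild an asset, then run every assertion registered for it before returning."""
    rows = build()
    failures = [fn.__name__ for fn in _ASSERTIONS.get(asset_name, []) if not fn(rows)]
    if failures:
        raise RuntimeError(f"asset {asset_name!r} failed checks: {failures}")
    return rows


@asset_check("daily_revenue")
def has_rows(rows: Rows) -> bool:
    return len(rows) > 0


@asset_check("daily_revenue")
def no_negative_revenue(rows: Rows) -> bool:
    return all(row["revenue"] >= 0 for row in rows)
```

Because the checks hang off the asset, any job that rebuilds `daily_revenue` runs them automatically, which is the point about the “contract” holding every time the asset updates.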