Daft vs Spark (Databricks) for Delta Tables (Unity Catalog)

... in real life

Daft is one of those tools I just can’t get enough of. I’m not sure what it is. Well, it might have something to do with stuff built in Rust, being incredibly fast, and wonderfully easy integrations with things like Delta Lake.

It never ceases to amaze me what gets popular and what doesn’t. Goes to show marketing matters. Today I want to see if we can swap out PySpark for Daft, on a Databricks workload, read Unity Catalog Delta Tables … and save money … aka make things run faster.

I have written about Daft before, how it is much faster than Polars, an impressive feat.

But I am curious if just pip installing Daft on a Databricks Cluster and running it instead of PySpark can be faster and save money in certain instances (where things could be done on a single node).

The truth is, and we all know it, we use Databricks across the board for all our workloads because it makes things simple and easy: one tool, one platform, it just reduces complexity. But there is always a subset of workloads that don’t NEED Spark to run.

Installing Daft on Databricks and reading Unity Catalog Delta Lake tables.

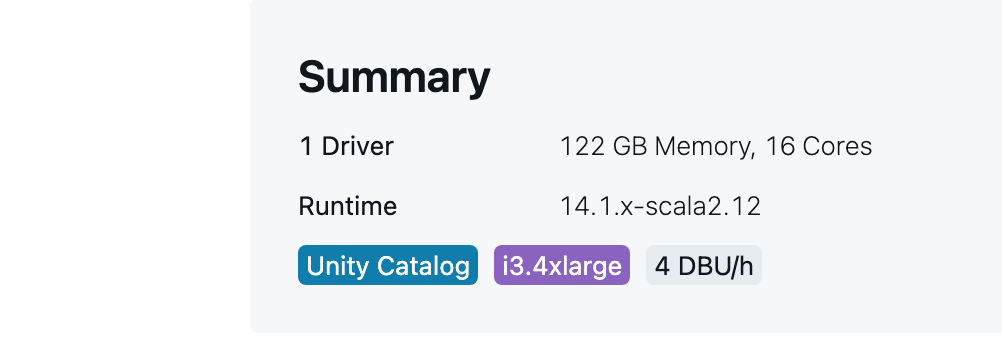

All this code was run on a single node Databricks Cluster, shown below.

Hopefully, this is boringly easy, good for real life, bad for Substack, but whatever. First things first, simply install Daft. You could do this in a Notebook, or you could do it with an `init.sh` script for a Databricks Job.

The init script might look like this …

    #!/bin/bash
    /databricks/python/bin/pip install getdaft[unity]==0.3.8 deltalake

or in a Notebook (if you’re evil like that) …

    %pip install getdaft[unity] deltalake
    dbutils.library.restartPython()

After that, the code to connect Daft to Unity Catalog is very straightforward and can be found in their docs. You just need your URL for Unity Catalog (your Databricks Account in this case), and a Token (you can generate a personal token via the Databricks UI).

Next, you do the obvious.
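The connect-and-read steps described above might look roughly like this. It is a minimal sketch, not the exact code from the run: the environment variable names `DATABRICKS_HOST` and `DATABRICKS_TOKEN` and the helper names are assumptions for illustration, and the table name is a placeholder.

```python
import os


def unity_settings(env=os.environ):
    """Collect the Unity Catalog URL and token (env var names are assumptions)."""
    return {
        "endpoint": env["DATABRICKS_HOST"].rstrip("/"),
        "token": env["DATABRICKS_TOKEN"],
    }


def read_unity_delta_table(table_name, settings=None):
    """Return a lazy Daft DataFrame for a Unity Catalog Delta table."""
    settings = settings or unity_settings()
    # Imported here so this module still loads where Daft is not installed.
    import daft
    from daft.unity_catalog import UnityCatalog

    unity = UnityCatalog(endpoint=settings["endpoint"], token=settings["token"])
    table = unity.load_table(table_name)  # "catalog.schema.table"
    return daft.read_deltalake(table)
```

Usage would be something like `df = read_unity_delta_table("my_catalog.my_schema.my_table")` followed by `df.show()`.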

At this point, we can see how utterly easy and painless this is, something that should not be lost on you, we should all get up and give a resounding round of applause to Daft, but let’s move on to crunch some data, and compare runtimes to Spark.

Did we speak too soon? First error! It's not that big of a surprise, there is always something they don’t tell you hiding in the corner. That’s real life.

This error will lead you to some Databricks documentation about “credential vending.” More or less, it’s the ability to allow “external engines” to get at Unity Catalog data.

As a side note, be careful with this. Databricks is pretty clear in the documentation that allowing external data access carries a lot of security risk, and the defaults block this sort of access for obvious reasons. Proceed with caution.

This process of “credential vending” looks something like this …
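As a rough sketch of the setup side, assuming external data access has already been switched on for the metastore, the per-schema grant is a single SQL statement. The helper name, catalog, schema, and principal below are hypothetical placeholders; check the Databricks documentation for the exact privileges your setup needs.

```python
def grant_external_use_schema(catalog, schema, principal):
    """Build the GRANT that lets a principal use a schema from an external engine."""
    return (
        f"GRANT EXTERNAL USE SCHEMA ON SCHEMA `{catalog}`.`{schema}` "
        f"TO `{principal}`"
    )


# Hypothetical names; in a Databricks notebook you would pass this to spark.sql(...)
statement = grant_external_use_schema("my_catalog", "my_schema", "someone@example.com")
print(statement)
```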

Once you have enabled “external data access” (GRANT) to a user(s) this should allow Daft to do the “credential vending” itself. Daft will do this part for you, very nice, rather than having to make the API calls yourself to get the creds.
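For a sense of what Daft is saving you from, here is a sketch of the kind of API call you would otherwise make to ask Unity Catalog for short-lived table credentials. It only builds the request and does not send it. The endpoint path and body shape follow my reading of the Databricks credential vending docs, so treat them as assumptions and verify against the current API reference; the function name is made up for illustration.

```python
import json
import urllib.request


def temporary_table_credentials_request(host, token, table_id, operation="READ"):
    """Build (but do not send) a request for temporary table credentials."""
    body = json.dumps({"table_id": table_id, "operation": operation}).encode("utf-8")
    return urllib.request.Request(
        # Assumed endpoint path; confirm in the Databricks REST API reference.
        url=f"{host.rstrip('/')}/api/2.1/unity-catalog/temporary-table-credentials",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending it with `urllib.request.urlopen(req)` would return the temporary cloud credentials for that table, which is the part Daft handles on your behalf.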

Another Error.

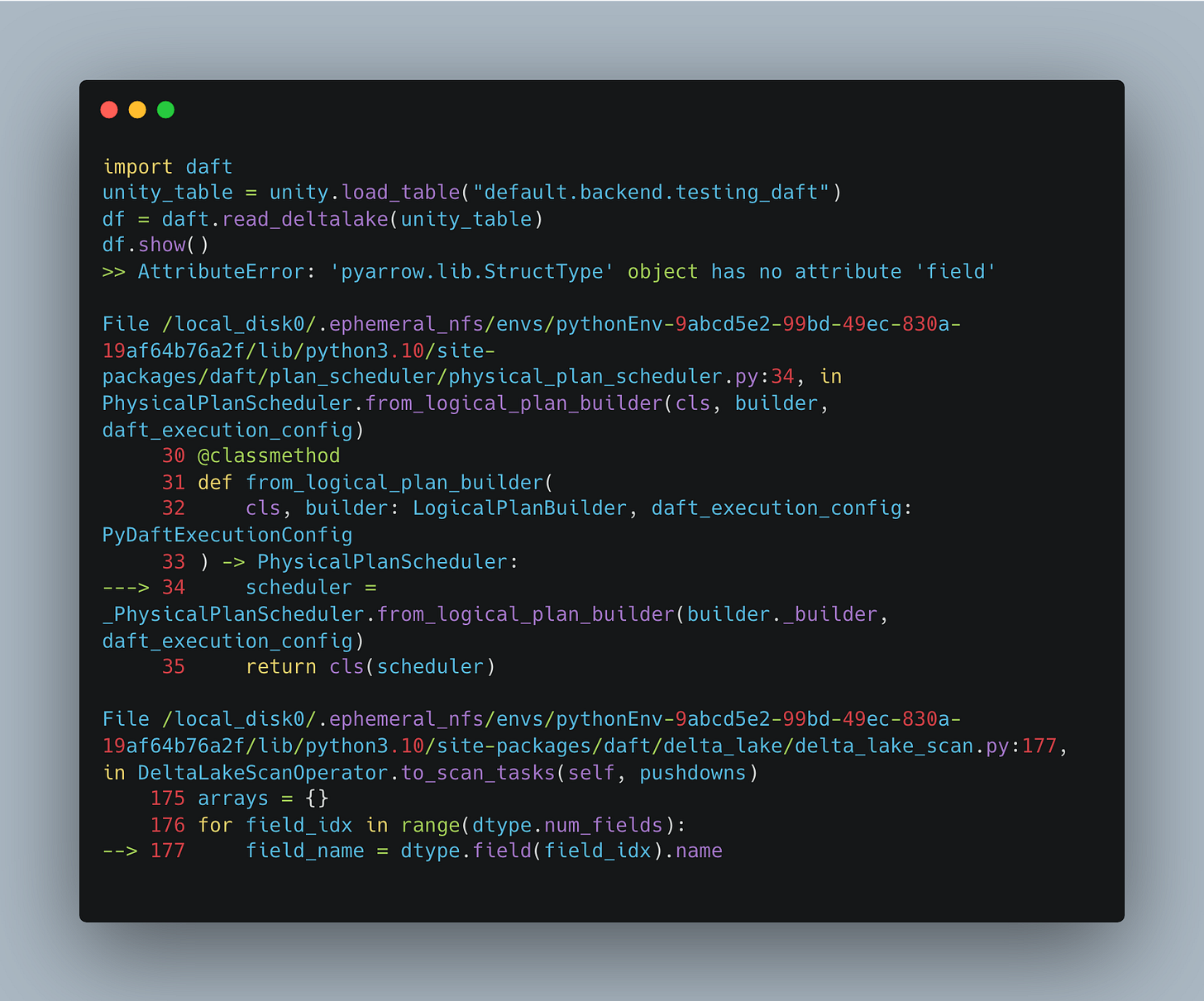

So now that we have solved the credentials/external engine data access … it’s time to look at the next error that popped up while trying to simply read a Databricks Unity Catalog table with Daft and do a simple show() on it.

We get an error that is of little help and makes no sense at all: “AttributeError: 'pyarrow.lib.StructType' object has no attribute 'field'”. I mean, it doesn’t seem to have anything to do with permissions, so we solved that issue, but this seems obscure and related to either the Daft or Delta Lake packages … or both.

Typically what I do when I hit this type of error is rewind the versions I’m installing. But, after thinking about it, I had an epiphany: when I pip installed `getdaft[unity]` and pip installed `deltalake` separately, I probably made the wrong decision. I need the `daft-deltalake` integration.

So to solve this problem I need to do …

Sorta obvious, but sorta not. The error was not helpful that’s for sure.

Now `count()`’ing the Daft dataframe actually works.

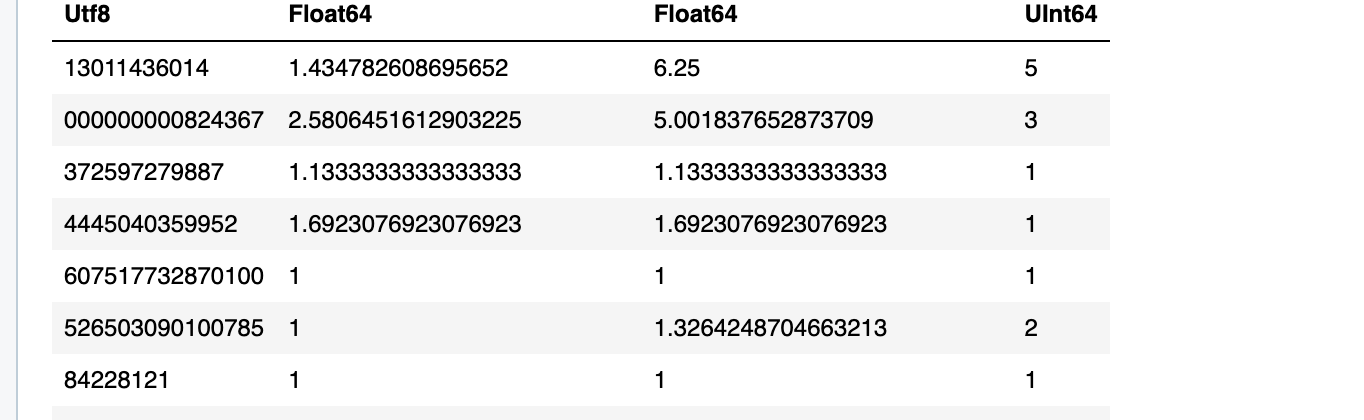

Success, now we have access to our Delta Lake table in Unity Catalog via Daft with 21 million records. This should be enough to run a simple aggregate with GROUPBY with Daft vs PySpark and see what’s shaking.

Spark on Databricks vs Daft (with Delta Lake) runtimes.

Ok, so we’ve done the work to get Daft working with a Databricks Cluster to read a Unity Catalog Delta Table, we got it hooked up to something with 21+ million records.

Let’s do a simple aggregation query and write results to s3 to see who’s faster, Daft or PySpark on Databricks.

You won’t believe the results. Insane.

(Note I had to hardcode my AWS Keys to get Daft to be able to write the results to s3.)
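A minimal sketch of what a Daft group-by plus write to s3 with explicit credentials could look like. This is not the exact query from the run: the column names and output path are hypothetical, and instead of hardcoding keys this version reads them from the standard AWS environment variables, which is an assumption about how you store them.

```python
import os


def s3_credentials(env=os.environ):
    """Read AWS keys from the environment rather than hardcoding them."""
    return {
        "key_id": env["AWS_ACCESS_KEY_ID"],
        "access_key": env["AWS_SECRET_ACCESS_KEY"],
        "region_name": env.get("AWS_REGION"),  # None lets Daft work it out
    }


def aggregate_and_write(df, group_col, value_col, out_path, creds):
    """Sum value_col per group_col on a Daft DataFrame and write Parquet to s3."""
    # Imported here so this module still loads where Daft is not installed.
    from daft.io import IOConfig, S3Config

    io_config = IOConfig(s3=S3Config(**creds))
    result = df.groupby(group_col).sum(value_col)
    return result.write_parquet(out_path, io_config=io_config)
```

Usage might be `aggregate_and_write(df, "customer_id", "amount", "s3://my-bucket/daft-results/", s3_credentials())`, where every one of those names is a placeholder.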

Remember this aggregation was/is happening on 21+ million records.

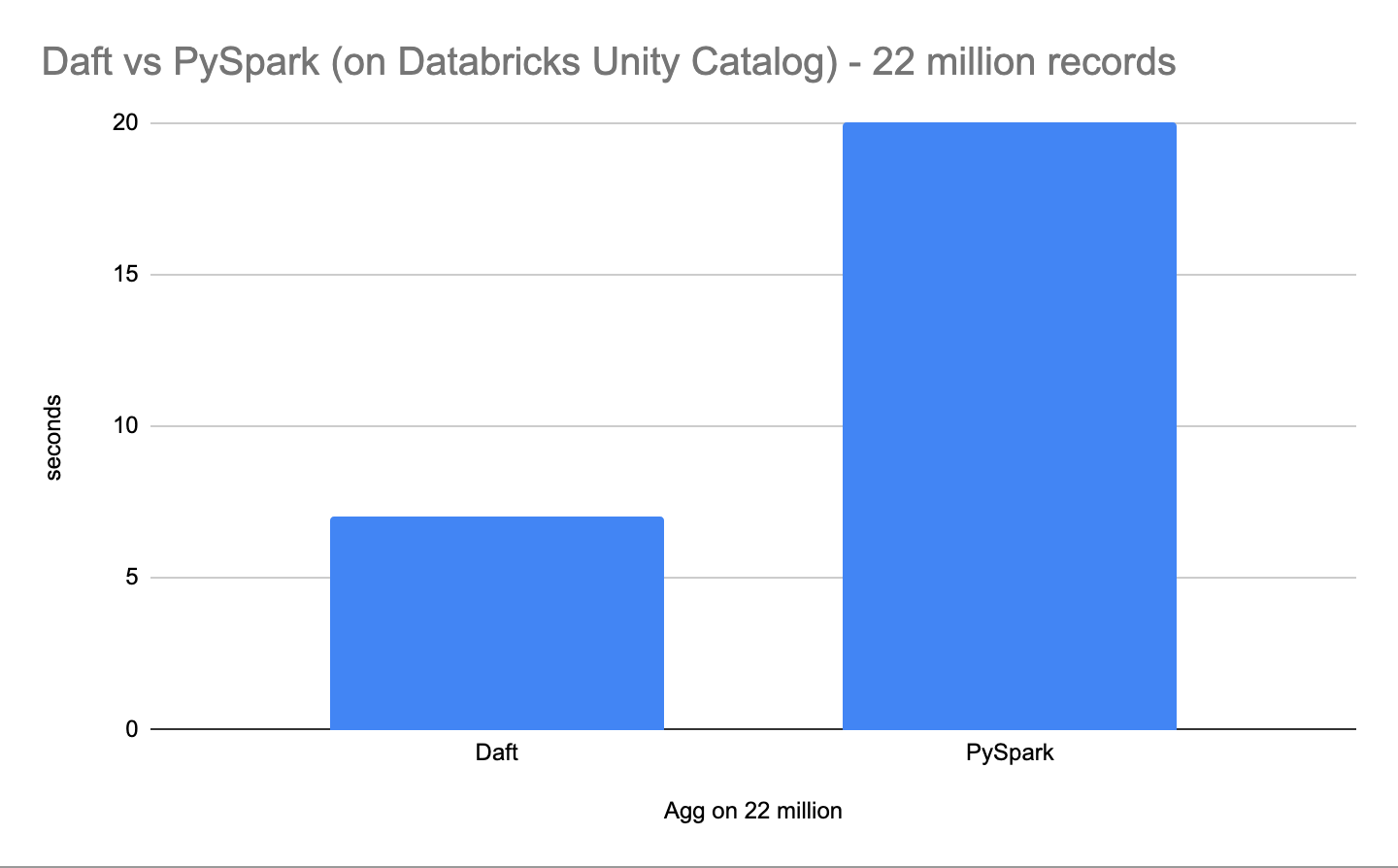

    >> 7s

That little bugger ran in 7 seconds. I had to double-check the results in s3 to make sure it actually worked, which it did.

Same thing in PySpark.

Now the moment of truth, let’s run that same code in PySpark on this Databricks cluster and see how long.
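A PySpark version of that kind of aggregation, with a crude wall-clock timer, could be sketched like this. Again, the table, columns, and path are hypothetical, `spark` is the session Databricks hands you in a notebook, and the overwrite mode is my assumption rather than something stated above.

```python
import time


def timed(fn, *args, **kwargs):
    """Run fn and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


def spark_aggregate_and_write(spark, table_name, group_col, value_col, out_path):
    """Sum value_col per group_col with PySpark and write Parquet to out_path."""
    (
        spark.table(table_name)
        .groupBy(group_col)
        .sum(value_col)
        .write.mode("overwrite")
        .parquet(out_path)
    )
```

Something like `_, seconds = timed(spark_aggregate_and_write, spark, "my_catalog.my_schema.my_table", "customer_id", "amount", "s3://my-bucket/spark-results/")` gives a rough number to set next to the Daft run. It is tire-kicking, not a benchmark.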

And the runtime, you guessed it, a lot longer.

    >> 20s

Daft is almost 3 times faster. Not bad!

Now I’m sure all the angry Reddit geniuses and Data Engineering savants are warming up their keyboards to write me emails about this and that, you can all send the email to your mom instead. Go spread your genius elsewhere.

I’m not about the science here, I’m about trying things and kicking the tires in real life. It’s not about the queries I wrote, how I wrote them, this or that, it’s about trying out new things, roughly, to see what we can find out.

I have no interest in doing real benchmarks. Leave that for the birds.

What we proved.

Ever since I saw/heard that Daft added support for Unity Catalog on Databricks I’ve been sorta skeptical. More than half the time it’s marketing fluff that means nothing, always toy tutorials for milquetoast goobers who aren’t doing anything real.

I wanted to see if Daft + Unity Catalog (with Delta Lake) on Databricks was REAL.

I wanted to see what could really be done, and whether there is a real use case here for real Databricks and Unity Catalog users. Can we, with a reasonable amount of effort, save real money and compute resources?

- Daft (with minimal configuration) can easily read Unity Catalog Delta Lake tables.
- Daft was almost 3 times faster than Spark on this query (7 seconds vs 20 seconds).
- Daft can read large-ish tables no problem. It crunched 21+ million records in 7 seconds including file IO, so in theory it could scale up way, way past that.
- Daft could be deployed as part of your Databricks environment.
- Daft could save you real money by reducing workload runtimes.

What a GOAT.

These results are quite interesting. Daft seems to have the power to replace PySpark even for distributed computing. At least that's what the authors say. And they have made it easy to integrate with Ray via Daft Launcher.

Minor Error: Dask is mentioned instead of Daft in multiple places. Both are different libraries.

You could make the access even more generic by reading the URL and AccessToken from the current Databricks-session via dbutils
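A sketch of that suggestion, assuming the notebook-context accessors that are commonly used on Databricks (they are not a formally documented public API, so treat them as an assumption that may change between runtimes). The helper name is made up, and `dbutils` is passed in explicitly because it only exists inside a Databricks session.

```python
def workspace_url_and_token(dbutils):
    """Pull the workspace URL and an API token from the current notebook context."""
    ctx = dbutils.notebook.entry_point.getDbutils().notebook().getContext()
    return ctx.apiUrl().get(), ctx.apiToken().get()
```

In a notebook, `url, token = workspace_url_and_token(dbutils)` would give you the two values needed to connect Daft to Unity Catalog without pasting a personal token into the code.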