How Tech Debt, Databricks, and Spark UDFs ruined my weekend.

Lessons in tech debt and vendor funny stuff

It’s been a while since I’ve spent the weekend working … (forced to work that is … because of failures … Engineering failures … my failures). It’s good to be kept humble I suppose. Getting complacent is human nature because when all is well we lower our guard, kick our feet up, start laughing, and congratulate ourselves on how smart and wonderful we are.

Then it all breaks. On a Saturday.

I figured my tale of woe would serve as a good lesson for all of us Data Engineers on how important it is we keep on the offensive, deal with tech debt, and how we can stay humble.

Technical Debt

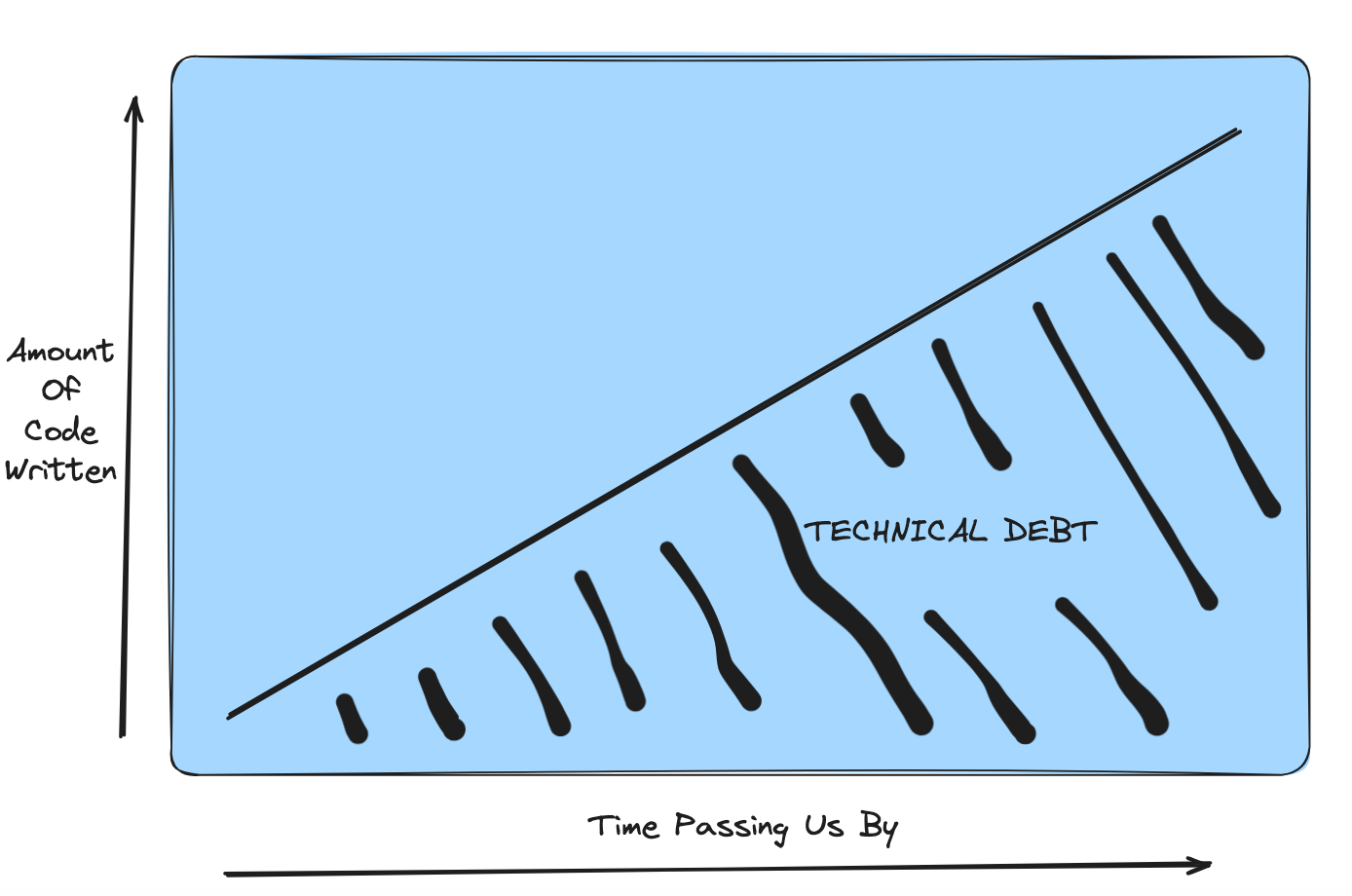

My story of woe starts with the most classic of all Engineering problems … tech debt. I’m not sure why technical debt doesn’t get much focus from the talking heads on the internet, but that isn’t that surprising, it’s sorta a boring topic.

It’s something we all live with, knowingly, we pet it, poopoo it, cuddle it, we simply keep it around.

But what is technical debt? Let’s ask the old AI.

Technical debt in software engineering refers to the concept of incurring future costs due to taking shortcuts or making suboptimal decisions during the development process to achieve short-term gains. It's analogous to financial debt, where borrowing money provides immediate resources but requires repayment with interest over time. In software development, this "debt" can manifest in various ways …

Pretty much agree with that. Technical debt can exist in code and outside of code. It’s a thing with a life of its own, an amorphous blob.

When most of us think of technical debt we think of “bad code,” and this is true to an extent, but not all-encompassing. On the average Data Platform, there are lots of technical debts of various kinds.

Bad or Complex Code

Old Architecture

Vendor Features and Platforms

Data Quality

Data Monitoring and Alerts

Poor CI/CD and DevOps

These things combined can build up over time and create “complex” technical debt that is more than just … “Hey, this method or function is leaky and dirty.”

We are talking REAL technical debt that involves vendor platforms, tooling, etc.

But technical debt isn’t that simple. There are so many other factors that come into play and cause technical debt to build up.

Size of the company

Culture of the company

How fast the technical team is expected to move.

The truth is we can’t have our cake and eat it too. There are tradeoffs we make every single day, and that is part of being Engineers. We consider what lies ahead and behind, given our tasks, we make decisions.

We accept technical debt in some areas and reject it in others.

Ok, let me spin you a tale of reality, the reality of working with limited resources, where you have to move fast and make numerous tradeoffs on what can and cannot be worked on. Where you have no DevOps Team, Platform Team, or Backend Team … you … you are everything.

Let me paint a picture for you.

It all started at 4:45 AM on a Saturday morning. Of course. Slack Alert. Pipeline failures.

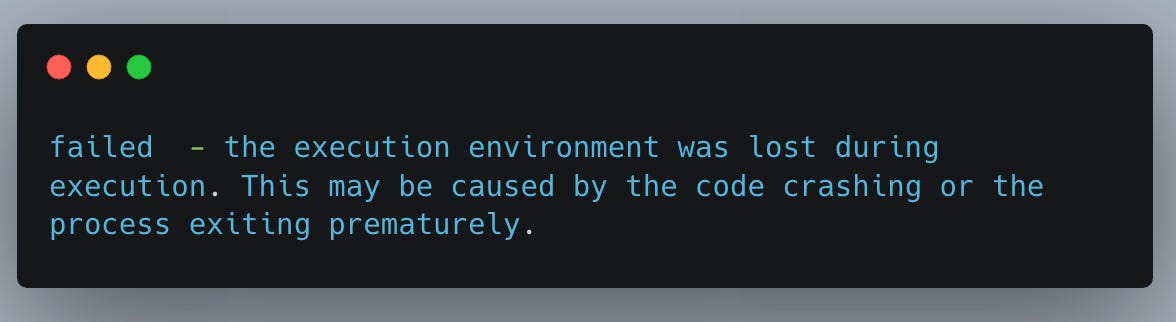

This alert was sent from Airflow for a Databricks Pipeline. The following Spark error appeared in the logs.

Of course, very cryptic. This particular pipeline had been running for years, and it had recently, say within the last few months, been migrated from a Standard Databricks account to Unity Catalog.

Anyone who’s worked with Spark and sees this kind of error thinks OOM and Memory issues right away. The Driver Node obviously going poof for some reason.

Suffice it to say I’ve been around Spark, and tricky pipeline errors, a long time. With it being a Saturday and having better things to do, I pretty much threw the kitchen sink at the problem, just trying to get the pipeline to run.

For context, we are talking a few hundred billion records.

So far ...

increasing cluster size and driver size doesn't make a difference

tried changing the DBR runtime, but no good

tried persisting the DF immediately prior to CSV write.

it doesn't seem to be OOM on the surface, plenty of RAM on the driver (758GB)

looked at data volumes, seem to be stable no big spikes of data recently

Clearly, something funny was going on: the pipeline had been running fine and out of the blue decided to stop for no reason. The fact that doubling the size of the resources made no difference was also very troubling.

Adding some context

Let's take a break from this story of suffering and woe to give you some much-needed context, and take a minute to talk about tech debt.

This story starts about 3 years ago. At that time the Data Platform in question was on its literal last legs.

AWS EMR for Spark + some EC2

AWS Data Pipeline for orchestration

AWS S3 with Parquets for Data Lake.

It's a complex machine-learning pipeline. The code base was unusable, no tests, poorly written code is an understatement, excessive use of UDFs and Loops, a mix of Python and PySpark, and to top it off, no Development Environment.

And that is putting it nicely. Daily errors were the norm and it was taking 18+ hours to run the pipelines end to end.

Classic Technical Debt

It was a clear case of technical debt not being dealt with over many years, at many levels.

Code-level tech debt

Design (problem/business) level tech debt

Tooling tech debt

The combination of these three can lead to serious problems that are hard to overcome without herculean effort.

So that's what happened, a year+ long project to migrate to the new stack.

Databricks to replace EMR

Delta Lake to replace S3 Data Lake

AWS MWAA to replace Data Pipeline

Refactor all code

Focus on CI/CD and DevOps, along with testing and Development.

How do you deal with such large amounts of technical debt? At a certain point, after waiting too long, the combination of code and tooling debt typically makes a total migration the only viable option.

Back to the story.

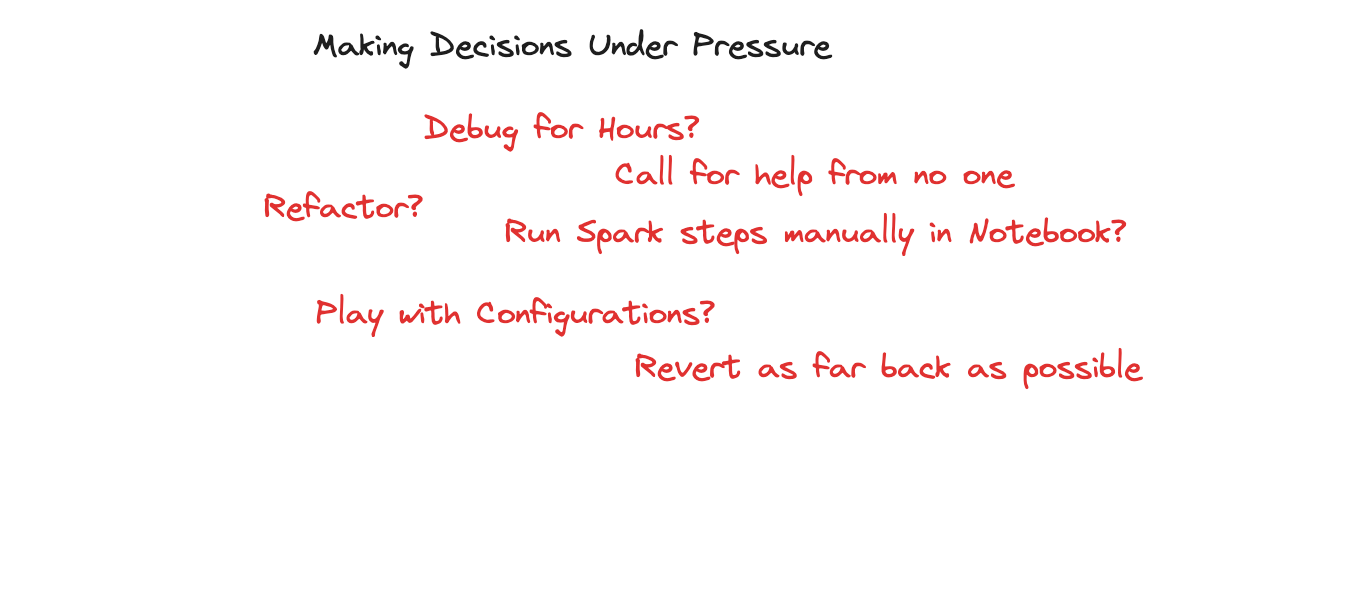

Anyways, back to the problem at hand. It’s a Saturday and the pipeline has been broken since 4:45 AM and it’s about 11 AM with no end in sight.

But the story behind the story is that this “section” of the pipeline had never been fully rid of all technical debt. During the 1.5-year-long migration process, almost all the code had been rewritten and brought into this century, as well as obviously made to run on the new infrastructure. All, except this bit.

For reasons now buried in the past, this bit of code now failing hadn’t been deemed important enough to take ALL the way over the finish line.

It was a known problem that, because of other priorities, had simply been seen as an acceptable risk. But that kind of risk keeps rising over time, and eventually the pot boils over.

After playing around with DBR versions and downgrading, the same error was appearing but now referring to UDFs. This code was full of them (well over 25), unnecessary ones, but full of them. The code had never been refactored.

It was clear that something had happened that was out of our control. It’s a well-known problem that classic UDFs don’t play well with Unity Catalog. Something had magically changed on the Databricks side, probably overnight, and was now adding the final straw to the technical debt we hadn’t dealt with.

In the heat of the moment, I almost went down the path of refactoring all the UDFs into normal PySpark functions. Although that might be the long-term fix, it wasn’t the solution for the moment because …

such changes need rigorous testing

doing things in the heat of the moment is a bad idea

going down rabbit holes when you need a fix now is a bad idea

Getting lucky, being careful with vendors.

Relying 100% on a particular vendor, especially anything fairly new, can be a foot gun or a ticking time bomb. This is especially true when you have parts of a codebase that are overwhelmed with technical debt.

No matter who or what your cloud provider or SaaS product is, even if you are in love with them, you still need to hold them at arm’s length and design your codebase in such a way it doesn’t have a death embrace with any particular “thing.”

In the case of my broken pipeline, I was getting desperate to move along with my weekend plans and stop the bleeding, not to mention the broken pipeline had customer impact.

I got lucky.

We had recently gone through a small migration from a Databricks Standard account to Unity Catalog, and with some stroke of luck and a little distrust … we had not torn down the Standard Databricks account yet.

Any Data Engineer who’s been around the block a little knows not to wave the victory flag too soon. Better safe than sorry. It was my weekend’s saving grace.

I moved the pipeline back to the Standard Databricks Account, ran it, and it ran without a hitch.
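That fallback was manual, and lucky. If you wanted to make it deliberate, a minimal sketch of the idea might look like the code below. Everything in it is hypothetical: the target names, the `submit` callable, and `run_with_fallback` itself are illustrative placeholders, not Databricks or Airflow APIs.

```python
from typing import Callable, Iterable, List, Tuple


class AllTargetsFailed(RuntimeError):
    """Raised when the job failed on every target that was tried."""


def run_with_fallback(
    targets: Iterable[str],
    submit: Callable[[str], str],
) -> Tuple[str, str]:
    """Try each target in order; return (target, result) for the first success.

    `targets` are hypothetical environment names, primary first.
    `submit` is a hypothetical callable that runs the job on one target
    and raises an exception on failure.
    """
    errors: List[str] = []
    for target in targets:
        try:
            return target, submit(target)
        except Exception as exc:  # deliberately broad: any failure triggers the fallback
            errors.append(f"{target}: {exc}")
    raise AllTargetsFailed("; ".join(errors))


# Stand-in for a real job submission, here only to make the sketch runnable.
def fake_submit(target: str) -> str:
    if target == "unity-catalog":
        raise RuntimeError("driver died")
    return f"ok on {target}"


used, result = run_with_fallback(["unity-catalog", "standard"], fake_submit)
print(used, result)
```

In a real setup this decision would more likely live in the orchestrator, and it only works if the old environment still exists and can still run the job, which in my case was luck rather than planning.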

Lessons Learned

There are a number of lessons to learn during times like this. Here are some of them, in no particular order.

No pipeline is immune from failure no matter how long it has “been running fine.”

You can’t entirely trust any one vendor never to “get you.”

You need to have some sort of Disaster Recovery plan in case of not only complete vendor failure, but simply things changing and needing a solution NOW, not 48 hours later.

You and your tech debt are your own worst enemy

Tech debt will catch up with you eventually.

There are more types of tech debt than just “bad code.”

The amount of tech debt should stay level or always be declining, not going up.

There are problems with moving fast, eventually that pied piper will come calling for you.

When solving a stressful problem, resist the urge to get complicated, KISS will end up fixing it most times.

What it really comes down to is that getting caught by tech debt is going to happen, and it got me, and it will always happen when you don’t want it to.

We take risks, we decide what is important to work on and what isn’t, we listen to the business, we solve problems, and keep things running. But, we have a responsibility as Engineers to understand the dark corners and edges that we have decided to let slide.

I got lucky with a leftover account from a migration that turned out to be a turnkey answer to my problem. I did spend hours trying to debug the un-debuggable, my own fault.

It’s hard in the moment of pressure to step back and think about all the options, even if you know you could probably “code your way out of it,” and most likely cause another set of problems while doing that.
