Introduction to MLflow

Machine Learning Workflows

For those of us who’ve been unlucky enough to find ourselves working with Machine Learning as Data Engineers lo these many years, one thing is certain: it’s no surprise to us that most ML projects fail.

Data Science has always been the Wild West of data everywhere I’ve worked, and whether the team was a dozen people or one person didn’t seem to make much difference. It’s a difficult space to operate in, with little to no stability or standards.

No two Machine Learning projects are the same, even inside a single organization. Across different organizations, ML is as varied and colorful as a middle school dance.

MLflow is here to change all that.

You should check out Prefect, the sponsor of the newsletter this week! Prefect is a workflow orchestration tool that gives you observability across all of your data pipelines. Deploy your Python code in minutes with Prefect Cloud.

Machine Learning Problems.

Before we dive into MLflow, let’s talk quickly about ML in general and some of the most common tasks and workflows that exist across almost all Data Science and Engineering teams working in the Machine Learning space.

Here are some of the major processes that you will find across most ML pipeline work, most of which are, or should be, built by Data Engineers so they are reproducible and automated.

Building, storing, and managing features.

Training models

Inference (prediction) on models

Managing models

Running and managing experiments

Ensuring reproducibility and automation.

That is the high-level view; obviously a lot of detail is hidden away in there. Truth be told, most of the problems that you will find on Data Science and ML projects, the reasons why things don’t go as planned or work out, come down to a failure in one of the above steps.

These are problems that are solvable if a little rigor is applied. Data Science and Machine Learning, overall, severely lack structured processes for the ML lifecycle, a problem that grows with the number of …

models

people doing ML

Hence the need for a tool like MLflow. So, after all that, knowing that MLflow is the answer to all our problems and can lead us into the Promised Land of glory, let’s step forth.

MLflow at a high level.

What is MLflow?

“ML and GenAI made simple. Build better models and generative AI apps on a unified, end-to-end, open-source MLOps platform.”

More or less, MLflow is supposed to be an “all-in” tool. If you drink the Kool-Aid you need to chug it. It’s meant to take all of the above steps we talked about, plus more, and wrap them into a single tool.

It’s not hard to see, when reading the list of things that have to be done to have “good” ML pipelines and workflows, that building those features yourself, while not impossible, is unlikely to get you anywhere close to enough coverage.

It’s also important to note that managed MLflow is provided by Databricks.

MLflow and code.

I want to just reiterate something that may or may not be familiar to you depending on how much you interact with Machine Learning. Every Engineering and Data Science team is going to write different code when it comes to training, iterating, and predicting on model(s).

The more people doing the above, the more variations you will have. Take for example my crappy SparkML Pipeline from many years ago.

This is where MLflow steps into the breach. When you are writing “custom” code to iterate, test, and predict on Models, how do you track what you have done and what has happened, or what IS happening?

It’s almost impossible.

How and where do you store model objects?

How do you track and trace between model iterations?

Where does all the metadata go?

How do you collaborate and share workflows etc?

How do you predict on, and serve your models?

This is the single biggest argument for MLflow: standardize. By unifying the framework in which we do this work, we eliminate a whole series of problems and issues.

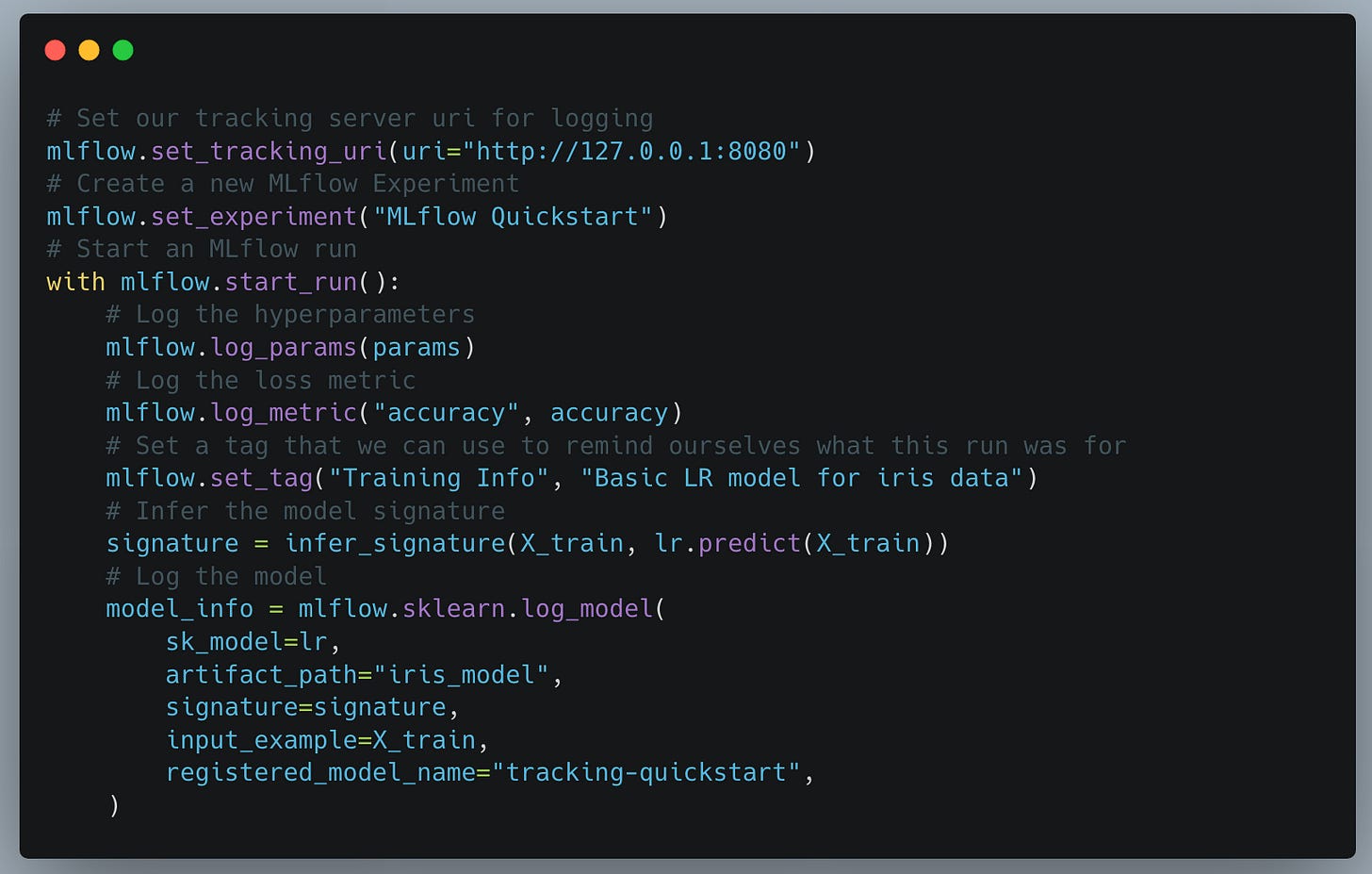

For example, here is an MLflow code snippet from their docs. Cleaner, obvious, standardized, tracking, etc.

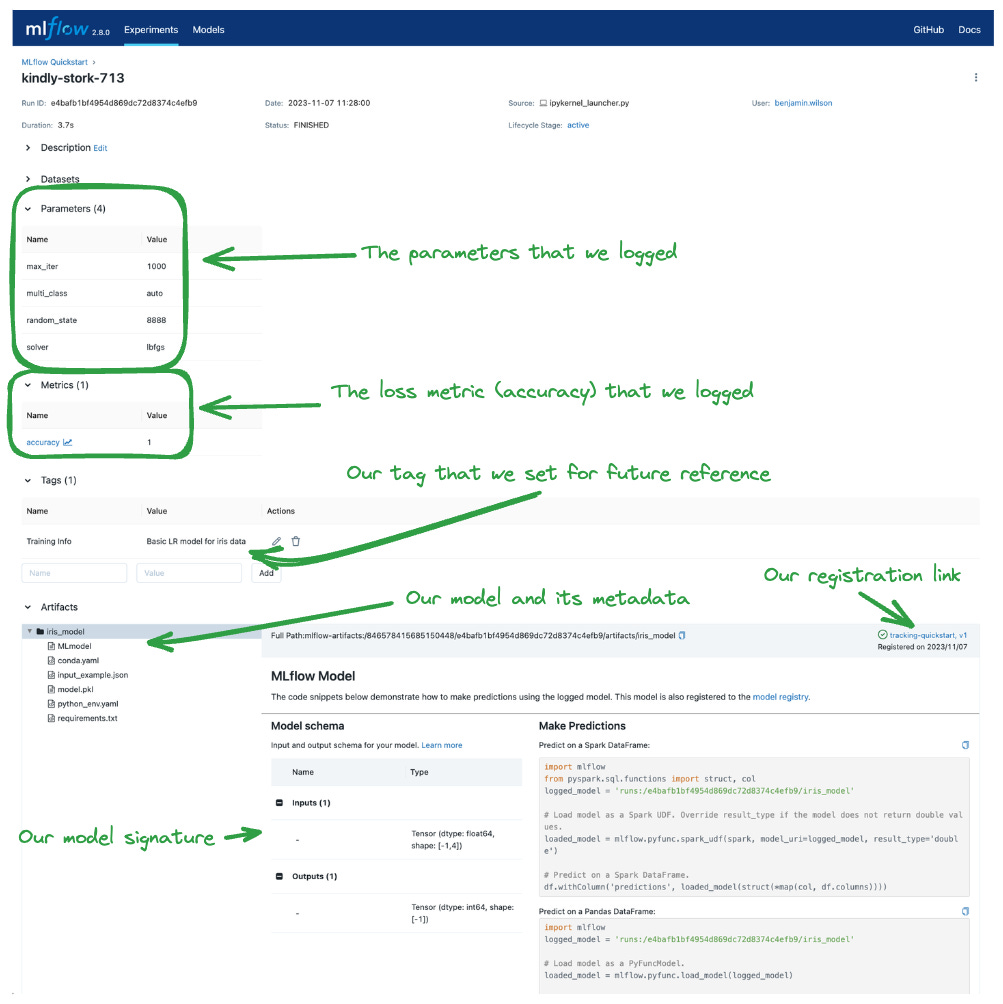

Of course, MLflow comes with a UI, which is a big draw in today’s world. You don’t always have to mess around on the command line. Imagine, all that tracking and work done with a few commands and available at your fingertips. Powerful.

This takes the guesswork out of building, deploying, and managing ML models and lifecycles.

Other MLflow concepts.

There are some other MLflow concepts that you should at least know about in the beginning. It’s more than just writing code; it’s about the whole end-to-end lifecycle.

Projects

“At the core, MLflow Projects are just a convention for organizing and describing your code to let other data scientists (or automated tools) run it. Each project is simply a directory of files, or a Git repository, containing your code.”

MLflow Tracking

“The MLflow Tracking is an API and UI for logging parameters, code versions, metrics, and output files when running your machine learning code and for later visualizing the results”

MLflow Models

“An MLflow Model is a standard format for packaging machine learning models that can be used in a variety of downstream tools—for example, real-time serving through a REST API or batch inference on Apache Spark”

MLflow Model Registry

“The MLflow Model Registry component is a centralized model store, set of APIs, and UI, to collaboratively manage the full lifecycle of an MLflow Model.”

MLflow Recipes

“MLflow Recipes (previously known as MLflow Pipelines) is a framework that enables data scientists to quickly develop high-quality models and deploy them to production.”

I think what becomes clear after diving into all that MLflow has to offer is that it’s more than just another ML toolset. To get the full benefits of MLflow, which are many, you need to standardize on using MLflow for as much of your ML work as possible.

The more you use it for each piece, the more centralized all your ML workflows, development, and processing become.

MLflow reduces the chance of project failure just by automating and standardizing what goes wrong most of the time … the small details.

Typically folk focus on training models and then predicting with them. They are obsessed with metrics and getting the most accurate model possible. In the process they forget the massive MLOps effort (80% of the work) required to do things correctly and keep things running well in production while iterating.

Do you really want 3 different Data Scientists doing whatever feels right? Saving models here and there, who knows about the metrics, trying to figure out how that model was built 3 months ago, and who is using it now?

Given all these capabilities, I wonder if we can use (abuse) MLflow for things in the data engineering space like data observability, e.g. defining dataset metrics and tracking them over time.