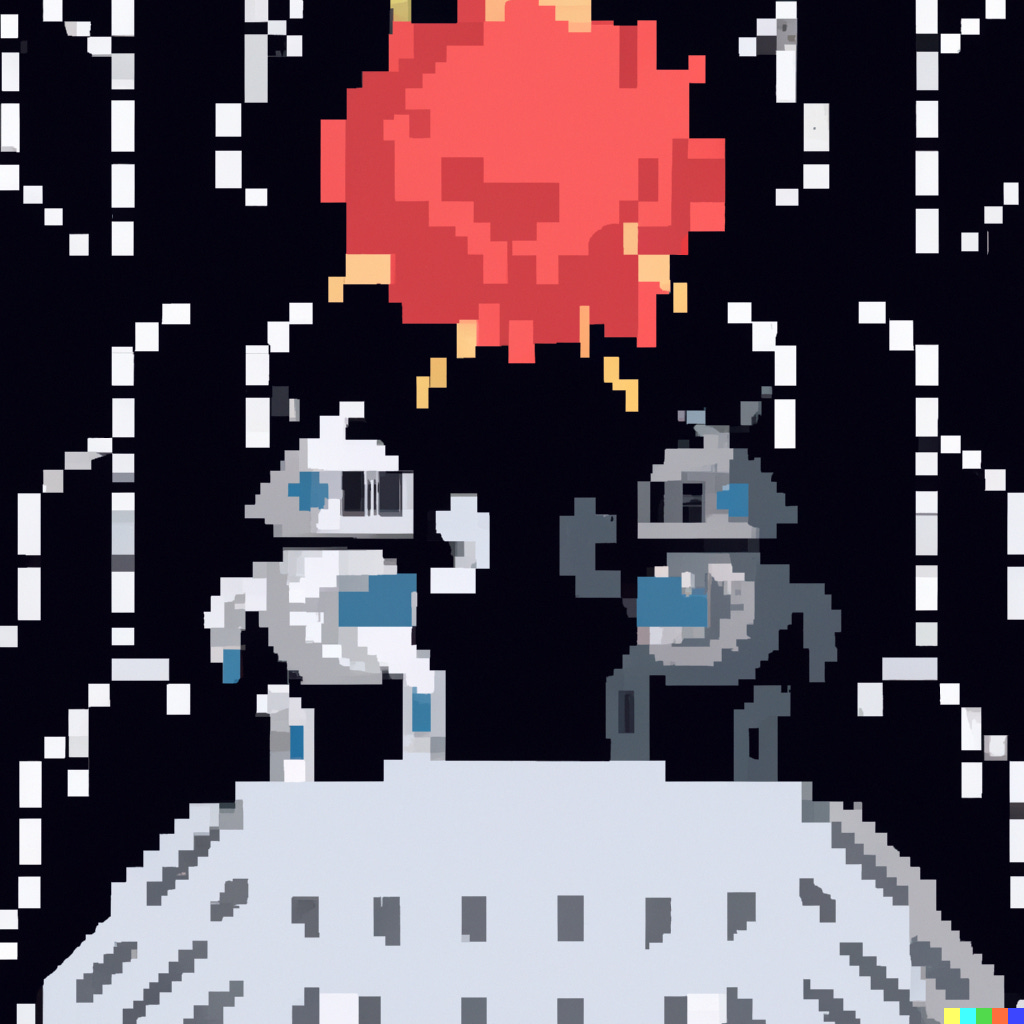

JSON → Parquet. Python vs. Rust.

We know who's going to win (or do we?).

All good developers love a battle. A fight to the death. We love our file formats. We love our languages. And even when we already know what the answer will be, it's still fun to find out.

JSON → Parquet may seem like a strange topic, but it was actually one of the first projects I worked on as a newly minted Data Engineer. Converting millions of …