LLMs Part 2 - Fine Tuning OpenLLaMA

For Data Engineers

I figured I should probably take this chance before AI takes my job and I end up like Oliver Twist working in some dirty factory filling bottles full of snake oil. What chance you ask?

The chance to fine-tune an LLM to write like me. (all code on GitHub)

Some months ago I did a Part 1 on LLMs called Demystifying the Large Language Models (LLMs). It was mostly to help Data Engineers who’ve not worked around Machine Learning (ML) much to at least get their feet wet and run an LLM locally on their machine. Take some of the scaries away.

I’ve personally been sorta reluctant to dive too deep into the AI and LLM humdrum. This might be because I’ve worked around ML for too many years not to see the dark side. ML has always been the Wild West, even after all these years.

A landscape littered with little to no standards.

Lacks most good Engineering best practices in many aspects.

Besides the Data Engineering portion … can be wildly boring.

Especially with LLM worlds being so young, new models coming up all the time, a zillion different packages being released that are half-baked… I know in my heart of hearts that trying to go a step deeper … say fine-tune an LLM … would be a heartache and headache.

Let’s Do It Anyway.

Well, I figured even if I didn’t want to do it for myself, I could at least find it in my Grinch’s heart to do it for you, my dear reader.

So, that’s what we are going to attempt to do. In Part 1, we were able to download and interact with the OpenLLaMA model on our laptop. Just to get a feel of things.

What we are going to do today is fine-tune OpenLLaMA … to be more like me … so it can write all my blogs for me while I gallivant off into the woods to live like the mountain man I want to be.

I want to do this (fine-tune an LLM) for a few reasons. Mostly to prove that LLMs can be a boon for Data Engineers … and increase job security.

I hope you will see that …

Just like most Machine Learning … LLMs are data-heavy.

Data munging and wrangling are a major part of the game.

DataOps, DevOps, and CI/CD are critical to reliable and repeatable LLM operations.

LLM workflow is mostly a test of Data Engineering skills.

The boring Data Science part can be left to birds to fiddle with.

You need GPUs to do much fiddling with LLMs.

What is Fine-Tuning?

Good question, I’m glad you asked. Fine-tuning is the process of taking a pre-trained model like OpenLLaMA (see the last article) and doing further training on a small, specific dataset relevant to some domain of interest.

It’s important to note the actual LLM fine-tuning (training) must take place on a GPU compute machine (probably with at least 24 GB of GPU memory).

The outline of what we are about to do.

Get the model staged.

Get data gathered.

Massage data into format.

Get config and model defaults from someone else.

Set up Cloud resource(s) (GPU) to train the model.

Deploy code and data to Cloud Resource(s).

Retrieve results.

Combine base model with fine-tuned adaptor(s).

Replace me with the LLM to write the rest of the blog.

This is a super high level of what is going to happen. Some things I will skim over, others I will not.

I am no expert but you can pick up on what the steps are by simply reading a few other blogs and articles on the topic of fine-tuning, regardless of what the model is.

One thing you will notice when perusing the interwebs for details on fine-tuning … is that most of the time, the little rats gloss over the “hard parts”. I will not.

I would say the actual writing of the code to fine-tune a model isn’t the hard part (speaking to a Data Engineering audience), you can find many examples in GitHub of folks doing that for many different LLM models.

The hard part is …

Gathering a dataset to fine-tune on.

Figuring out what format to put the data in.

Putting the data in that format.

Figuring out WHERE and HOW to run the compute for fine-tuning.

Finding somewhere to do it.

Getting the GPU resource prepped with tooling.

Knowing what dials to twist for best results (leave that to the Data Scientist)

Making some decisions upfront.

So, we are going to just pick HuggingFace up front for the Python packages to use for training, and for the dataset format. Why? Mostly because people are very familiar with them as a leader in the LLM space.

Since having data to fine-tune is the most basic task, let’s start there.

Understanding data gathering and formatting for fine-tuning LLMs.

First, let’s get a crash course on gathering and formatting data for fine-tuning LLMs.

Unfortunately, we can’t just throw a bunch of data in a pile, point some model at it, and click run. Hence Data Engineers have a bright future in the world of LLMs and AI.

The data must be …

gathered from some source(s) around a specific subject (the whole point of fine-tuning)

put into a format that makes sense to the LLM so it can be trained on it.

So what does that mean to a Data Engineer? Taking unstructured data and putting it into a semi-structured format.

Take for example the dataset wizard_vicuna_70k_unfiltered on Hugging Face. Below is a screenshot.

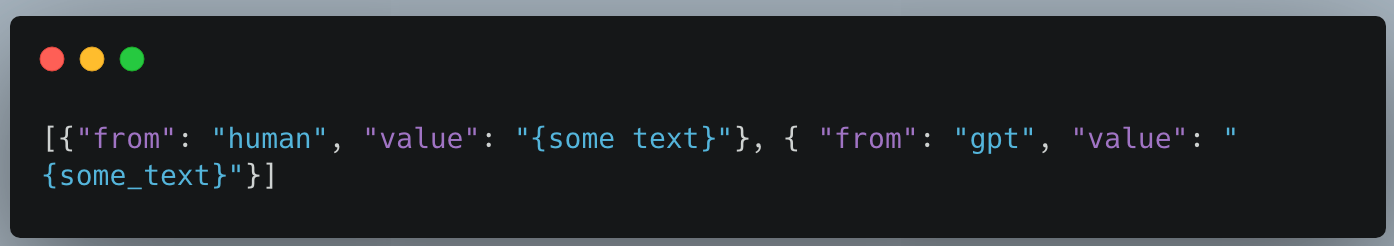

It is basically in the format of …

On the fly, during training, this semi-structured format will be again reformatted into a `string` needed by the model training, but, if we want to store the semi-structured text data in some useful format … we have to pick one.
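For a rough picture of what that means in practice, here is a minimal sketch. It assumes the conversation-style layout I recall for that dataset (a `conversations` list of turns, each with a `from` and a `value` field), so check the dataset card before trusting the field names. The record contents and the role labels in the prompt template are made up for illustration; every training script picks its own template.

```python
# One record in a conversation-style layout.
# Field names are an assumption; the values are invented for illustration.
record = {
    "id": "example-0",
    "conversations": [
        {"from": "human", "value": "What is a data pipeline?"},
        {"from": "gpt", "value": "A series of steps that move and transform data."},
    ],
}

# Hypothetical role labels; real training scripts each choose their own template.
ROLE_LABELS = {"human": "### Human", "gpt": "### Assistant"}


def to_training_text(rec: dict) -> str:
    """Flatten the semi-structured turns into the single string the trainer sees."""
    parts = [
        f"{ROLE_LABELS[turn['from']]}: {turn['value']}"
        for turn in rec["conversations"]
    ]
    return "\n".join(parts)


print(to_training_text(record))
```

The semi-structured record is what you store; the flattened string is what gets tokenized at training time.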

Real Life example of gathering and formatting data for fine-tuning an LLM.

Ok, so all that is good and well, but how about a real-life example? I said at the beginning I would like to fine-tune an LLM to write my blog posts for me since you won’t know the difference and then I can retire to the mountains to live off the land.

So what data do I need?

I need some of my blog posts … AND, I will need to massage them into something like the above format that the LLM can understand during tuning.

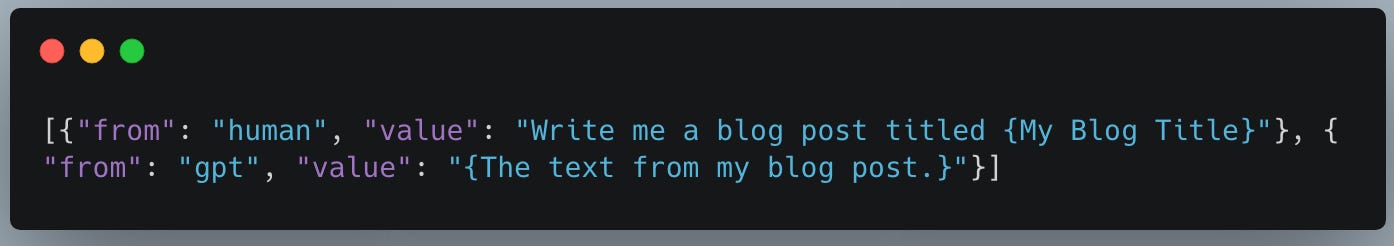

Here is what I’m going to do. I will attempt to export my blog posts from my WordPress website www.confessionsofadataguy.com, pull the Title and Text from each blog, and input them into the below format.

I’m sure the pre-trained model is already familiar enough with Data Engineering concepts. What I really want is for the fine-tuning of OpenLLaMA to pick up on my voice.

Munging my data together.

Here goes nothing. I’m a Data Engineer and I can well guess that this is going to be the most painful part of the process. Data always is.

Below is me exporting my blog posts from WordPress.

And then my head exploded. XML. That’s what the mighty WordPress gave me. Go figure.

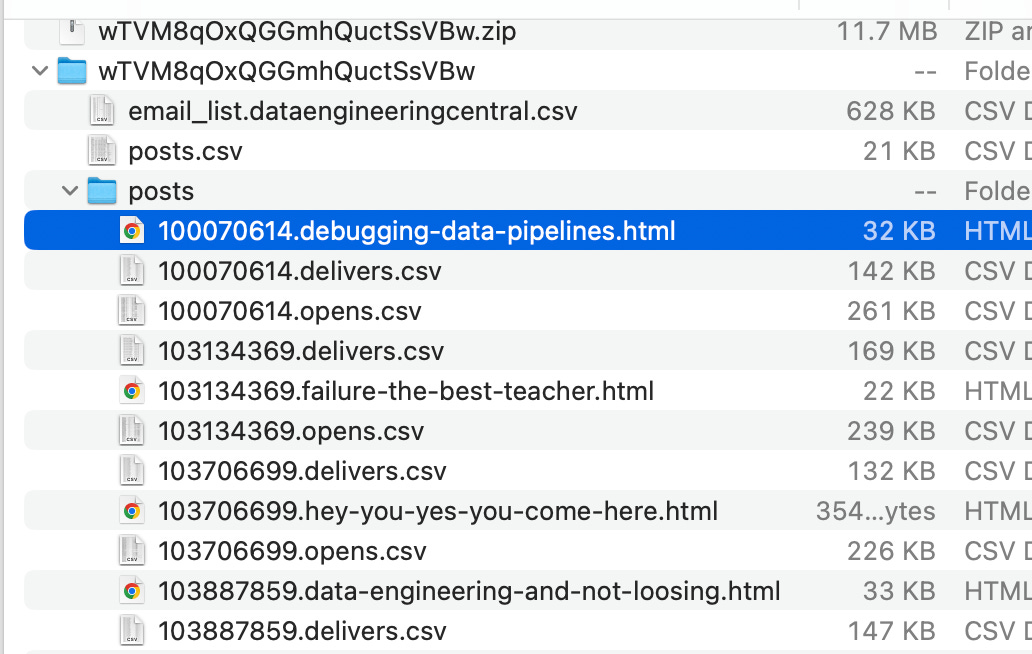

Bugger all. I really don’t feel like pulling out a bunch of crap from XML, time is money. Not cool. I have one other option. This Substack.

Well, the Substack export is a little more promising. The blogs are HTML files with the title as the filename. This is workable. Seen below are the downloaded HTML files of the content with the filename being the title. Just what I need.

Ok, so we can deal with this. We just need to iterate the files and pull the title and the contents with BeautifulSoup.

Of course, while my script is doing this work, it’s also massaging everything into the needed format we saw at first.

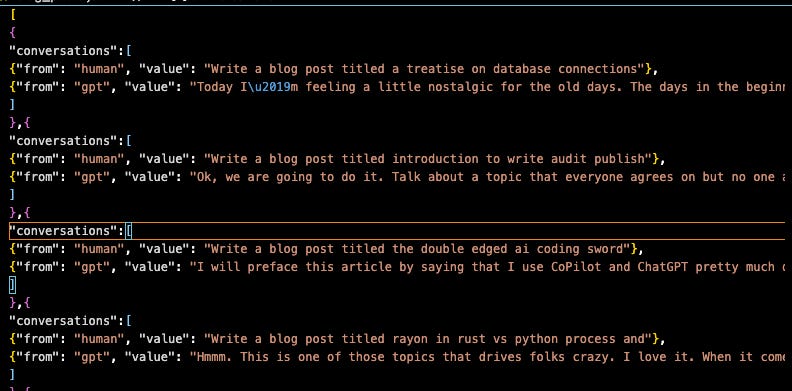

It looks like a mess of code because it is. It’s really just writing out a JSON file with the messages we reviewed before in the correct format that the HuggingFace datasets library is ready to work with. What a pain.
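If you just want the shape of that script without squinting at a screenshot, here is a stripped-down sketch, not my actual code. To keep it dependency-free it swaps BeautifulSoup for Python's built-in `html.parser`. The prompt wording ("Write a blog post titled: ..."), the function names, and the `from`/`value` record layout are all assumptions for illustration.

```python
import json
from html.parser import HTMLParser
from pathlib import Path


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the bodies of <script> and <style> tags."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def html_to_text(html: str) -> str:
    """Return the visible text of an HTML document as one space-joined string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def post_to_record(title: str, html: str) -> dict:
    """Build one training record: the title is the prompt, the post is the answer."""
    return {
        "conversations": [
            {"from": "human", "value": f"Write a blog post titled: {title}"},
            {"from": "gpt", "value": html_to_text(html)},
        ]
    }


def build_dataset(export_dir: str, out_path: str) -> int:
    """Convert every *.html file in export_dir to a record; return the count."""
    records = [
        post_to_record(path.stem, path.read_text(encoding="utf-8"))
        for path in sorted(Path(export_dir).glob("*.html"))
    ]
    Path(out_path).write_text(json.dumps(records, indent=2), encoding="utf-8")
    return len(records)
```

Usage would be something like `build_dataset("substack_export", "blog_dataset.json")`, where both paths are placeholders for wherever your export and output actually live.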

See what we got. Not perfect, but decent enough. We get a title and a bunch of content. (Below you can see the titles and content from the blog posts being pulled).

So we have 100 blog posts that we can extract. Let’s start with this, and get this data into the format we need. Which is below.

Seems to work ok, the text isn’t perfect, but neither am I, so who cares?

This is a screenshot of the file the above script writes out.

I then uploaded this JSON file to S3, so I could grab it later on my GPU machine for training.

The Other Hard Part (the code).

So, we’ve got our data sorta ready to go, or at least we’ve learned a lot about what it looks like to prepare a common dataset into some sort of coherent format for ingestion by an LLM.

So. On to the next hard part (maybe)?

This is where we do what any good script kiddie would do, and go looking at other examples of people fine-tuning OpenLLaMA models. Since this is such a popular thing, there are many, many examples on GitHub.

The GitHub repo I focused on to learn what it looks like to actually write code to load the data and the model and do the actual fine-tuning training is this one. Thank the Good Lord for the people that come before us. We all stand on the shoulders of others.

It’s hard to know what you don’t know, and a great way to learn is to emulate other codebases, read the general flow of what they are doing, and then adapt to your needs.

I’m going to link to GitHub for my code so you can inspect it, but the general flow seems to be this from all the online tutorials I read.

Set configurations and parameters for model and training

Where model sits

What knobs to turn (just trust others on this)

Training data

etc.

Load up the QLoRA training

Save adaptor.

As an FYI, there are A LOT of decisions and important concepts to learn in this code. Things like QLoRA and why you would choose it (to reduce memory consumption). But, we will save that for another blog post.

Anyway, we now have the data and the code we need to fine-tune. On to the next task.
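To put a rough number on that memory claim, here is some back-of-the-envelope arithmetic. QLoRA keeps the base model frozen in 4-bit form and trains only small adapter weights on top. The sketch below counts the base weights only, and the 7B parameter count is an assumption for illustration; gradients, optimizer state for the adapter, and activations all come on top of this.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Memory needed for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


N_PARAMS = 7e9  # assumption: a 7-billion-parameter model

fp16_gb = weight_memory_gb(N_PARAMS, 16)  # half-precision weights
int4_gb = weight_memory_gb(N_PARAMS, 4)   # 4-bit quantized weights

print(f"16-bit weights: {fp16_gb:.1f} GB")
print(f" 4-bit weights: {int4_gb:.1f} GB")
```

Under those assumptions the weights drop from about 14 GB to about 3.5 GB, which is the difference between not fitting and fitting comfortably on a single rented GPU.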

Getting GPU Hardware to fine-tune.

The next tricky part was finding someplace where I could rent some GPU power at a reasonable cost. I first tried my old trusty Linode, but I had to put in a support ticket to get approved to spin up GPU nodes …? Strange, I’ve been using them for years.

I also ran across vast.ai where you can bid on and rent GPUs for reasonable money … say like $0.50 per hour. That’s cheap enough for a boy like me who grew up where I grew up.

I have no doubt my first few tries will probably fail. I started out running as much of the code locally on my laptop to try to debug all issues.

Mostly around the munging of the data and loading up the data into the correct format required for training by the Hugging Face datasets library.

Again, it’s very clear to me the data munging part is one of the most difficult. There are very few clear instructions online, and many competing ideas and solutions.

Your data needs to be exact, exactly what the training code needs and expects to load and send to the model … otherwise it simply won’t work.
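One cheap way to catch this before you are paying by the hour is to validate every record locally. Below is a minimal sketch, assuming the conversation-style layout of a `conversations` list whose turns each have a `from` field ("human" or "gpt") and a non-empty string `value`. The function name and the exact rules are hypothetical; adjust them to whatever your training code really expects.

```python
def validate_record(rec: dict) -> list:
    """Return a list of problems; an empty list means the record looks usable."""
    turns = rec.get("conversations")
    if not isinstance(turns, list) or not turns:
        return ["missing or empty 'conversations' list"]

    problems = []
    for i, turn in enumerate(turns):
        if not isinstance(turn, dict):
            problems.append(f"turn {i}: not an object")
            continue
        if turn.get("from") not in ("human", "gpt"):
            problems.append(f"turn {i}: unexpected 'from' value {turn.get('from')!r}")
        value = turn.get("value")
        if not isinstance(value, str) or not value.strip():
            problems.append(f"turn {i}: empty or non-string 'value'")
    return problems


good = {"conversations": [{"from": "human", "value": "Hi"}, {"from": "gpt", "value": "Hello"}]}
bad = {"conversations": [{"from": "robot", "value": ""}]}

print(validate_record(good))
print(validate_record(bad))
```

Run something like this over the whole JSON file on your laptop and refuse to upload anything that returns a non-empty list.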

Oh, and we can’t forget the tooling part.

Another “problem” if you will, is making sure you can quickly stage all your code, model, data, and install all tooling onto whatever GPU machine you get. I mean you’re paying by the hour, so you don’t want to dillydally.

Either containerize your entire setup into some Docker image.

Or, put your code in a repo and your data in S3 (or be ready to scp your data and files over), and be able to quickly install the tools needed to clone and copy everything onto the GPU machine (e.g. git and the AWS CLI).

I ended up renting this machine by the hour.

I took the hard route and simply used a blank Ubuntu container for this machine. So I had to of course do a few things …

install a bunch of tools (pip install, few apt-get installs)

get my code on the machine (easy)

get my data on the machine (easy with aws cli)

(BTW, you need sudo apt-get install git-lfs on a Linux machine to get all the big model files)
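Since the meter is running, a tiny preflight check can tell you right away which tools are still missing on a fresh box. This is a minimal sketch; the tool list is my assumption of what a setup like this needs, so edit it to match yours.

```python
import shutil

# Assumed tool list for this kind of setup; edit to match your own needs.
REQUIRED_TOOLS = ("git", "git-lfs", "aws", "python3")


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Install these before training:", ", ".join(missing))
    else:
        print("All required tools found.")
```

The `which` parameter is only there so the check is easy to test without touching the real machine.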

After that it was simple enough to run my training script, and it appeared to be working fine.

That is, until I checked on it later. It had run out of disk space.

Added more, and then back at it. Train, train, train.
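In hindsight, a check like the one below at the top of the training script would have saved me a wasted run. It is a minimal sketch using the standard library; the 100 GB threshold is a made-up number, since how much you need depends on the model files, checkpoints, and data you are working with.

```python
import shutil


def free_gb(path: str = ".") -> float:
    """Free disk space on the filesystem holding `path`, in GB (1e9 bytes)."""
    return shutil.disk_usage(path).free / 1e9


def enough_disk(required_gb: float, path: str = ".") -> bool:
    """True if at least `required_gb` GB are free at `path`."""
    return free_gb(path) >= required_gb


if __name__ == "__main__":
    REQUIRED_GB = 100  # hypothetical threshold; size it to your model and checkpoints
    if not enough_disk(REQUIRED_GB):
        raise SystemExit(
            f"Only {free_gb():.1f} GB free, need {REQUIRED_GB} GB. Add disk first."
        )
    print("Disk space looks fine, start training.")
```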

Then whammy, training done, and adapter saved.

Not bad! Of course, I saved the adapters into S3 for later use.

Of course, this was just my first time learning to train an LLM, and in the real world, we would harden the process a lot more and probably use a lot more data.

How do we summarize what we learned from a Data Engineering perspective?

Do we care if it was right? Not really. We got the general steps and flow to work, as well as the adapter to output. We learned a lot. That’s the idea.

Fine-tuning LLMs is mostly a class in …

finding data

massaging data into formats

installing correct tooling (python packages etc.)

getting code correct for training

finding a GPU instance to train on

I can tell from simply walking through this basic exercise, even if it’s half wrong, that just like normal ML workloads I’ve worked with for years … LLM stuff is again a lot of Data Engineering.

Gathering and formatting data.

Setting up environments with tooling.

Automating a lot of tasks and workflows so it can be repeatable.

This new age of “AI” and LLMs is going to favor Data Engineers.
