Medallion Architecture. Truth or Fiction?

let's rattle the cage

“What is truth?” Famous words said by an infamous man. When I have a hot take the first thing I do is to pray that the data gods will have mercy, and that I will not regret it, and if I do, may I be living in the woods when they come looking for me.

I've got an axe to grind today. You, dear reader, come along with me, and together we will pull down false idols from the high places. Today, the so-called Medallion Architecture is in the crosshairs.

Before we get into the weeds, whacking and cutting, let me set the record straight.

Data Modeling

Data modeling and architecture find their value, worth, and beauty in the eye of the beholder. Developers are amazing people, able to spin a web of code to accomplish literally anything.

All that to say, you can run a successful Lake House platform with or without Medallion architecture.

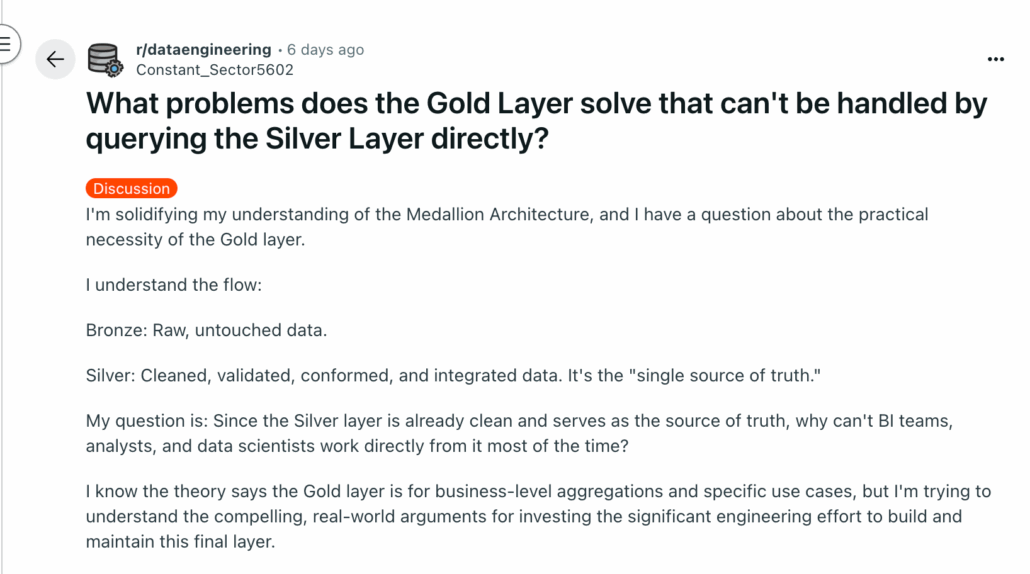

But a recent r/dataengineering post brought to bear, before my angry eyes, the utter barren wasteland such marketing speak can create in a fresh field of engineers who don't know any better.

We live in an age where the line between honest, bona fide technical reality and marketing and sales engineering content is blurred. So much so that the numb masses drink from the fountain of poison and become victims of every snake oil hawked to their unsuspecting souls.

Medallion architecture is bordering on the edge of pure malarkey, and proof I will deliver to you.

What is Medallion Architecture and from whence does it come?

The Medallion Architecture is a data design pattern popularized by Databricks. It organizes data into multiple layers (or “medallions”) to improve data quality, performance, and usability as it moves through the platform.

The layers are typically:

Bronze (Raw Layer)

Stores raw, unprocessed data.

Ingested directly from source systems (databases, logs, IoT streams, APIs, etc.).

Often includes duplicates, schema drift, and poor quality.

Purpose: Immutable record of truth – never delete, only append.

Silver (Cleansed Layer)

Data is cleaned, filtered, de-duplicated, and enriched.

Joins from multiple bronze sources may occur.

Business logic starts here (e.g., data quality rules, standard formats).

Purpose: Curated, analytics-ready tables.

Gold (Business Layer)

Data is aggregated and optimized for analytics, BI dashboards, and ML.

Domain-specific data marts (e.g., sales KPIs, fraud detection aggregates).

Purpose: High-performance, business-consumable datasets.
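
For what it's worth, here is roughly what those three layers amount to once you strip away the metal names. This is a minimal sketch in plain Python, not anything Databricks publishes; the sample orders, the field names, and the `to_silver` / `to_gold` helpers are all made up for illustration.

```python
from collections import defaultdict

# Bronze: raw events appended exactly as received (duplicates and junk included).
bronze = [
    {"order_id": "1", "customer": " Alice ", "amount": "10.50"},
    {"order_id": "1", "customer": " Alice ", "amount": "10.50"},  # duplicate delivery
    {"order_id": "2", "customer": "BOB", "amount": "4.00"},
    {"order_id": "3", "customer": "alice", "amount": "not-a-number"},  # bad record
]


def to_silver(rows):
    """Clean, standardize, and de-duplicate bronze rows."""
    seen, cleaned = set(), []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline would likely quarantine this row instead
        order_id = int(row["order_id"])
        if order_id in seen:
            continue  # already loaded this order, skip the duplicate
        seen.add(order_id)
        cleaned.append({
            "order_id": order_id,
            "customer": row["customer"].strip().lower(),
            "amount": amount,
        })
    return cleaned


def to_gold(rows):
    """Aggregate silver rows into a business-facing summary (revenue per customer)."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["customer"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
print(silver)
print(gold)
```

Note that bronze is never modified: silver and gold are derived from it, which is the whole "append-only record of truth" idea.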

Origins of the Medallion Architecture

Databricks coined the term in the context of Lakehouse architecture (Delta Lake + Spark).

It evolved from traditional data warehouse layering (staging → cleansed → presentation).

The medallion metaphor (bronze → silver → gold) emphasizes progressive refinement and the value add at each stage.

While originally tied to Delta Lake, the concept is widely adopted across modern data LakeHouses (Iceberg, Hudi, etc.).

Visual of Medallion Architecture

Here’s a simple conceptual diagram:

┌────────────────────────────┐
│            GOLD            │
│  - Aggregated tables       │
│  - KPIs, BI, ML features   │
└─────────────▲──────────────┘
              │
┌─────────────┴──────────────┐
│           SILVER           │
│  - Cleansed data           │
│  - Standardized formats    │
│  - Joined across sources   │
└─────────────▲──────────────┘
              │
┌─────────────┴──────────────┐
│           BRONZE           │
│  - Raw ingested data       │
│  - Unvalidated, append-only│
└────────────────────────────┘

What else do you notice about the Medallion Architecture?

You know what else strikes me about Medallion Architecture, at least compared to other popular data modeling approaches like …

Kimball

Inmon

Star and Snowflake schemas

There is never a discussion about the more technical details of the bronze, silver, and gold layers other than … “This is raw, this is transformed and cleaned.”

The fine details are essentially just ignored; arguments are made for its existence, not its implementation.

Let’s get grumpy.

Call me an old curmudgeon, whatever. It’s very possible I’m an old dog that simply can’t learn new tricks; if that’s the case, bury me in the prairie. But one must look to the past and examine what has been accepted and used for decades, things that have worked in Production since before you were born. This must be balanced with a healthy dose of … new things CAN be good and an improvement.

I shall propose to you that this so-called “Medallion Architecture” is NOTHING more than Marketing Speak.

The earliest public mention I can find from Databricks is June 2, 2020, in a blog post on monitoring audit logs that references “our medallion architecture.”

Shortly after, other Databricks posts in June and September 2020 also used the term and diagrammed the Bronze/Silver/Gold flow, confirming the concept was already in use by then.

So: first public reference → June 2, 2020 (Databricks blog).

Let’s be clear about something: Databricks says “OUR medallion architecture.” Keyword: our.

Drawing Lines.

That’s what it really comes down to from my perspective. Databricks is the GOAT at building new things, and they have also excelled at marketing and communication.

When Databricks brought forth the Lake House, they WANTED an entirely new and catchy set of ideals and concepts to go along with it. Something to sink the hooks into their future Lake House users. What better than to rename the same, already accepted Data Warehouse ETL patterns as Medallion Architecture?

Can you blame them? Maybe, maybe not. But it has also caused a whole host of downstream problems, with people confusing things and getting to the point where NO ONE even knows what a proper Medallion Architecture is.

All you have to do is read this Reddit post and comments.

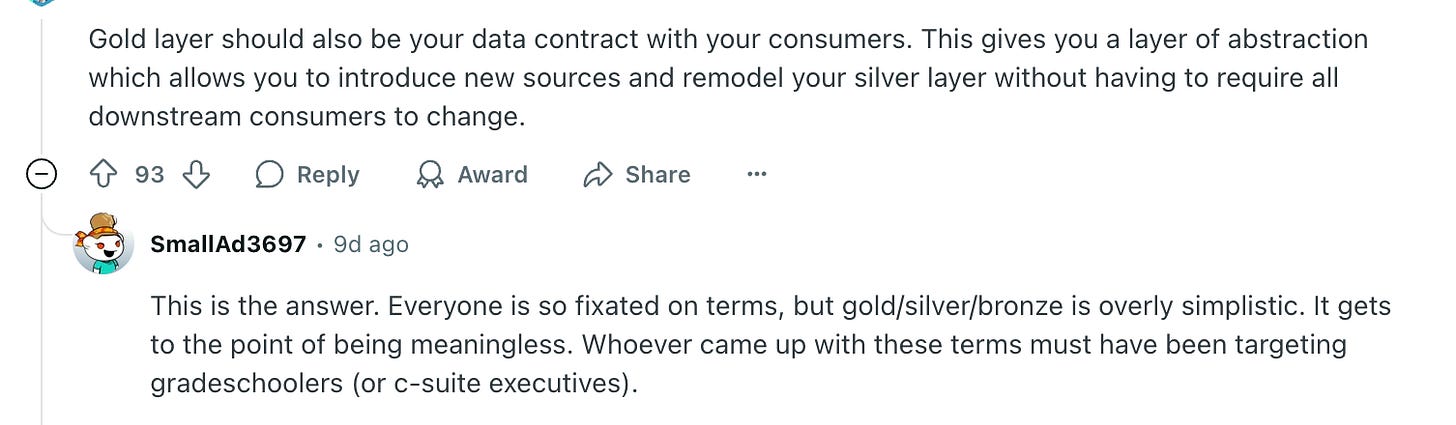

People are conflating Data Contracts with Medallion Architecture. Yes. Data Contracts.

And it continues, more confusion on what to do with this Medallion Architecture.

I mean, the confusion is obvious. People still read Kimball’s Data Warehouse Toolkit, and for the most part, model and talk about data using these tried-and-true principles.

The minute you decide to adopt the half-baked concepts from a SaaS company, yeah, things are going to get weird.

It’s all about Data Modeling.

The long and short of it is that medallion architecture IS an approach to Data Modeling. In this respect, maybe I should just leave Databricks alone, since Data Modeling is in the eye of the beholder. My problem with the whole thing is that we have lived with the Staging / Fact / Dimension / Data Mart nomenclature since the beginning of the Data Warehouse era.

It was, and is … really a TWO step program, sometimes THREE when called for, but 90% of the time a two-stepper.

RAW -> FACT/DIM

You load raw data … you transform that raw data into its final state and then write to fact or dimension.

Done.

Oh, you want a Gold layer? It’s called a Data Mart, and it stores specific analytics used to feed Dashboards, etc. When it comes to the classic type of Data Modeling, there is all sorts of talk about …

primary and foreign keys

data normalization and denormalization

the grain of the data

etc.
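
To put some code behind that vocabulary, here is a small sketch of the two-step RAW -> FACT/DIM load using Python's built-in `sqlite3` module. SQLite is only standing in for whatever warehouse you actually run, and the table names, columns, and sample rows are assumptions invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces FKs when asked
conn.executescript("""
CREATE TABLE dim_customer (
    customer_key  INTEGER PRIMARY KEY,   -- surrogate key
    customer_id   TEXT NOT NULL UNIQUE,  -- natural key from the source system
    customer_name TEXT NOT NULL
);
-- Grain: one row per order line.
CREATE TABLE fact_order_line (
    order_id     INTEGER NOT NULL,
    line_number  INTEGER NOT NULL,
    customer_key INTEGER NOT NULL REFERENCES dim_customer (customer_key),
    amount       REAL NOT NULL,
    PRIMARY KEY (order_id, line_number)
);
""")

# Step 1: raw rows as they might arrive from a source system (denormalized).
raw = [
    ("C-1", "Alice", 100, 1, 10.5),
    ("C-1", "Alice", 100, 2, 4.0),
    ("C-2", "Bob", 101, 1, 7.25),
]

# Step 2: transform into dimension and fact rows.
for customer_id, name, order_id, line_number, amount in raw:
    conn.execute(
        "INSERT OR IGNORE INTO dim_customer (customer_id, customer_name) VALUES (?, ?)",
        (customer_id, name),
    )
    (customer_key,) = conn.execute(
        "SELECT customer_key FROM dim_customer WHERE customer_id = ?",
        (customer_id,),
    ).fetchone()
    conn.execute(
        "INSERT INTO fact_order_line VALUES (?, ?, ?, ?)",
        (order_id, line_number, customer_key, amount),
    )

dim_count = conn.execute("SELECT COUNT(*) FROM dim_customer").fetchone()[0]
fact_count = conn.execute("SELECT COUNT(*) FROM fact_order_line").fetchone()[0]

# A fact row pointing at a customer that does not exist is rejected by the FK.
try:
    conn.execute("INSERT INTO fact_order_line VALUES (?, ?, ?, ?)", (102, 1, 999, 1.0))
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

print(dim_count, fact_count, orphan_rejected)
```

The keys and the declared grain are exactly the kind of detail the bronze/silver/gold conversation tends to skip.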

ETL Concepts in Classic Data Warehousing (Kimball Methodology)

Kimball’s approach to data warehousing emphasizes ETL pipelines that transform raw operational data into star schema or dimensional models optimized for analytics.

1. Extract

Pull data from source systems (ERP, CRM, flat files, logs, APIs).

Capture both historical (full load) and incremental (change data capture).

Sources are diverse and often have inconsistent formats.

2. Transform

Cleanse, standardize, and integrate data.

Apply business rules and conform dimensions (so Customer means the same across systems).

Handle deduplication, null handling, type conversions.

Create surrogate keys and maintain slowly changing dimensions (SCD Type 1, 2, 3, etc.).

Build fact tables with measures (sales amount, order count, etc.).
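
Surrogate keys and slowly changing dimensions are easier to show than to describe. Below is a minimal sketch of Type 2 handling in plain Python; the `apply_scd2` helper, the `city` attribute, and the 9999-12-31 "still current" end date are illustrative assumptions, not something lifted from Kimball's text.

```python
from datetime import date

HIGH_DATE = date(9999, 12, 31)  # assumed convention for "still current"


def apply_scd2(dimension, customer_id, city, effective):
    """Apply one source change to a Type 2 dimension held as a list of dicts."""
    current = next(
        (row for row in dimension
         if row["customer_id"] == customer_id and row["is_current"]),
        None,
    )
    if current is not None:
        if current["city"] == city:
            return dimension  # nothing changed, keep the current version
        # Expire the old version rather than overwrite it (overwriting is Type 1).
        current["valid_to"] = effective
        current["is_current"] = False
    dimension.append({
        "customer_key": len(dimension) + 1,  # surrogate key, independent of source id
        "customer_id": customer_id,
        "city": city,
        "valid_from": effective,
        "valid_to": HIGH_DATE,
        "is_current": True,
    })
    return dimension


dim_customer = []
apply_scd2(dim_customer, "C-1", "Denver", date(2023, 1, 1))
apply_scd2(dim_customer, "C-1", "Denver", date(2023, 6, 1))   # no change, no new row
apply_scd2(dim_customer, "C-1", "Austin", date(2024, 3, 15))  # customer moved

for row in dim_customer:
    print(row)
```

The same customer ends up with two surrogate keys, one per version, so facts loaded before the move keep pointing at the Denver row.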

Classic Warehouse Layers (Kimball vs Medallion)

Staging Layer → Similar to Bronze (raw ingestion).

Cleansed/Integration Layer → Similar to Silver (clean + conformed).

Presentation Layer (Star Schema, Data Marts) → Similar to Gold (business-ready).

Why Kimball’s Approach Mattered

Performance: Optimized schemas for BI tools.

Usability: Business-friendly star schemas (easy for analysts).

Consistency: Conformed dimensions enforce enterprise-wide definitions.

Auditability: Historical changes preserved with SCDs.

┌────────────────────────────┐
│     Presentation Layer     │
│  - Star Schemas (Facts +   │
│    Dimensions)             │
│  - Data Marts              │
│  - BI / OLAP Reports       │
└─────────────▲──────────────┘
              │
┌─────────────┴──────────────┐
│   Cleansed / Integration   │
│  - Standardized Data       │
│  - Conformed Dimensions    │
│  - Business Rules Applied  │
└─────────────▲──────────────┘
              │
┌─────────────┴──────────────┐
│       Staging Layer        │
│  - Raw Extracted Data      │
│  - Minimal Processing      │
│  - Audit Trail             │
└─────────────▲──────────────┘
              │
┌─────────────┴──────────────┐
│       Source Systems       │
│  - ERP, CRM, Flat Files    │
│  - APIs, Logs              │
└────────────────────────────┘

Proof people do whatever they want and call it what they want.

In truth, what Databricks was calling the Gold layer was really a “Data Mart,” which was a further aggregation of analytics based on a Fact table. However, in the real world, you would have numerous fact tables that feed all the major reports and downstream consumers, with only a few consumers requiring further aggregation in the form of a Data Mart.

What happened was that the writers of medallion architecture at Databricks simply didn’t have the proper background and experience to understand these nuances. They sold the idea that you HAD to have three layers, Bronze, Silver, Gold for every dataset (You do realize that this required more storage and compute, which goes directly into the pockets of Databricks, right??)

You should model your data in your Lake House the same way it was modeled in the Data Warehouse. It works quite well, is not confusing, and is based not on marketing material but on about three decades of proven use.

load raw data

transform raw data into Fact or Dimension

create Data Marts of aggregations where needed
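
The last step is the only one that looks like a "Gold layer," and it is optional. A rough sketch with Python's built-in `sqlite3` module, where the `fact_sales` and `mart_monthly_revenue` tables and their rows are hypothetical: most consumers query the fact table directly, and the mart exists only because one dashboard wants a monthly rollup.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE fact_sales (
    sale_date TEXT NOT NULL,   -- ISO date, e.g. 2024-01-05
    region    TEXT NOT NULL,
    amount    REAL NOT NULL
);
INSERT INTO fact_sales VALUES
    ('2024-01-05', 'east', 100.0),
    ('2024-01-20', 'east', 50.0),
    ('2024-01-07', 'west', 70.0),
    ('2024-02-02', 'east', 30.0);

-- Data Mart: monthly revenue by region, a further aggregation of the fact table.
CREATE TABLE mart_monthly_revenue AS
SELECT substr(sale_date, 1, 7) AS month,
       region,
       SUM(amount) AS revenue
FROM fact_sales
GROUP BY substr(sale_date, 1, 7), region;
""")

mart_rows = conn.execute(
    "SELECT month, region, revenue FROM mart_monthly_revenue ORDER BY month, region"
).fetchall()
fact_rows = conn.execute("SELECT COUNT(*) FROM fact_sales").fetchone()[0]

print(mart_rows)
print(fact_rows)
```

Datasets that no one needs rolled up simply stop at the fact table, with no third copy to store or recompute.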

What, you think this IS medallion architecture?

Well, you hobbit, which came first, Kimball or Databricks? All Databricks managed to do was mislead a generation of developers and confuse them, which is front and center in this Reddit post.

I still can’t get over the fact that people are now conflating Data Contracts with the medallion architecture (data modeling). This is hilarious. They deserve each other and the misery each of them brings to the other.

Look, beauty is in the eye of the beholder.

At the end of the day people are going to model data however they want, mostly using what they find on the internet, YouTube, etc. It’s not like they teach you much data modeling in school.

If you’re lucky someone will teach you normalization, but that’s about it.

What, you think Databricks was the first SaaS company to co-opt some well-accepted and popular concept, twist it to fit a narrative, and move on with life, consequences be damned?

In the Modern Data Stack world, this is an everyday occurrence. I think the real lesson here is to tread lightly and carefully when following lemmings off a cliff. You might end up like a Redditor thinking that Medallion Architecture is about Data Contracts.

Spot on. Now if we can make Data Vault the default modeling approach for Silver/conformed, I will be happy.

I agree with the sentiment. Medallion in my mind has always been about the “quality” of each particular dataset. The terminology created a lot of frustration within Nike as well. This is due in part to Data Mesh coming along around the same time as Medallion was popularized. Now you have “golden” data products that are fit for purpose (which can be argued as gold-layer “data mart” with data contracts).

My big pet peeve is that most of the design patterns skip “accountability” and when people read about the various layers (be it with traditional ETL -> raw/cleansed/normalized->X) then they can go so far in the wrong direction as hiring teams to manage “layers” - teamB does “silver”….

Cheers