Microservices for Data Engineering

Dream Come True or Nightmare?

You know, looking back on it, I kinda figured by now all of us Data Engineers would be building microservices for this and that, and Batch ETL would have long lain dead under a pile of leaves in the backyard.

I remember when microservices became a thing. I mean, it was literally all anyone talked about. You were labeled a knave of the worst kind if you didn’t at least pretend to be on board and in the know.

But here we sit. Still pounding on the keyboard, hacking out the same old scripts and batch pipelines day after day, like smashing rocks in a gulag.

Could Microservices work in a Data Engineering context? Could they replace our Batch ETL pipelines? Would it make our lives easier? Is it our Savior?


Can Microservices work for Data Engineering?

This is a very interesting question to ponder. It goes beyond the question of streaming vs batch to something much larger: how should Data Teams be approaching the architecture and solutions they build?

For the last many decades, with no end in sight, Batch ETL has been the bread and butter of most data pipelines. Today, 85%+ of daily data pipelines are still batch in nature.

What this usually looks like is mono-repo style designs.

Most of the business logic for data transformations of all kinds is usually tied up in a number of utility or class files and methods that are, hopefully, shared and imported across different ingest scripts.

    # some utils.py file

    def read_csv():
        ...

    def write_parquet():
        ...

… and used …

    from utils import write_parquet

    # some ingest script

This sort of method usually works fine. Write methods and functions that can be unit-tested and shared throughout many different ingestion scripts and pipelines.

The only problem with this approach is that over time, especially on large and complex teams and projects … it starts to get overwhelming … simply because there is TOO MUCH code and logic in a single repo.

Even if you are good about creating many different Classes or Utility files … at some point you run into the same problem again. Too much stuff.

What’s the option?

So then what? Some people start to make their own libraries … say, pip-installable Python packages hosted on something like AWS CodeArtifact, for example.

Could microservices be the answer?

This brings us to the question at hand. Why don’t more teams embrace the classic microservice architecture popular in other Software Engineering spaces?

I have a theory that many Data Engineering teams are made up of a lot of non-software-engineering background folks. Data Engineering in general has been well known to lag behind SE best practices. Maybe most Data Teams simply don’t think of microservices as an option.

Depending on your point of view … microservices could make things more complex or less. It’s probably more about hiding the complexity pea under a cup: which cup are you going to choose?

This sort of microservices approach to break up specific logic has become more of a reality with the adoption of Rust as an acceptable programming tool, with support from the likes of AWS for Rust-based Lambdas etc.

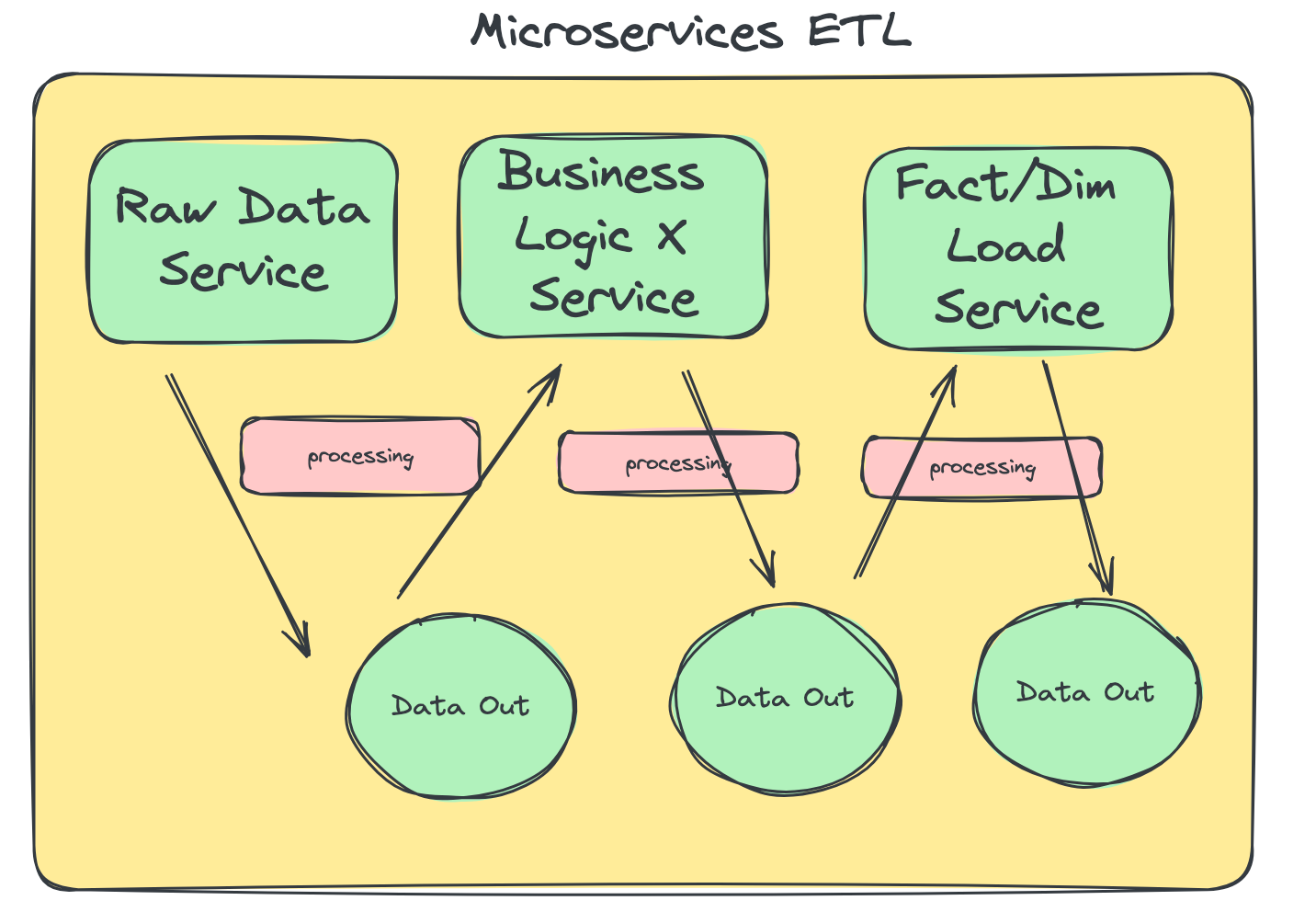

Example.

As a simple example, what if we had a common use case of converting fixed-width flat files to tab-delimited flat files as part of a raw ingestion?

Typically, a very normal Data Team on Databricks, for example, would just add some code to their mono-repo to do this simple work: reading fixed-width flat files and writing back out tab-delimited text files, processing the raw data in preparation for ingestion into a Data Lake.
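For a sense of scale, here is a rough sketch of what that conversion logic might look like in Python. The function name `fixed_width_to_tsv` and the column layout (a 3-character id, a 6-character name, a 3-character quantity) are made up for illustration; a real file would come with its own spec.

```python
from typing import Iterable, Iterator, Sequence


def fixed_width_to_tsv(lines: Iterable[str], widths: Sequence[int]) -> Iterator[str]:
    """Yield one tab-delimited line per fixed-width input line."""
    for line in lines:
        line = line.rstrip("\r\n")
        fields = []
        start = 0
        for width in widths:
            # Slice each column by position and trim its padding.
            fields.append(line[start:start + width].strip())
            start += width
        yield "\t".join(fields)


# Hypothetical layout: id (3 chars), name (6 chars), quantity (3 chars).
WIDTHS = [3, 6, 3]
sample = [
    "001Alice   7\n",
    "002Bob    42\n",
]
for row in fixed_width_to_tsv(sample, WIDTHS):
    print(row)
```

It is a generator on purpose, so a large file can be streamed line by line instead of being loaded into memory all at once.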

But what if we wanted to take a simple step towards a microservices-inspired architecture without jumping all the way down the rabbit hole?

We could write a standalone Rust-based Lambda that triggers on a file hitting an S3 bucket, does the work, and pushes the results back to S3 again.

Very small, encapsulated logic in one spot that does a very specific thing only. Easy to manage and debug.

Here is an example of doing just that in one of my GitHub repos.
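The overall shape of such a function (S3 event in, object read, transform, object written back) can be sketched in a few lines. This is not the code from that repo: it is written in Python to match the earlier snippet rather than Rust, and the names `handle_s3_event`, `output_prefix`, and the pass-through `transform` are assumptions for illustration. The `get_object` and `put_object` calls are the standard boto3 S3 client methods.

```python
from typing import Callable, Iterable
from urllib.parse import unquote_plus


def handle_s3_event(
    event: dict,
    s3,
    transform: Callable[[Iterable[str]], Iterable[str]],
    output_prefix: str = "converted/",  # hypothetical destination prefix
) -> list:
    """Run `transform` over each object named in an S3 event and write the result back."""
    written = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        body = s3.get_object(Bucket=bucket, Key=key)["Body"].read().decode("utf-8")
        converted = "\n".join(transform(body.splitlines()))
        # Keep the file name, but write under a separate prefix.
        out_key = output_prefix + key.rsplit("/", 1)[-1]
        s3.put_object(Bucket=bucket, Key=out_key, Body=converted.encode("utf-8"))
        written.append(out_key)
    return written


def lambda_handler(event, context):
    # Imported lazily so the logic above can be unit-tested without AWS.
    import boto3

    # Placeholder transform that passes lines through unchanged;
    # the fixed-width conversion would be plugged in here.
    return handle_s3_event(event, boto3.client("s3"), transform=lambda lines: lines)
```

One thing to watch if the output lands in the same bucket that fires the trigger: the trigger should exclude the output prefix, otherwise the function ends up invoking itself on its own results.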

Now there is nothing really earth-shattering about writing Rust and putting it inside a Lambda. But, we can probably safely say that there are very few Data Teams that are approaching classic ETL problems in this manner.

With this new design, we move from slow, long-running classic Batch ETL that isn’t very scalable probably … to … an AWS Lambda approach with Rust that is extremely fast, self-contained, and scalable.

In the same way, we could break up a lot of logic inside classic mono-repos into smaller “services” where our logic is …

contained

scalable

fast

easier to debug and reason about

Of course, this means a complete mind shift and probably a whole new set of skills for many Data Teams. Shifting from batch SQL and Spark pipelines on Snowflake or Databricks managed in mono-repos to more of a broken up “micro-services” approach is probably not an easy or overnight change.

Conclusion

What do you think? Are microservices something that holds a future in the broader Data Engineering community? It’s hard to say. I would think it depends on the Data Team themselves.

Change is hard for everyone.

I think it will be some time before we see any movement away from Batch ETL in mono-repos; old habits die hard. But I think it’s an interesting area to start exploring, especially with this infiltration of Rust and Rust-based Python packages.

With tools like AWS Lambda in combination with some creativity … it’s possible to throw the chains of the old off and try on something new.

Very interesting. But how about Observability / Monitoring?

In the case of your example, adding the change to the existing Batch infra allows easy monitoring without changing much.

How do you think we can still have centralized observability & monitoring with Microservices in DE contexts?

Maybe we should create a PoC to shock the community