I’m not sure how many of you ne'er-do-wells who gave your poor mothers heartburn in your youth now work in the ML (Machine Learning) space, but this one is for those few weary and battered souls who have been given that sad task.

Recently I spent some time evaluating MLflow, managed MLflow on Databricks that is, and I have some reports to deliver to you, my dear readers.

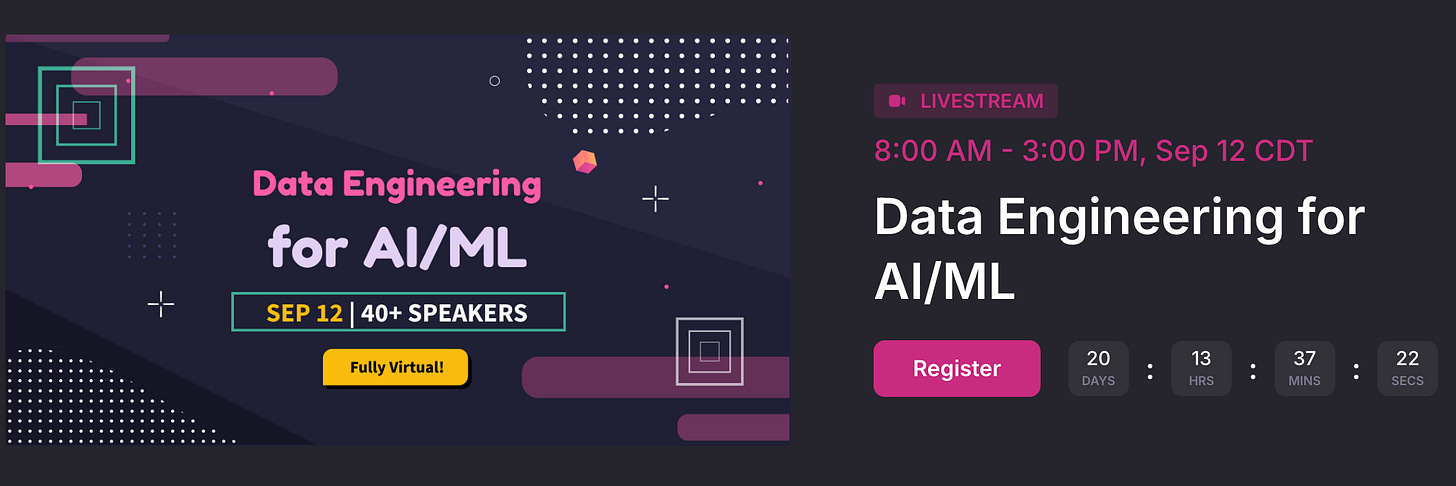


Managed MLflow with Databricks.

So, back to the topic at hand: MLflow. MLflow is an open-source platform designed to manage the complete machine learning lifecycle. It provides tools to track experiments, package code into reproducible runs, and share and deploy models.

Think of MLflow as a tool that tries to tame the Wild West of Data Science and manage the Data Engineering aspects of what it takes to do the Machine Learning Ops (MLOps) portion of that job.

I’ve been working with Machine Learning for about … hmm … 6 years maybe? And I’ve learned a few things in that time.

Namely, that Data Science is an R&D-heavy job, and when you have a lot of experimentation going on, and custom code being written to …

gather and transform data

create features

run models

save results

validate results

etc.

Those steps can look vastly different depending on the TYPE of Machine Learning model(s) being worked on, and what exactly the DATA looks like.

This is the essence of why MLOps is still a relatively new field that has yet to find its feet on solid ground.

I’ve worked with image data, tabular data, and everything in between when preparing data for ingestion into Machine Learning models. The data is as varied as the people doing the work.

The Ops part of MLOps.

One of the surprising parts of Machine Learning to those new to the discipline is when they crack Pandora’s Box open and see what it actually takes to put models into Production …

it isn’t the actual training of the model that is difficult

it isn’t the prediction part of that model that is difficult

For example … it usually goes something like this.
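Here is a minimal sketch of what I mean, assuming scikit-learn and its bundled iris dataset (both are my picks for illustration, swap in whatever you actually use):

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Gather the data (the bundled iris dataset stands in for your real data).
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Train the model.
model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

# Predict on data the model has not seen, then score the predictions.
predictions = model.predict(X_test)
accuracy = accuracy_score(y_test, predictions)
print(f"accuracy: {accuracy:.3f}")
```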

Of course, things get a little more complicated, but you get the point. No black magic or rocket science going on here.

Nay, this isn’t the difficult part. Just like the writing of any code for Data Engineering rarely becomes a problem.

It’s the processes and tracking of what is going on that become the problem. It’s getting some consistency and reproducibility in the process that becomes the problem.

Ok, so enough beating around the bush, let’s get back to the issue at hand, managed MLflow on Databricks, what it does, and why it is useful.

Managed MLflow with Databricks.

So after my long-winded discussion above on what actually makes Machine Learning and MLOps difficult and important, let’s do the 10,000-foot view of MLflow on Databricks and I think it will jump out at you why it’s an important tool.

Here are the key concepts to understand about MLflow on Databricks.

Tracking

Models

Projects

This picture sums it up well.

So what can you do with MLflow on Databricks?

You can have a Project, which helps encapsulate all the code and configurations needed to run a particular Machine Learning experiment. Super helpful for consistency, fast iteration, and having multiple people working on the same or similar projects.

Each Project is described by a YAML file named MLproject.

Of course, if you are familiar with Databricks, you know you can clone Git repos up there, as well as those dreaded Notebooks … think about a Project as all that stuff, your code, and configs that will actually run … all in one spot.
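To make the YAML part concrete, here is a rough sketch of what an MLproject file can look like. I write it out from Python just so the example is self-contained. The project name, the parameters, the python_env.yaml file, and the train.py script are all hypothetical, made up for illustration.

```python
import tempfile
from pathlib import Path

# Hypothetical project definition: the name, parameters, environment file,
# and train.py script are invented for this sketch.
MLPROJECT = """\
name: churn_model

python_env: python_env.yaml

entry_points:
  main:
    parameters:
      alpha: {type: float, default: 0.5}
      data_path: {type: string, default: "data/train.csv"}
    command: "python train.py --alpha {alpha} --data-path {data_path}"
"""

# MLflow looks for a file literally named "MLproject" at the project root.
project_dir = Path(tempfile.mkdtemp())
mlproject_path = project_dir / "MLproject"
mlproject_path.write_text(MLPROJECT)
print(mlproject_path.read_text())
```

The entry point is the bit that matters: it pins down the command to run and the parameters it accepts, so everyone on the team kicks off the same thing the same way.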

Experiments and Tracking

Of course, once you have whatever code you want to run, the next obvious thing, which is typically hard in bespoke MLOps setups, is the Tracking and Monitoring of an “Experiment” … aka the Data Scientist or Machine Learning Engineer will probably …

run the code many times over tweaking parameters and datasets

save the results from those many runs

want to review everything they tried and share those results

be able to find different “artifacts” from the experiments (datasets, graphs, charts, configs, params, etc).

This is also where Managed MLflow on Databricks shines. Below is some sample Databricks code; note the Tracking and Logging of the Experiments.

It’s hard to overstate how beautiful of a thing this is, speaking from personal experience. Imagine with me, a team of 12 Data Scientists, all working on the same or a few different models.

How do you ensure a similar workflow? How do you track what everyone is doing? How do you reproduce it? Where do you store it? How do you display it and make sense of it?

With MLflow on Databricks these simple API calls above can do all that like it’s nothing. This is a game changer and will absolutely make the ML lifecycle much quicker and less error-prone.

No more Wild West.

Models.

The last important piece we have not talked about, that can be a pain for MLOps … is managing all those nasty little Model Objects themselves.

It’s a pain to, in a bespoke way, manage …

storage of model objects

model object versions

serving of those model objects

pulling models in and out of production

monitoring those models

etc

You get the drift. Once you have the model, that’s only half the battle … the real war starts when you have to manage those models.

This can all be done with Managed MLflow on Databricks via the UI or of course many different API calls from code.

Conclusion.

I know we glossed over pretty much everything very quickly, no detail is found here, but what I really wanted to do was communicate at a high level the difficulties of MLOps and how Managed MLflow by Databricks provides solutions for those problems.

This is the solid ground that all those shaky MLOps feet have been looking for all these years.

Hopefully, in coming articles, we can dive deeper into each one of these MLflow components and start to learn their inner workings.