_internal.DeltaProtocolError:

The Databricks and Delta Lake lie (the mighty have fallen)

First, a note from me before we chomp on them meat and taters.

Today I want to bring you along for a ride down the long, winding lane of an average day in the life of just another average Data Engineer. No glorious writing about the wonders of AI and how digital rainbows and unicorns leap from the ends of my fingers while I build Data Platforms that never break and cost nothing.

Not today.

If that’s where you find yourself, you’re not trying hard enough. Interesting work and life are found by turning over rocks and looking at what’s underneath. Stubbing the ole’ toe.

Today? We are going to stub our toe on some Databricks + Delta Lake + Polars + DeltaLake (python) + Daft.

The best-laid plans of mice and men, you know.

I’m going to give you my list of frustrations right out of the gate. So if you want some LinkedIn fodder, I’ll make it easy for you.

Nothing is ever as it seems

I smell the beginnings of troubles that aren’t easy to reverse

Your tech stack isn’t as open as you think

The devil’s in the details

🔄 Off the beaten path.

For most of my life I’ve found the most interesting path to walk is not the well-trodden one. Of course I know it’s important to have balance in all things Data Platform. We want …

reliability

large community of support

stability

… etc

Yet, in an effort to bring value and push the boundaries of what is possible, we must at times stray from the safe path. Of course, the minute we stray from the well-trodden way, we can expect trouble around every corner.

I, for one, think that difficulty in all parts of life tends to harden a person and make them more resilient.

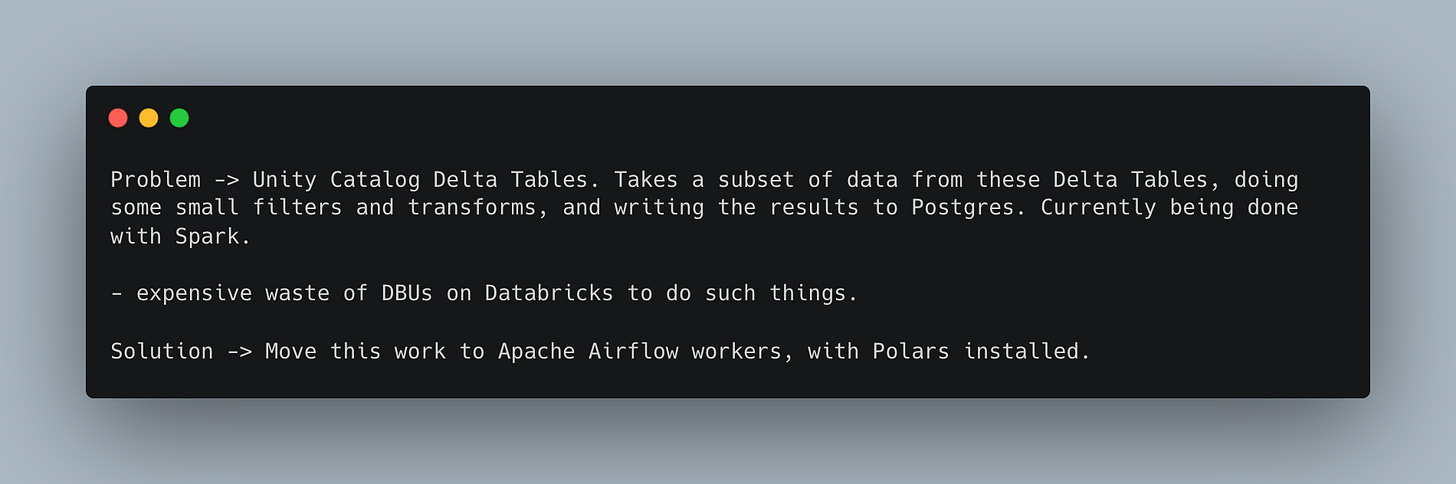

Ok, so let’s just walk through the problem I recently faced.

Turns out none of Polars, PyArrow, DeltaLake (python), or Daft could do the job. But at first? I mean, we should just shake hands all around and pat ourselves on the back … what a wonderful idea! Save money, it’s fun, simple. If we were going to count our chickens before they hatch, this would be the time to do it.

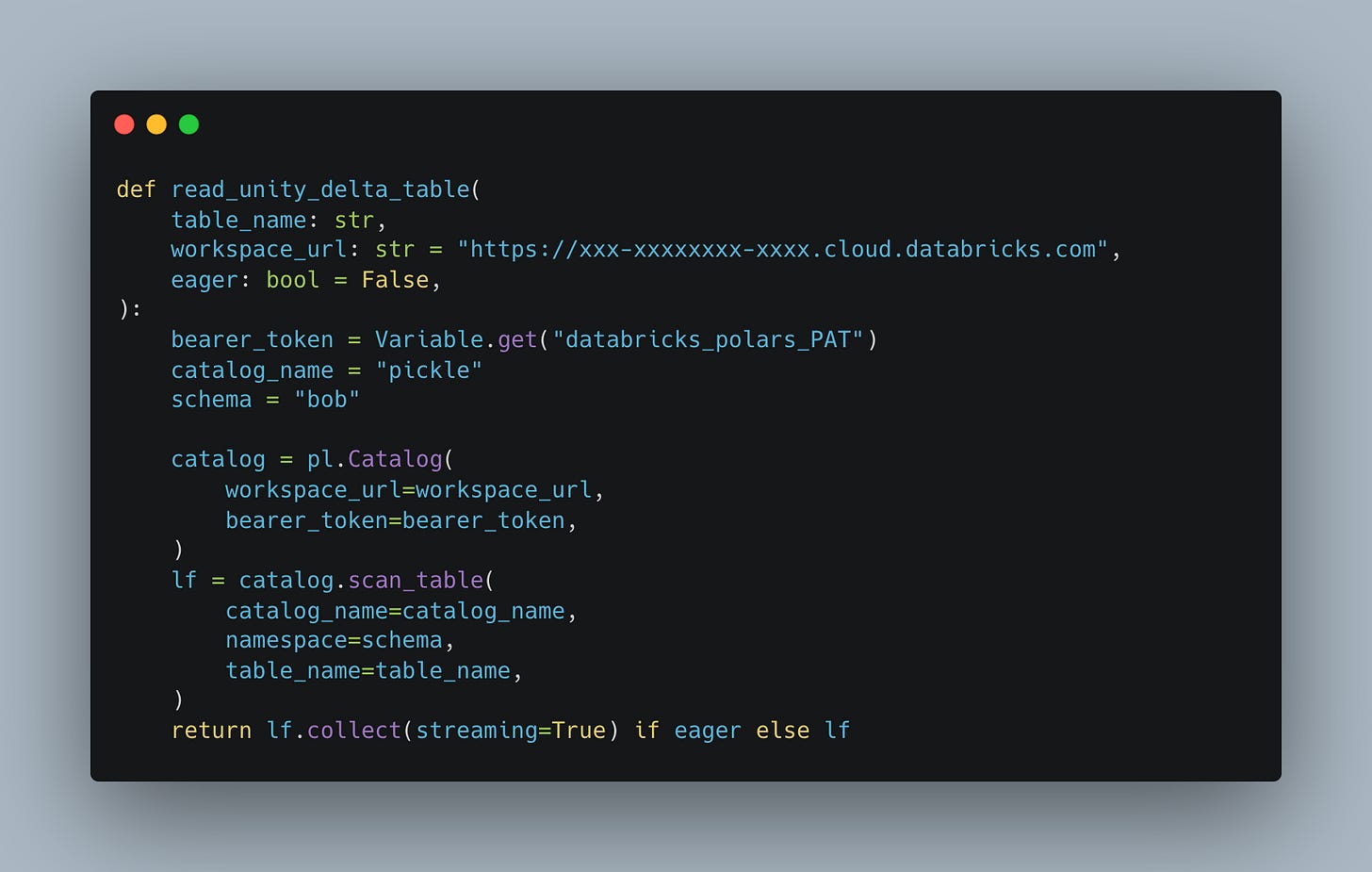

Heck, who can’t appreciate the simple approach of reading even a Unity Catalog Delta table with Polars?

Hot dang. It’s even lazy in nature, so as not to blow up memory. At this point we start to whistle a little tune. So wonderful; we can just imagine the cost savings and praise we will receive.

Who would have thought that going against the grain would be so easy??!

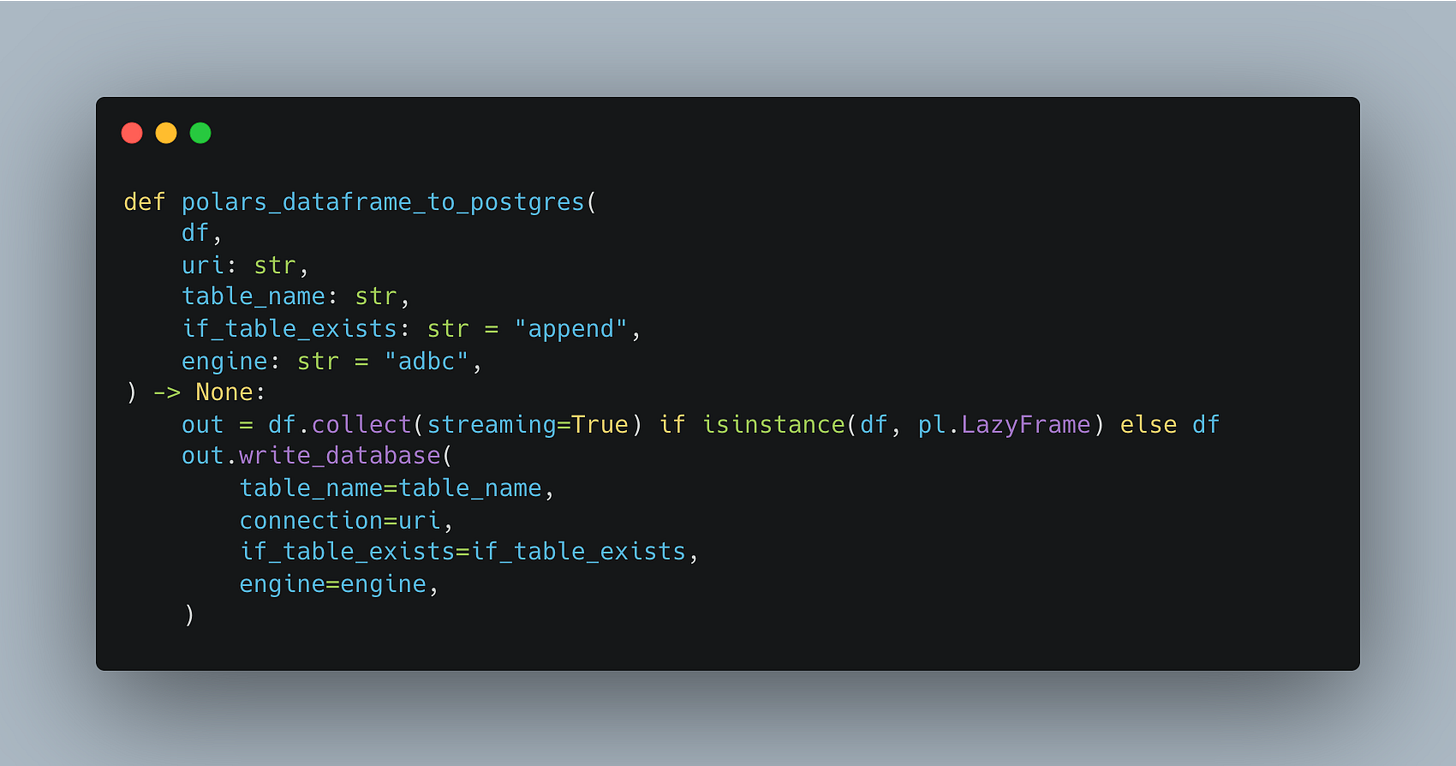

I mean blimey, the write to Postgres is even better.

What could possibly go wrong?

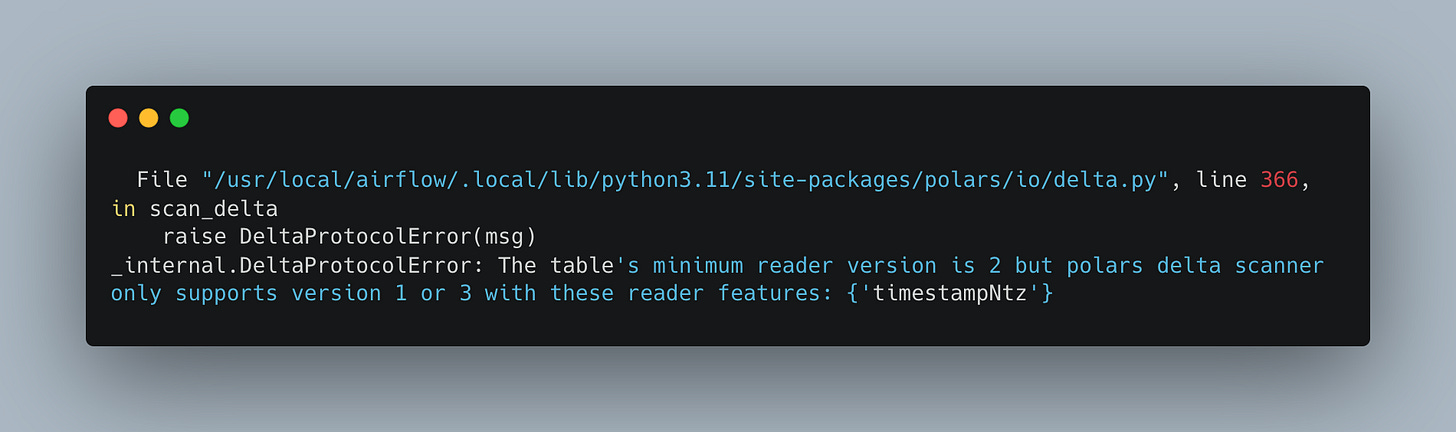

So, off we go to our development environment. Time to test in Airflow with some real live dev tables in Databricks Unity Catalog. Then it happens. What we all knew was coming.

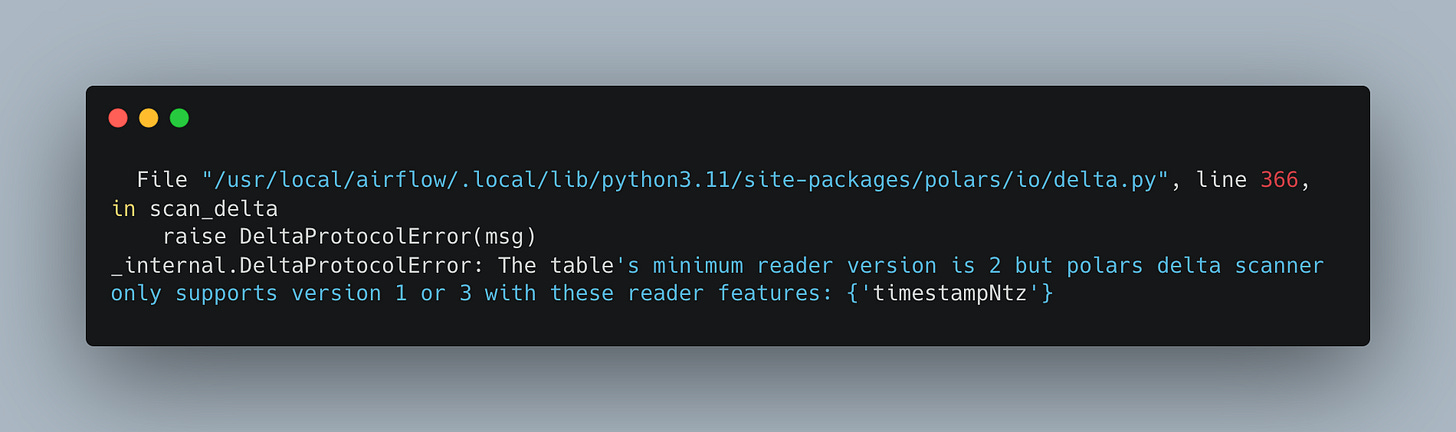

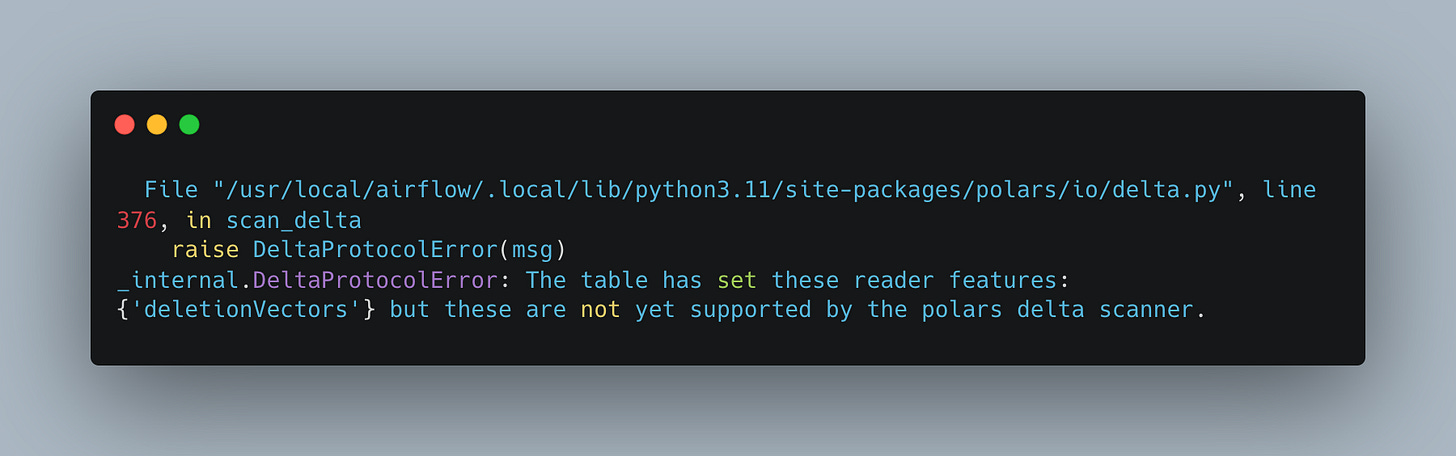

We stare at our screen for a while and rerun things a few times; of course, the error never changes.

🏢 Debugging time.

At this point we get a little annoyed. I never ran into these problems with PySpark on Databricks, across more Delta Tables than I can count, created over many DBR versions and different versions of this and that.

It just works.

Polars? Nope.

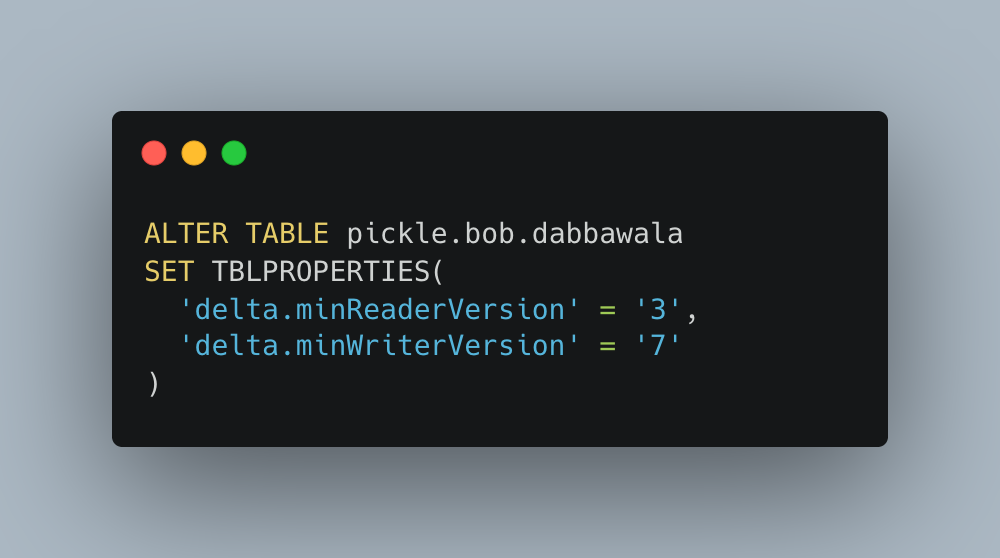

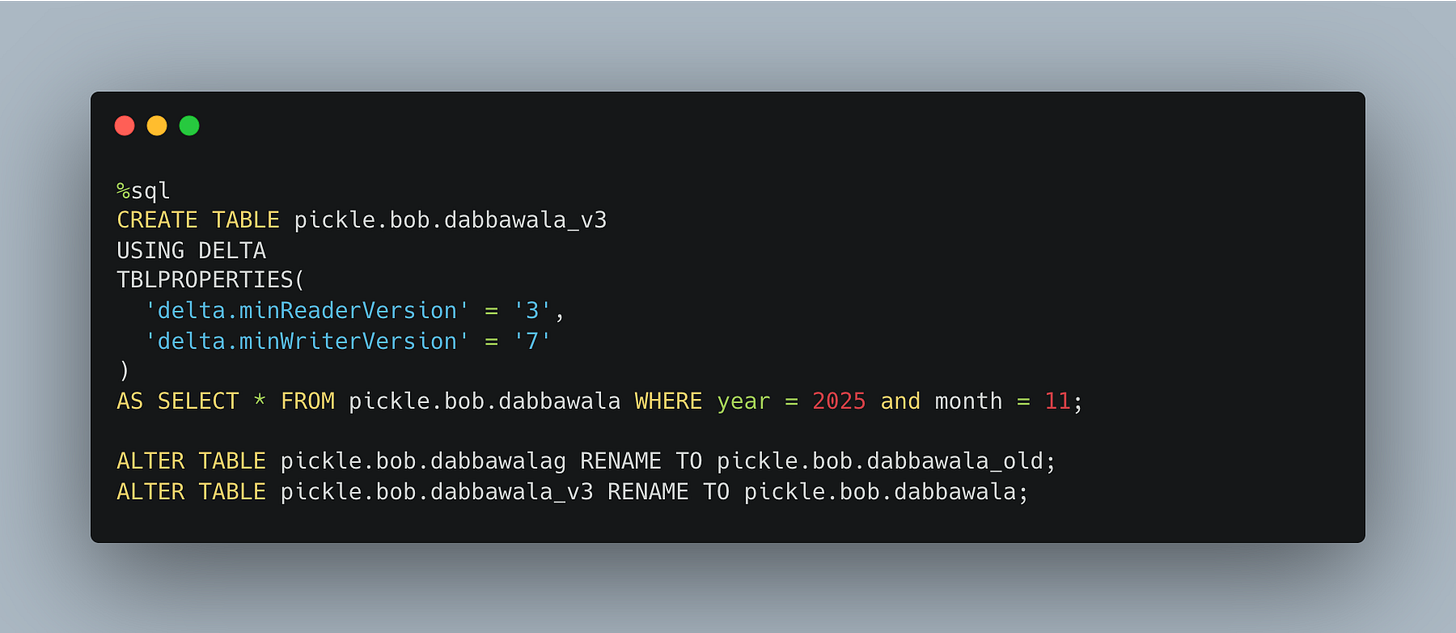

Well, OK, let’s just do what the error tells us to do: upgrade the Delta Table reader version to 3. Of course that will work, right?

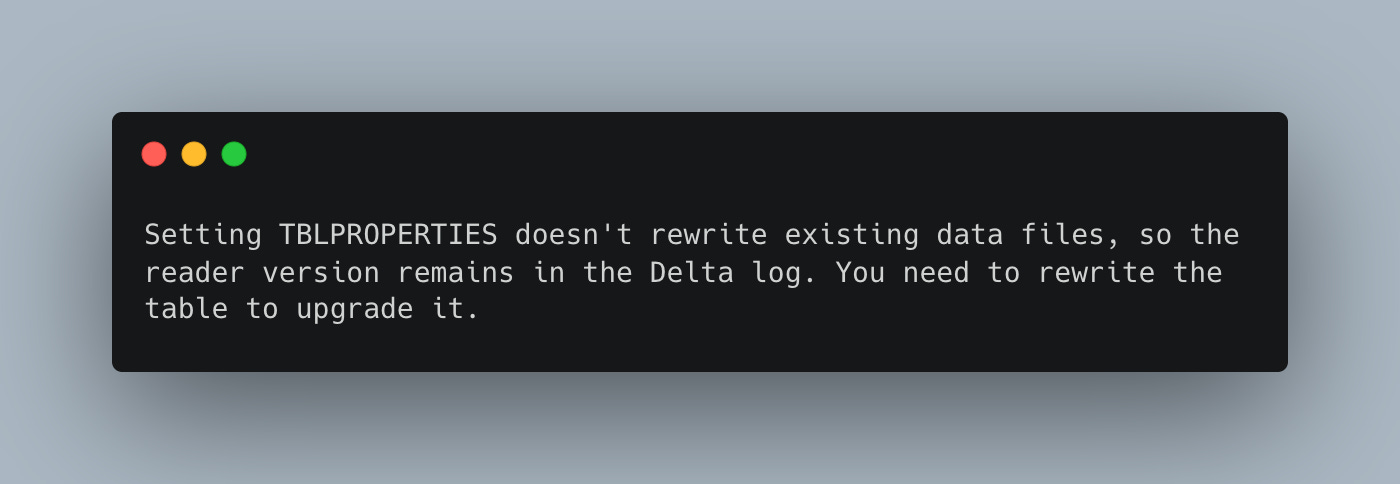

No go. Same error. What??!! I updated the flipping table. What gives?
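Before trusting any tool’s error message, it can help to look at what the table’s transaction log actually recorded after an upgrade like that. The Delta protocol stores this as a `protocol` action (`minReaderVersion`, `minWriterVersion` and, at reader version 3, a `readerFeatures` list) inside the JSON commit files under `_delta_log`. Here’s a minimal stdlib-only sketch, assuming you can reach the table’s files as a local or mounted path. `latest_protocol` is a hypothetical helper of mine, and it deliberately skips Parquet checkpoints, so on a table whose older commits have been cleaned up it may come back empty-handed.

```python
import json
from pathlib import Path


def latest_protocol(table_path):
    """Return the newest `protocol` action in a Delta table's JSON commits.

    Sketch only: reads `_delta_log/<version>.json` files in version order and
    ignores Parquet checkpoints, so it returns None if the protocol action now
    lives only in a checkpoint (e.g. after old commits were cleaned up).
    """
    log_dir = Path(table_path) / "_delta_log"
    protocol = None
    # Commit files are zero-padded version numbers, so sorting by name sorts by
    # version; anything else (checkpoints, compacted logs) is skipped.
    commits = sorted(p for p in log_dir.glob("*.json") if p.stem.isdigit())
    for commit in commits:
        for line in commit.read_text().splitlines():
            if not line.strip():
                continue
            action = json.loads(line)
            if "protocol" in action:
                # A later protocol action replaces any earlier one.
                protocol = action["protocol"]
    return protocol
```

If `readerFeatures` lists something your reader doesn’t handle, bumping the version number again won’t change the answer.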

Well, we could poke around the docs for a minute, I suppose.

Read, read. I did notice something about an upgrade being required for certain features. Is it a coincidence that our timestamp-ntz shows up in the list?
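For what it’s worth, here’s my reading of the Delta protocol spec on this: reader version 3 means “table features.” Instead of the version number implying a fixed set of capabilities, the table lists each feature a reader must understand (names like `timestampNtz` or `deletionVectors`), and a reader that doesn’t understand every one of them is supposed to refuse the table. So upgrading to version 3 doesn’t unlock anything by itself. A small sketch of that rule follows; `Protocol` and `read_blockers` are hypothetical names, and the client feature set you pass in is an assumption you’d have to fill in for each tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Protocol:
    """The parts of a Delta `protocol` action a reader cares about."""
    min_reader_version: int
    reader_features: frozenset = frozenset()


def read_blockers(table, client_max_version, client_features):
    """Return reasons a client can't read `table`; an empty list means readable."""
    blockers = []
    if table.min_reader_version > client_max_version:
        blockers.append(
            f"minReaderVersion {table.min_reader_version} > supported {client_max_version}"
        )
    # At reader version 3 the number alone isn't enough: every listed feature
    # has to be understood by the client.
    if table.min_reader_version >= 3:
        for feature in sorted(table.reader_features - set(client_features)):
            blockers.append(f"unsupported reader feature: {feature}")
    return blockers


# Hypothetical example: a v3 table with two features, read by a client that
# (by assumption) supports version 3 but only knows about timestampNtz.
table = Protocol(3, frozenset({"timestampNtz", "deletionVectors"}))
print(read_blockers(table, 3, {"timestampNtz"}))
# ['unsupported reader feature: deletionVectors']
```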

Probably not. What does it mean? How should I know??

What, you think I have unlimited time, both professionally and personally, to pick and prod at the minutiae of Delta Lake versions and the underpinnings of Polars’ Delta Lake readers?

Give me a break. After poking around the matrix for a bit, it seems the consensus might be this?

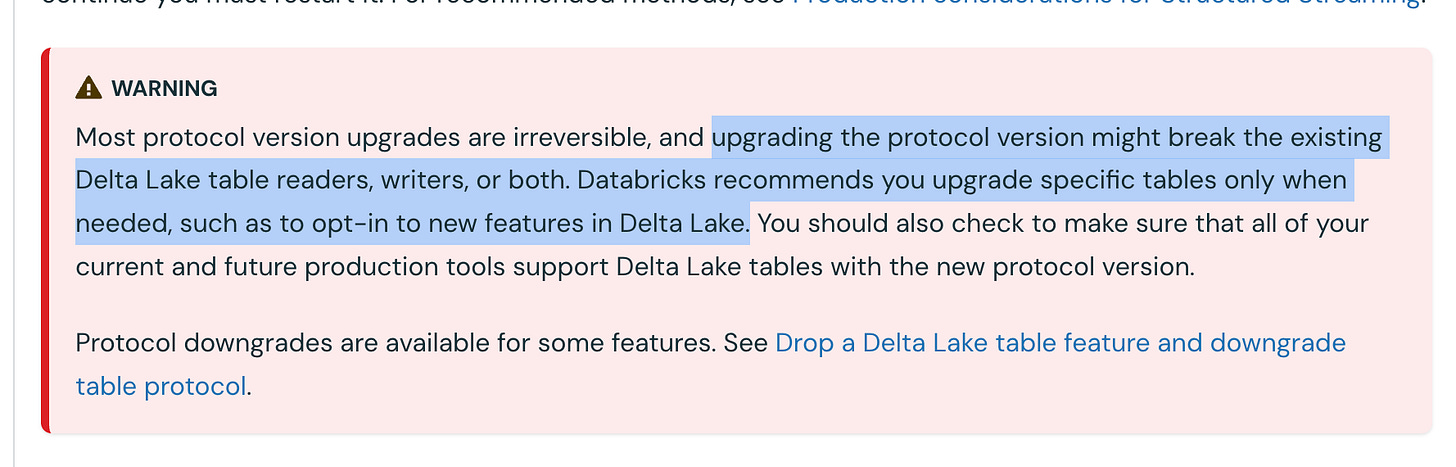

🧬 Lordy, they got a way of keeping a guy nervous about this stuff. Honestly, sometimes I would rather not know. It’s one thing to just fix something that breaks randomly. It’s another thing to be sitting on the edge of your seat waiting for the ball to drop.

Buggers.

Well, nothing for it. Let’s just re-write the table with the new reader version and see if Polars can chomp happily on the Delta Table like that.

Look ma! A new error!!!

Well, ain’t this just all sparkles and butterflies? Par for the course, I think. That’s the reality of day-to-day data engineering in real life, when you stray off the well-worn path, that is.

Then, my friend, the saga got worse.

One would think re-creating the table with `'enableDeletionVectors' = 'false'` would do the trick. To no avail. It seems just those magic words, DeletionVectors, bork Polars, true or false. I suppose in theory this makes sense.
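One possible explanation, which I haven’t confirmed for this exact table: in the Delta protocol, the table property (full name `delta.enableDeletionVectors`) lives in the `metaData` action’s `configuration` map, while the features a reader must understand live in the `protocol` action’s `readerFeatures` list. They’re recorded separately, so a table can say `'false'` and still list `deletionVectors` as a reader feature, and a reader that doesn’t support it will balk either way. My understanding is that on Databricks, actually removing the feature from the protocol is a separate `ALTER TABLE ... DROP FEATURE` step; check the docs for your DBR. Here’s a stdlib sketch (hypothetical helper name) that reports both values from the log’s action lines, oldest first:

```python
import json


def deletion_vector_status(log_lines):
    """Report the DV table property vs. the DV reader feature.

    `log_lines` is every action line from the commit files, oldest first.
    Returns (property_value, feature_listed); property_value is None when the
    property isn't set in the latest metaData action.
    """
    prop = None
    listed = False
    for line in log_lines:
        if not line.strip():
            continue
        action = json.loads(line)
        if "metaData" in action:
            # Table properties live in metaData.configuration (string values);
            # the latest metaData action replaces earlier ones.
            config = action["metaData"].get("configuration", {})
            prop = config.get("delta.enableDeletionVectors")
        if "protocol" in action:
            # What a reader must understand lives in protocol.readerFeatures.
            listed = "deletionVectors" in action["protocol"].get("readerFeatures", [])
    return prop, listed
```

A result like `('false', True)` would mean the table says “off” while still telling every reader it must understand deletion vectors.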

🧰 DeletionVectors are newer-ish. I had been running these commands on DBR 17-something. What if we wind the DBR clock back to something like DBR 13?

The truth is, though, winding back DBR versions and creating tables with maybe reader version 1 … that isn’t really a true fix. We need our Delta Lake tables to keep up with the times, to grow and mature. You can’t wind back the clock to move ahead.
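To make the “can’t wind back the clock” point concrete: per the Delta protocol spec, features come with minimum reader/writer versions, so a table that actually uses something like TIMESTAMP_NTZ columns or deletion vectors simply can’t be expressed at reader version 1. The version pairs below are my reading of the spec’s feature table (a subset only, worth double-checking against the current spec), and `min_protocol` is a hypothetical helper:

```python
# Minimum (reader, writer) versions per feature, as I read the Delta protocol
# spec's feature table. A subset only; check the current spec before relying on it.
FEATURE_MIN_VERSIONS = {
    "appendOnly": (1, 2),
    "changeDataFeed": (1, 4),
    "columnMapping": (2, 5),
    "deletionVectors": (3, 7),
    "timestampNtz": (3, 7),
}


def min_protocol(features):
    """Smallest (minReaderVersion, minWriterVersion) that can carry `features`."""
    reader, writer = 1, 1
    for name in features:
        # Raises KeyError for features this sketch doesn't know about.
        r, w = FEATURE_MIN_VERSIONS[name]
        reader, writer = max(reader, r), max(writer, w)
    return reader, writer
```

So `min_protocol(["timestampNtz"])` comes out as `(3, 7)`: the moment a schema uses that type, reader version 1 is off the table.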

A strong tool needs an ecosystem of frameworks that all work together in harmony. That’s what Delta Lake used to be back in the day. No more.

Sadly, these other non-Spark tools are lagging behind, these breaking changes make things difficult, and now we’ve painted ourselves into the proverbial corner.

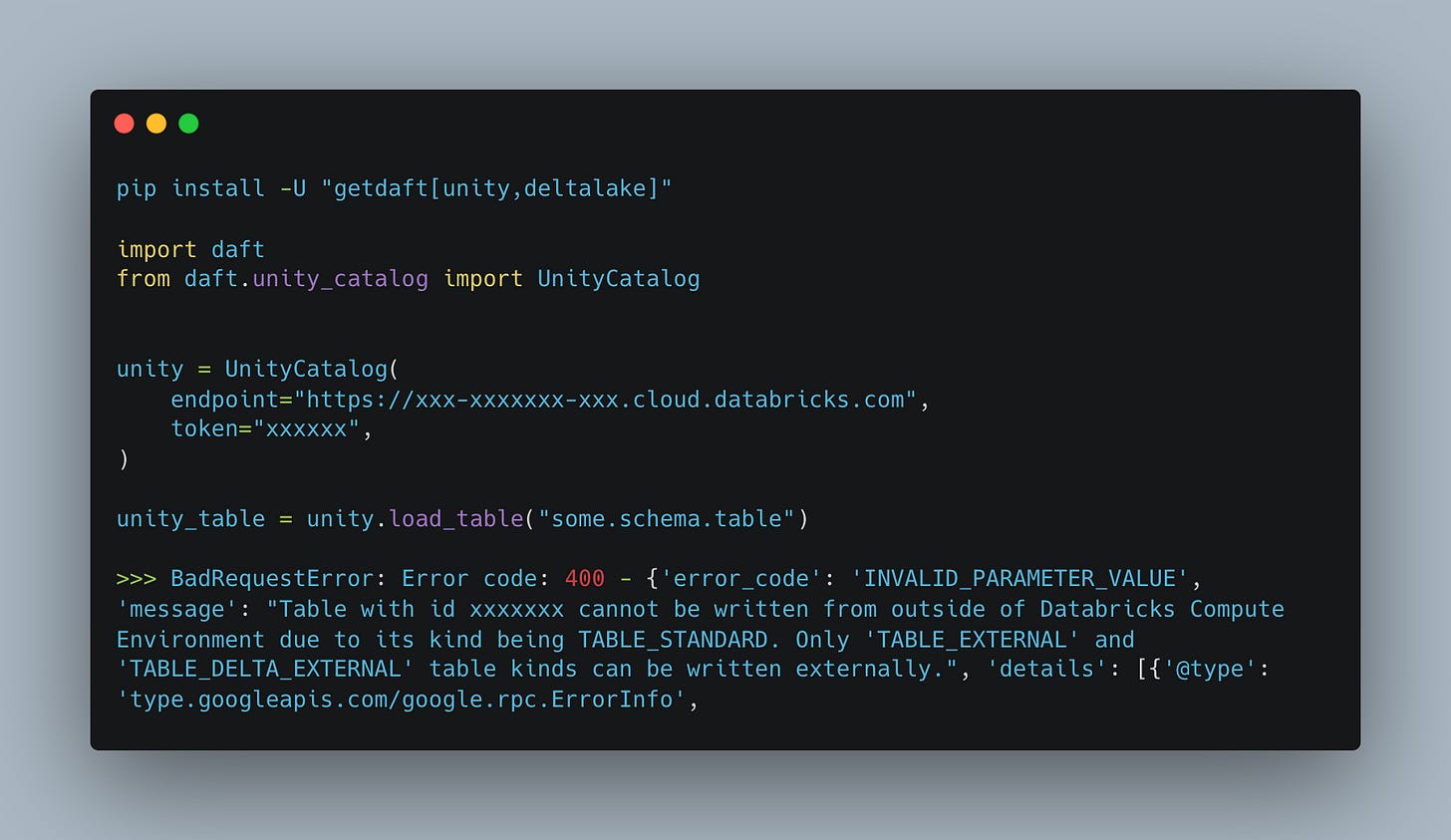

To make matters even worse, or funnier, depending on your point of view, I thought I would try using Daft from a Notebook inside Databricks to read a Delta Lake table. (The pip install instructions in their docs are incorrect.) I’ve done this many times in the past, but things change, if you catch my drift.

Databricks is saying … not going to happen Sunny Jim, get your dirty little hands off.

🗂️ And yes, external data access on the metastore is turned on.

So, what has happened? Quietly, on purpose or not, if you’re using Delta Lake tables in a Databricks environment with any semblance of recent tooling (DBR, etc.), you are getting locked in. Your Delta Tables and DBUs ain’t going anywhere, you bugger, so lock in for the ride.

Who in their right mind is going to start manually upgrading and downgrading reader and writer versions depending on which tool they want to use??!!

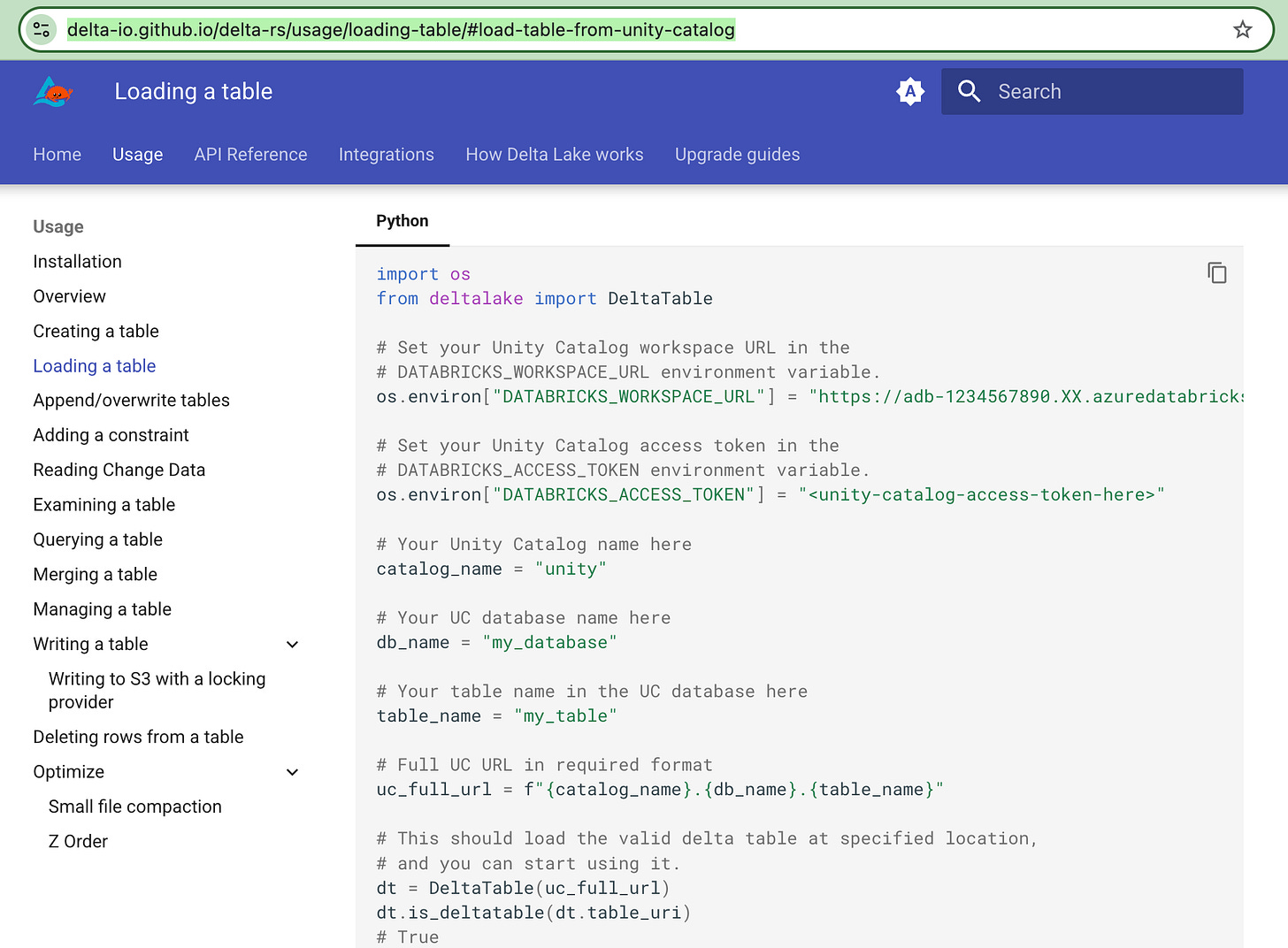

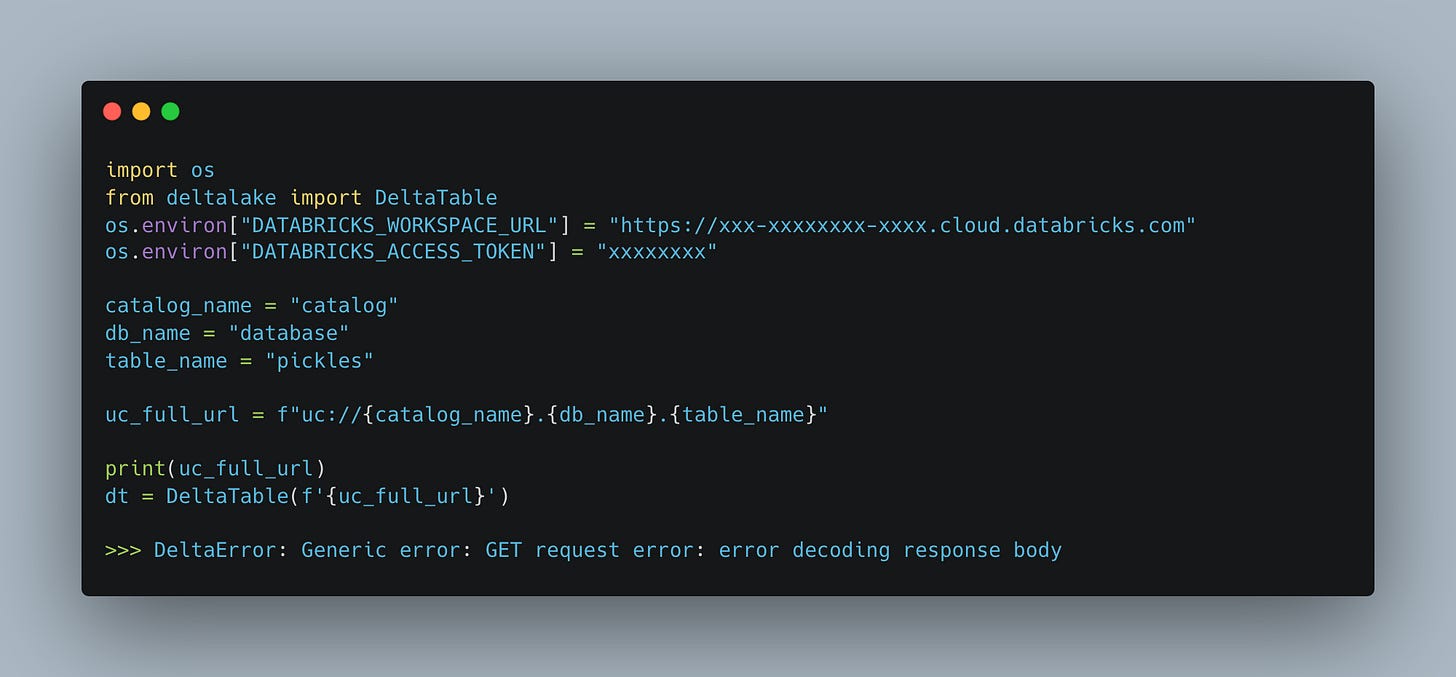

I thought to myself, heck, why don’t I use the deltalake Python package, straight from the horse’s mouth (the Delta Lake project, which Databricks created), so to speak. THAT, of all tools, must be able to read me a Delta Lake table these days.

Oh how the mighty have fallen.

Number one, the docs have an incorrect example that might throw you off until you read the wording closely: you need a `uc://` in front of your full `uc_full_url`. At which point, it breaks again.
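For the record, the doc fix itself is just a string prefix. Here’s a tiny hypothetical helper (my name, not a deltalake API), assuming `uc_full_url` is the three-part `catalog.schema.table` name:

```python
def to_uc_uri(full_name):
    """Prefix a three-part Unity Catalog name with `uc://` (hypothetical helper).

    Assumes `full_name` is `catalog.schema.table`; leaves an existing `uc://`
    prefix alone and rejects anything without exactly three non-empty parts.
    """
    name = full_name.removeprefix("uc://")
    parts = name.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected catalog.schema.table, got {full_name!r}")
    return "uc://" + name
```

It only gets you past the first stumble, though; as I said, it broke again right after.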

This by no means works, and pukes right out.

This is quite the change from even a year or two ago, if you ask me. This new state reminds me of Apache Iceberg: fragmented, many moving parts, and nothing works besides the DBU-money-accumulating version with Spark.

If Apache Iceberg ever had a chance to resurrect itself as a good open-source option that can be relied upon and actually works with many frameworks in various situations, this is it.

Databricks and Delta Lake are no longer the innocent bringers of Lake House truth to a broken world. Corrupted and stumbling over dollar signs and corporate mishap.

I have to admit, in my heart of hearts I knew it was coming. But being slowly boiled like a frog in a pot feels good at first. No one thinks it will reach the point where you are literally locked into your Data Platform with zero wiggle room, short of major antics.

⚙️ The future.

The endless cycle of heartache where the digital rubber meets the road. Nothing is clear and clean cut, decisions are messy and full of nuance. Tools change fast, we change fast, and before we know it, we are in a spot we didn’t expect.

Listen, I don’t claim to be a Databricks or Delta Lake super savant. I’ve been using the stuff for years, but what does that mean? Nothing. So have a lot of other people.

Sure, smarter people than I could tweak and turn knobs and dials and throw salt over their left shoulder at midnight. I work and operate in the real world. I don’t have …

infinite amounts of time to force an issue

simplicity and reduction of complexity is key

things happen in a larger context

I do things I don’t like in the name of “business”

If you think this polemic against Databricks and Delta Lake is uncalled for, take a hike. I’ve written a plethora of wonderful content lauding many of their features, and will continue to do so when warranted.

I have preached, and will continue to preach, the truth about the everyday average engineer down in the trenches mucking about in the mud. I will voice concerns and annoying things when I find them.

Many times it’s those at the bottom of the ladder (I am one of them, I assure you) who pay the price for the inattention and lack of care from those at the top of the digital food chain.

These buggy nuances across versions of this and that, and mismatched frameworks not keeping up, tell a larger story. One that is not easily reversed and backed out of. The truth is, the future is probably not brighter, but filled with more pain in this regard.
