Data Cleansing for Dummies

yeah, that's what I said.

It’s been a while since I did any “back to the basics” posts. I imagine all you lovely readers sitting there with your giant brains picking apart everything I say. Who’s got any time for the simple stuff??

Recently, someone requested I talk(write) about Data Cleaning at a high level, for Data Engineers, Analysts, Scientists, and the like. It seems like a simple topic, but it’s one that's ignored chiefly on Data Teams.

Do you mean to tell me that, living in the Age of AI, SaaS products have reached the zenith of technological advancement, and “Data Quality” tooling is at your fingertips, requiring zero work or setup … and we still have data quality problems?

Of course. Surprised? I hope not.

This week’s newsletter is sponsored by networthtracker.io

Interested in FIRE? Want to kick your boss to the curb? networthtracker.io is a free website where you can simply and easily play with your financial numbers to divine your net worth and where it’s headed.

Back to our regularly scheduled programming.

Data Cleansing - starting from scratch.

Okay, so data quality, data cleaning, and data governance are all the same. Since before time began, it was decreed that all data should henceforth be dirty, confusing, and full of surprises.

Having done this “data thing” for over two decades, with many promises and grand statements from a plethora of talking heads and vendors, we find ourselves in the same place. Data is often considered dirty, and data quality is frequently overlooked and ignored as useless by the majority of Data Teams.

Sure, they might give it some lip service, but lip service is all it is.

I’m going to propose that there are two levels of Data Cleansing (call it Data Quality if you want).

Low Level (DDL, constraints, etc.)

High Level (SaaS tooling, alerts, etc.)

Most people mistakenly assume that to start with data cleansing, one must find the latest DQ SaaS or open-source tool and get to work. This is rarely the case; it is detrimental, ignores the basics, and leads to lackluster results.

“All good data cleansing and data quality work starts with a solid foundation built upon data types and constraints.” - me

So, where should you start?

Foundations of Data Cleansing and Quality

When starting with a new data set, there is likely a plan to ingest that data into a process that will assume or look for certain things within that data.

You must start with the basics.

review columns to settle on Data Types

understand NULLable columns vs not

understand column data (constraints)

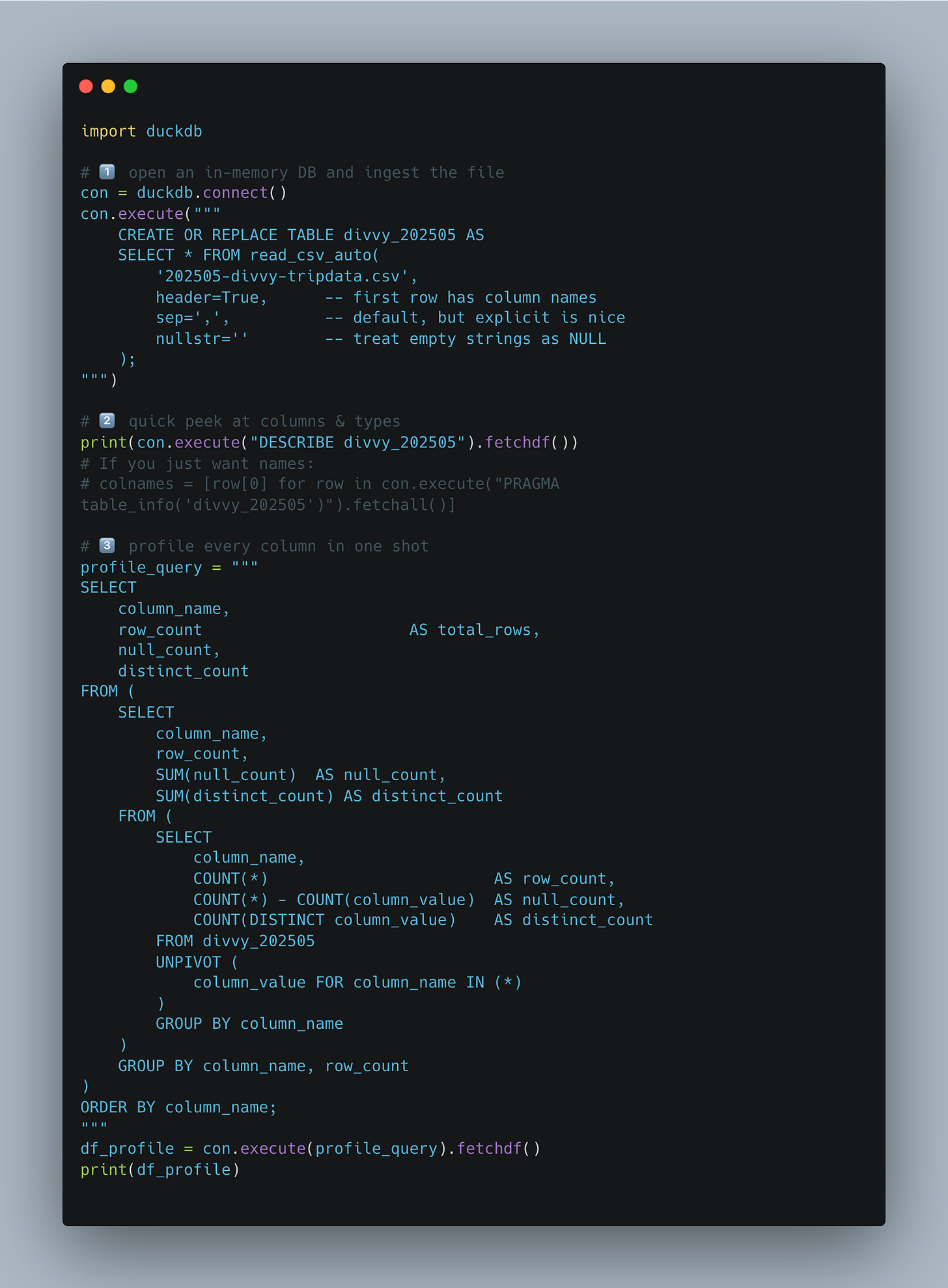

Let’s use the example of Divvy Bike Trip data, and walk through that. If you think this sort of idea of DDL, data types, and constraints is getting ahead of ourselves, but you can’t clean data you don't understand.

Spending time to understand data upfront will make data cleansing make more sense and go smoothly on the backend.

It’s incredible to me that about 1% of data workers when dealing with a new dataset, or working on “data cleansing” … will NOT take the following steps.

decide on DDL/Data Types

understand where constraints apply

understand where NULLs apply

Sounds too easy? Yeah, well, how are you supposed to “clean” datasets that you don’t understand WHAT the data is SUPPOSED to look like??

In our example case, we could say …

This is not rocket science; it's simply understanding the data before us and what we can infer from that data. To avoid having to do this work manually, consider using a tool like DuckDB for your data exploration.

I mean, you are smart folk, you get the picture.

So what's next?

You tell me. Of course, once you have done this sort of data exploration and understand what you are dealing with, the data cleaning and quality part becomes much more apparent, and the puzzle pieces are more likely to fall into place.

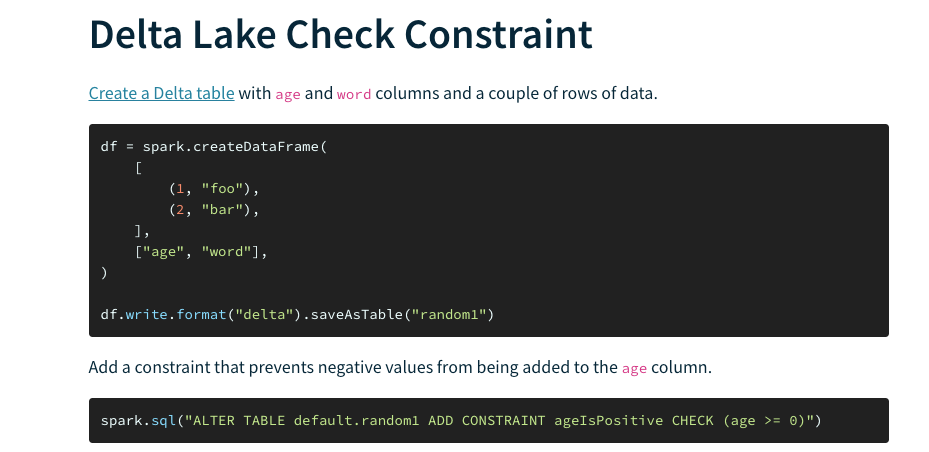

In the Lake House world we live in, using tools like Delta Lake or Iceberg, this sort of data cleaning via constraints and checks has tier 1 support.

The sky is the limit these days; there are plenty of open-source tools that can make data cleaning a fairly easy and fun process. DuckDB, Polars, etc, these tools make munging data straightforward.

What are other low-level data cleaning steps?

What else can we do besides data types, constraints, etc to wrestle the data into submission?

apply obvious and descriptive column headers

DESCRIPTION → product_description

CUR → currency

add descriptions and comments to code and data.

work with Product (or whoever) to understand data more deeply.

Of course, with these basics we are just scratching the surface of data cleaning and quality, the problem is that everyone wants to skip these steps and jump straight to the “fun” and “fancy.”

Fun and Fancy won’t work well if you feed it slop.

Of course, there are always the plethora of data cleaning and quality tools available, some of which I’ve talked about.

More fancier stuff.

Once you have mastered the basics and are ready to go on to glory, the data world is your oyster, and there is no lack of tools like Soda, Great Expectations, DQX, and the like to spend the rest of your life playing with.

Eventually, you would want …

alerting

monitoring

observability

auto-healing

quarantine

etc.

But, to be honest, most people haven’t done the simple stuff we talked about, and you can buy and use whatever fancy tool you want, and your life will be stuck if you don’t use data types, constraints, and checks to control your data cleaning and quality.

Books could, and have, been written on each of those bullet points above. I suggest a more methodical and nuanced approach that begins with tight schemas and proceeds slowly from there.

Best of luck, you bunch of dirty data hobbits.

Thank you Daniel, highly appreciated! Additionally suggest business stakeholder engagement to focus on the problem to be solved, building the model, and how it will be deployed. A shared conceptual understanding on the problem, model, how it will be deployed, and iteration approach before any cycles spent on data cleansing.