I’ve been mulling this one over for a few days now, and I’m not sure what to think. One minute, I think it’s a great idea; the next minute, I turn up my nose at what seems to be another pointless addition to the morass of the Lake House world we live in.

One can only assume, at this point, that the truth lies somewhere in between.

MotherDuck, DuckDB … whatever, same thing, has doubled down and submitted yet another Lake House format to be added to the already crowded field.

The only thing I can do at this point is to introduce you to this new Lake House tool and provide some realistic commentary on this latest development. I will give it to you like your grandmother’s comments at Christmas, raw and unadulterated.

As I lie here on my back porch watching the rain pitter-patter on the windows, the thought of dealing with and writing about yet another Lake House format has me dreary and about as excited as the wet and soggy maple tree staring back at me.

But, for you, my dear reader, I will do my best.

How did we get here, and what is DuckLake?

The simple fact is that we live in a Lake House world now, and there is no going back. It’s here to stay, at least for the next decade or two.

Like it or not, your CTO has followed the lemmings down the long and winding Lake House + AI road, and there is no turning back. This has all happened fairly quickly and relatively recently, causing a new tech data gold rush of sorts.

I’m sure some bumbling acolytes will disagree with me, but as of today, you have three serious options when it comes to choosing a Lake House Storage format.

“In the context of data platforms, a Lakehouse (or data lakehouse) is a modern data architecture that combines elements of data lakes and data warehouses to support both structured and unstructured data, enabling analytics, machine learning, and BI on a single platform.” - ChatGPT

When you read a simple summary of what a Lake House is, it makes sense why EVERYONE would want to have their dirty little fingers in that pie.

You, as a vendor, want to be involved in the compute and STORAGE of the Lake House architectures as they are being built.

It’s not rocket science, folks. The Lake House is where everyone is heading; there are still a lot of migrations and back-and-forths happening.

The question that DuckLake brings to the forefront is …

Is the race to be part of the major Lake House formats finished, or is there room for more movement?

So, DuckLake from DuckDB, can it be a serious contender and addition to the already crowded Lake House storage formats?

This is the question everyone is asking. How much is hype, how much is reality?

Diving into DuckLake from DuckDB

Ok, before we dive into DuckLake itself, I still have a few comments that I think will add some color to this tool, why it is here, and what will happen, or not happen with it.

I’m just going to list them for brevity.

- The “catalog” has become the Achilles’ heel of many Lake House implementations (especially Iceberg).
- DuckDB doesn’t play nice with Apache Iceberg (no write support).
- Delta Lake is the clear implementation winner right now.
  - (support across a broad spectrum of tools for read/write)
- AWS + GCP + Cloudflare + everyone is adding major support for Iceberg.
  - but it’s very fragmented
  - they are semi-open-source solutions

My guess is that DuckDB looked at the Lake House format landscape and saw the obvious fragmentation, frustrations with Iceberg catalogs, and air juggling between formats.

I mean, take for example the number of different articles I have written on Lake House storage formats and catalogs. It shows you the current chaos.

Dang, we keep getting sidetracked. We will get there eventually.

Oh yeah, DuckLake.

So what IS DuckLake?

“DuckLake is an open Lakehouse format that is built on SQL and Parquet. DuckLake stores metadata in a catalog database, and stores data in Parquet files.” - DuckDB

So it really is just another Lake House format. How is it like most other formats? Well, it’s based on Parquet files stored wherever you like.

The big differentiator?

The catalog … aka the metadata, can be stored in a SQL database. Why is this a thing? Because everyone … like everyone … probably runs a Postgres database somewhere. DuckLake’s ability to use a SQL database as a catalog makes it approachable and easy to use.

Unlike with Iceberg and Delta Lake, you don’t need to get an EC2 instance up and running and then install and manage your own catalog service/API.

This, my friends, is what it comes down to. DuckLake is just another Lake House format with Parquet storage on the backend. Nothing new there. It’s the catalog and metadata management that DuckDB recognized as a huge frustration for Lake House users.

“The basic design of DuckLake is to move all metadata structures into a SQL database, both for catalog and table data.”
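To make that idea concrete, here is a toy sketch of “metadata in a SQL database, data in Parquet files.” It uses Python’s built-in `sqlite3` so it runs anywhere. The `snapshots` and `data_files` tables, the `commit_files` and `files_at` helpers, and the `s3://bucket/...` paths are all made up for illustration; this is not DuckLake’s actual catalog schema, just the general shape of the approach.

```python
import sqlite3

# Hypothetical, simplified catalog schema (NOT DuckLake's real tables).
con = sqlite3.connect(":memory:")
con.executescript("""
CREATE TABLE snapshots (
    snapshot_id  INTEGER PRIMARY KEY,
    committed_at TEXT
);
CREATE TABLE data_files (
    file_path  TEXT,     -- where the Parquet file lives
    table_name TEXT,
    row_count  INTEGER,
    added_in   INTEGER REFERENCES snapshots(snapshot_id)
);
""")


def commit_files(con, table_name, files):
    """Register new Parquet files under a new snapshot, in one transaction."""
    with con:  # commits on success, rolls back on error
        cur = con.execute(
            "INSERT INTO snapshots (committed_at) VALUES (datetime('now'))"
        )
        snapshot_id = cur.lastrowid
        con.executemany(
            "INSERT INTO data_files VALUES (?, ?, ?, ?)",
            [(path, table_name, rows, snapshot_id) for path, rows in files],
        )
    return snapshot_id


def files_at(con, table_name, snapshot_id):
    """List the files that make up a table as of a given snapshot."""
    rows = con.execute(
        "SELECT file_path FROM data_files "
        "WHERE table_name = ? AND added_in <= ? ORDER BY file_path",
        (table_name, snapshot_id),
    )
    return [r[0] for r in rows]


first = commit_files(con, "trips", [("s3://bucket/trips/part-0.parquet", 1000)])
second = commit_files(con, "trips", [("s3://bucket/trips/part-1.parquet", 500)])

print(files_at(con, "trips", first))   # only the first file
print(files_at(con, "trips", second))  # both files
```

The point of the sketch: a commit is just an ordinary database transaction, and “time travel” is just a `WHERE` clause on the snapshot id. No separate catalog service required.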

Databases are the bread and butter of DuckDB, are they not? I mean, on their own website they call themselves a “database system.”

Wait … there’s more.

So, is this truly an incredible and groundbreaking new way to approach the Lake House and catalog woes from DuckDB? Everyone makes it sound like that, but it isn’t totally true.

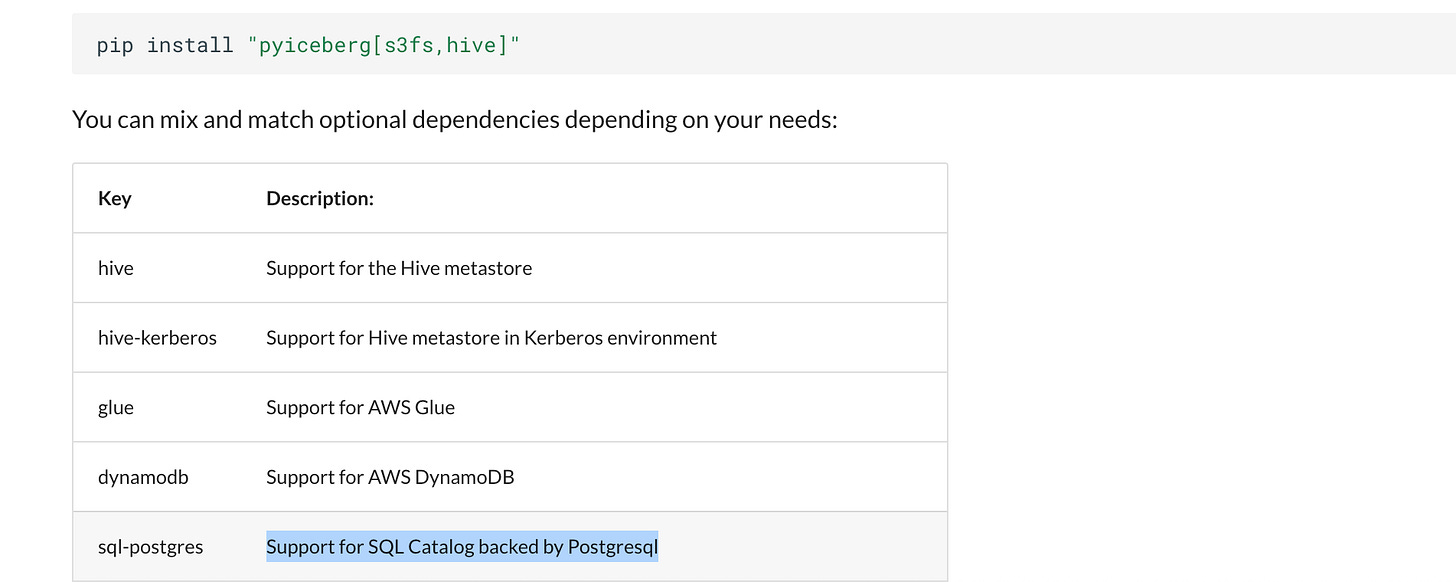

Apache Iceberg appears to have long had support for a SQL catalog backend (in Postgres, etc.).

Even the pyiceberg website lists Postgres support for a SQL Catalog backend.
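For the curious, a rough sketch of what pointing PyIceberg at a Postgres-backed SQL catalog might look like. `SqlCatalog` takes a catalog name plus `uri` and `warehouse` properties; the `postgres_uri` and `open_sql_catalog` helpers, the credentials, and the warehouse path are placeholders I made up, and this assumes `pyiceberg` is installed with its SQL extras and a Postgres driver.

```python
def postgres_uri(user, password, host, port, dbname):
    """Build a SQLAlchemy-style connection URI for a Postgres database."""
    return f"postgresql+psycopg2://{user}:{password}@{host}:{port}/{dbname}"


def open_sql_catalog(uri, warehouse):
    """Open an Iceberg catalog whose metadata pointers live in a SQL database."""
    # Imported lazily so the helper above works without pyiceberg installed.
    from pyiceberg.catalog.sql import SqlCatalog

    return SqlCatalog("default", uri=uri, warehouse=warehouse)


# Example usage (placeholder values, needs a running Postgres):
# catalog = open_sql_catalog(
#     postgres_uri("user", "password", "localhost", 5432, "iceberg_catalog"),
#     "file:///tmp/warehouse",
# )
# catalog.create_namespace("demo")
```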

So why isn’t everyone doing that?

This is a good question. Why is everyone tripping over themselves about DuckLake and a SQL-based catalog when Apache Iceberg already has that option?

The answer is easy and obvious: not every problem is an engineering problem; some problems are human.

Apache Iceberg has been beaten down and abused by various SaaS vendors for their own use, it has always lagged behind formats like Delta Lake development-wise, and it has had a fragmented and divided community.

DuckDB has rightly recognized those problems and decided to offer an alternative solution that WILL BE much easier to use and more developer friendly. Simple as that.

Downsides to DuckLake?

It’s a little early to poke a stick into DuckLake and pontificate about all its shortcomings … but here is a bullet list of some of my off-the-cuff reactions from when they first released it.

- By naming it “DuckLake,” they cut their potential users in half.
  - You think people using Databricks and Snowflake will seriously use something called “DuckLake” in conjunction with non-DuckDB compute?
    - Unlikely.
- If DuckDB treats and develops DuckLake without the broader community in mind, it will never move past DuckDB users.
  - (for example, there is no Apache Iceberg write support in DuckDB today) … (now we know why)

Don’t get me wrong, I think DuckLake is a genius idea from DuckDB and is the well-deserved stick in the eye of Apache Iceberg for sucking so much when it comes to catalogs.

Should we play with the code?

Not sure if we will do or see anything amazing here, and all this code is available on GitHub in a repo for you to play with, but here goes nothing.

Let’s try to use the setup with DuckDB + DuckLake + Postgres, using Docker and see what happens.

Now for a `docker-compose` setup that will use Postgres as the backend for our DuckLake.

Easy enough eh … now we can write some DuckDB and Python code to install and load DuckLake as well as create a table, and then query the BACKEND of the tables created in Postgres by DuckLake to see what is happening.

A lot of code, but simple enough. Honestly, using DuckLake is as easy as these few lines right here. In typical DuckDB fashion, it could not be simpler.
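For reference, a minimal sketch of what installing, attaching, and creating a table could look like from Python. This is not the exact code from my repo. It assumes the `duckdb` Python package, network access to install the `ducklake` and `postgres` extensions, and a reachable Postgres database; the connection string, the `my_ducklake` alias, the `demo` table, and the helper names are placeholders.

```python
def ducklake_attach_sql(pg_conn, alias, data_path):
    """Build the ATTACH statement for a Postgres-backed DuckLake catalog."""
    return (
        f"ATTACH 'ducklake:postgres:{pg_conn}' AS {alias} "
        f"(DATA_PATH '{data_path}');"
    )


def run_demo(pg_conn="dbname=ducklake_catalog host=localhost",
             data_path="data_files/"):
    """Create a small table in DuckLake and read it back."""
    # Imported lazily so the helper above works without duckdb installed.
    import duckdb

    con = duckdb.connect()
    for ext in ("ducklake", "postgres"):
        con.execute(f"INSTALL {ext};")
        con.execute(f"LOAD {ext};")

    # Metadata goes to Postgres, Parquet files go under data_path.
    con.execute(ducklake_attach_sql(pg_conn, "my_ducklake", data_path))
    con.execute("USE my_ducklake;")

    con.execute("CREATE TABLE IF NOT EXISTS demo (id INTEGER, name VARCHAR);")
    con.execute("INSERT INTO demo VALUES (1, 'duck'), (2, 'lake');")
    return con.execute("SELECT * FROM demo ORDER BY id;").fetchall()


# Example usage (needs Postgres running, e.g. via docker-compose):
# print(run_demo())
```

After a run like this, the Postgres database should contain the catalog tables DuckLake created, which you can poke at with any SQL client.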

Works like a charm. If you clone my GitHub repo and run `docker-compose up --build`, you will get the following printouts, abbreviated here.

Not going to lie, pretty slick and easy to use, a perfect tool for those going all in on the DuckDB bandwagon. They did a better job than Apache Iceberg, like it or not.

If you want people to adopt your Lake House solutions they have to be …

- approachable
- easy to use
- extensible

DuckLake checks all those boxes. They even went out of their way to produce a nice website and documentation.

I don’t know … is DuckLake earth shattering? Once you get past all the hype, which can be hard, no, not really, but yes a little.

In classic DuckDB fashion (taking SQL and making it easy to use and powerful), they have clearly done the same thing in the Lake House format world.

They took a concept that already was here, a Lake House, including a SQL Catalog, and just did it better, with a cleaner and more approachable implementation.

In the end, that is what wins the hearts and minds of Data Engineering users at large. Give us something that works, is powerful, and is clean and simple, and we will use it.

Nice! Power to the duck 🦆

What’s really interesting and emergent in this space is the close association via the guy who did crunchy DB with postgres and duckDB. It ain’t Singlestore but it’s something. Depending on where the crunchy guy goes with Snowflake some more integration seems inevitable. How long now before Postgres writes iceberg with a simple extension? Especially now that Julian’s boring data is out there.

Interesting times.