Lakebase from Databricks.

OLTP meets OLAP

Well, the future is here; it has swallowed us, chewed us up, and spit our poor bones out into a steaming pile of bits and bytes (probably in Rust). I’m sure that every author of code, at some point in their troubled existence, has turned their eyes to the sky, wondering when all the new fancy things will stop raining down upon our heads.

When you’ve finally learned to master one new tool or concept, another one sneaks up behind you and gives you the old whack-a-do.

Just when I thought the Lake House was the latest and greatest concept and technology, one that wouldn’t be outdone anytime soon, along comes yet another new arrival on deck vying for our mental capacity.

Drumroll … enter … Lakebase (a Postgres OLTP engine).

Leave it to Databricks to throw a wrench into the smoothly running cogs of data teams around the world, and throw it they did, at the 2025 Data + AI Summit.

I can almost hear the clickety-clack of the callused fingers of a thousand starry-eyed CTOs and middle managers whose bulging heads are about to explode at the thought of adopting Lakebase and deleting a few decades’ worth of technical detritus.

Lakebase … the background, a decade in the making.

Ok, I’m going to do my best to cut through the marketing hype and buzz of excitement rattling around the inter-webs, gather the musings of the masses from LinkedIn, read the docs, and serve you up a steaming pile of truth … because … you know … the truth will set you free.

Thanks to Delta for sponsoring this newsletter! I personally use Delta Lake on a daily basis, and I believe this technology represents the future of Data Engineering. Content like this would not be possible without their support.

Ok, so what is Lakebase … and why are some people angry about it while others call it manna from heaven?

“Databricks Lakebase is a fully-managed PostgreSQL OLTP engine that lives inside the Databricks Data Intelligence Platform. You provision it as a database instance (a new compute type) and get Postgres semantics—row-level transactions, indexes, JDBC/psql access—while the storage and scaling are handled for you by Databricks.” - docs
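Since the docs promise Postgres semantics with JDBC/psql access, connecting should look like connecting to any other Postgres server. Here is a minimal sketch, assuming you already have the instance’s hostname, a database name, a user, and a password or token (every value below is a hypothetical placeholder). It builds a libpq keyword/value connection string, the format psql and psycopg accept, plus the equivalent PostgreSQL JDBC URL. Defaulting to `sslmode=require` is my assumption about a sensible setting, not a documented Lakebase requirement.

```python
from urllib.parse import quote


def libpq_conninfo(**params: str) -> str:
    """Build a libpq keyword/value connection string (as used by psql and psycopg).

    Per libpq's quoting rules, values that are empty or contain spaces are wrapped
    in single quotes, and embedded single quotes and backslashes are escaped with
    a backslash.
    """
    parts = []
    for key, value in params.items():
        value = str(value)
        if value == "" or any(c in value for c in " '\\"):
            # Escape backslashes first so the quote escapes are not doubled.
            escaped = value.replace("\\", "\\\\").replace("'", "\\'")
            value = f"'{escaped}'"
        parts.append(f"{key}={value}")
    return " ".join(parts)


def jdbc_url(host: str, dbname: str, port: int = 5432, sslmode: str = "require") -> str:
    """Build a PostgreSQL JDBC URL; user and password are passed to the driver separately."""
    return f"jdbc:postgresql://{host}:{port}/{quote(dbname)}?sslmode={sslmode}"


if __name__ == "__main__":
    # Hypothetical placeholder values; substitute your own instance details.
    host = "my-instance.example.com"
    print(libpq_conninfo(host=host, port="5432", dbname="app_db",
                         user="me@example.com", password="s3cr et", sslmode="require"))
    print(jdbc_url(host, "app_db"))
```

The point is that nothing Lakebase-specific is needed on the client side if the wire protocol really is plain Postgres; the same string works with `psql "<conninfo>"` or `psycopg.connect(conninfo)`.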

Key capabilities

Postgres-compatible: standard drivers, psql, and an extensions roadmap.

Managed change-data-capture into Delta Lake so OLTP data stays in sync with BI models.

Unified governance via Unity Catalog roles & privileges.

Lakehouse hooks: can feed Feature Engineering & Serving, SQL Warehouses, Databricks Apps, and RAG pipelines out of the same rows. docs.databricks.com

Elastic scale: separate storage and compute lets you grow read/write throughput without dumping and re-importing data.
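The managed change-data-capture bullet is what keeps OLTP rows and their analytical copies in step. Conceptually, CDC replays an ordered log of row-level changes against a keyed copy of a table. The sketch below illustrates only that general idea in plain Python; the event shape (`lsn`, `op`, `key`, `row`) and the `apply_changes` helper are hypothetical and say nothing about how Databricks actually implements it.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class ChangeEvent:
    """One row-level change captured from the OLTP side (hypothetical shape)."""
    lsn: int                    # position in the source change log; defines apply order
    op: str                     # "insert", "update", or "delete"
    key: Any                    # primary-key value of the affected row
    row: Optional[dict] = None  # full row image for inserts and updates


def apply_changes(target: dict, events: list[ChangeEvent], last_lsn: int = 0) -> int:
    """Apply events in log order to `target` (primary key -> row).

    Events at or below `last_lsn` were applied in an earlier batch and are skipped,
    so replaying a batch is harmless. Returns the new high-water mark.
    """
    for ev in sorted(events, key=lambda e: e.lsn):
        if ev.lsn <= last_lsn:
            continue  # already applied
        if ev.op in ("insert", "update"):
            target[ev.key] = dict(ev.row or {})
        elif ev.op == "delete":
            target.pop(ev.key, None)
        else:
            raise ValueError(f"unknown op: {ev.op!r}")
        last_lsn = ev.lsn
    return last_lsn


if __name__ == "__main__":
    replica = {}  # stand-in for the analytical copy: primary key -> row
    log = [
        ChangeEvent(1, "insert", 1, {"id": 1, "status": "new"}),
        ChangeEvent(2, "update", 1, {"id": 1, "status": "paid"}),
        ChangeEvent(3, "insert", 2, {"id": 2, "status": "new"}),
        ChangeEvent(4, "delete", 2),
    ]
    high_water = apply_changes(replica, log)
    # Replaying the same batch is a no-op thanks to the high-water mark.
    apply_changes(replica, log, high_water)
    print(high_water, replica)
```

Tracking a high-water mark is what lets a sync process crash and resume without double-applying changes; a managed service hides that bookkeeping from you.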

Ok, so now we know what Lakebase is … managed, scalable … Postgres inside the Databricks environment. Calm down, I can see the wheels turning in your head.

Is this a huge deal? Yes and no. It depends on who’s asking and what your use case is, like anything else.

To understand why Lakebase has everyone freaking out, we’ll do a brief history lesson. Heck, it’s not so much a history lesson as a “this is how things are today.”

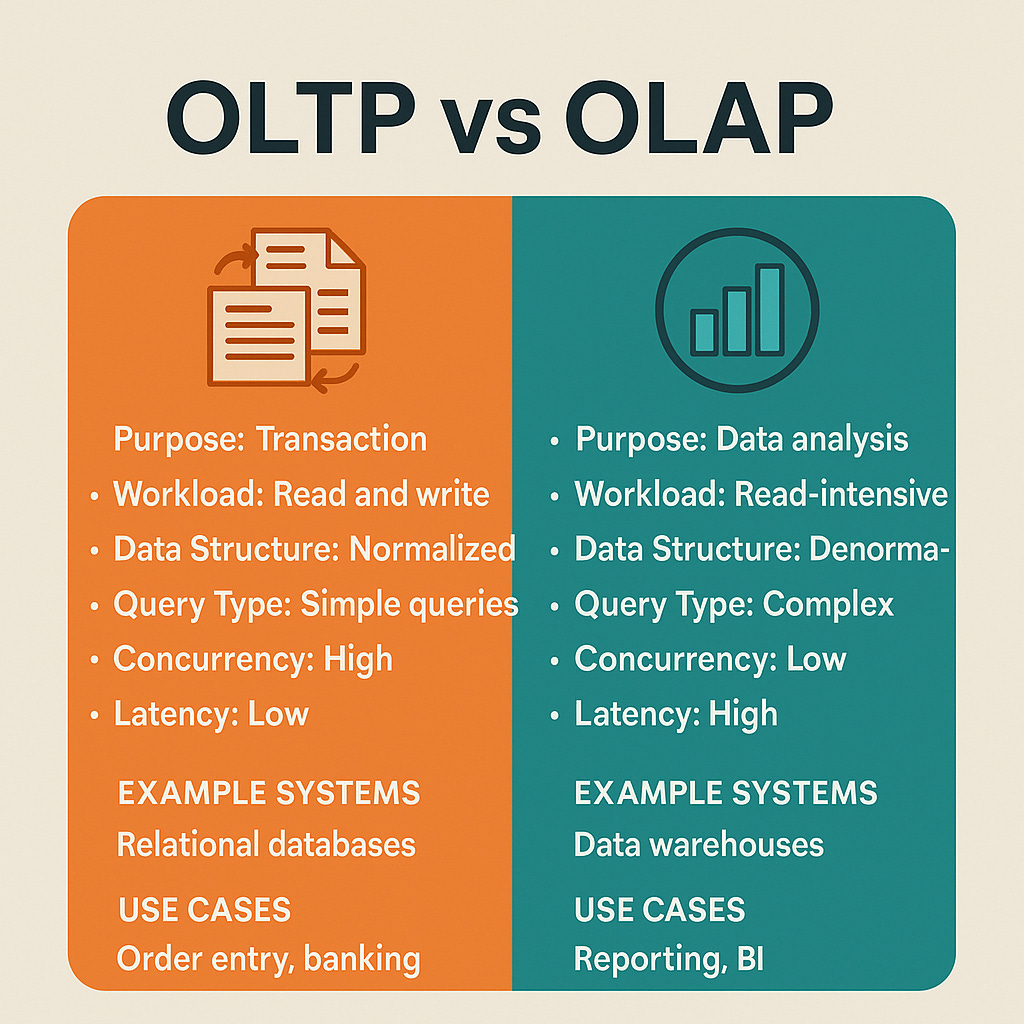

OLAP vs OLTP - always at odds.

The story begins with the advent of relational databases (RDBMS) and the classic Data Warehouse; the roots of our current Lake House live in concepts like Kimball’s The Data Warehouse Toolkit.

Circa 2007, the world was running full bore on SQL Server, Oracle, Postgres, and MySQL. OLTP databases were where all transactional processing took place, and we used Data Modeling to shift into an OLAP mindset suited to serving up analytics and large-scale analysis.

Only a select few managed to run Hive, Pig, and other HDFS-based systems, which created more problems than they solved.

But it simply wasn’t enough, from either a storage or a compute viewpoint.

Enter the Data Lake, where giant dumps of Parquet, Avro, CSV, and JSON files sat in S3 while bespoke Spark jobs, BigQuery, and Athena ground their teeth on TBs of messy data.

The Lake House saved us from that hell.

What has never really changed over the years? The line between OLAP and OLTP has always been clear, regardless of what new sauce some vendor added to their product.

This stark contrast and separation in architecture and environment between, say … an RDS Postgres instance … or an Aurora cluster, for that matter … and the Lake House platform … has been obvious and painful.

We always kept low-latency OLTP workloads inside SQL Server, Postgres, etc. They were, and are, the beating heart of many applications and companies.

Lakebase is attempting to narrow that gap.

Want OLTP? Want OLAP? They want to give you both, on the same platform … aka, in an architectural drawing, these boxes sit right next to each other, so all sorts of technical barriers break down … and innovation increases.

While the acolytes fight, we ponder.

This, my fickle friend, is why people are excited about Lakebase. Yes, managed Postgres instances and clusters have been available for some time.

But honestly, that was never the problem in the first place.

At least that is the idea, and an idea is probably all it is at this point in the marketing cycle.

By now, I assume you can imagine WHY the excitement is building inside the Databricks community. Keyword … Databricks community.

If you are a company heavily invested in Databricks, eating up the AI excitement like popcorn, and you also have a few Postgres RDS instances running inside AWS to support your web app … of course you are going to sit up and take notice.

Imagine the simplicity of running both your OLTP and OLAP workloads on a single platform, all governed by Unity Catalog, where the data from each box can almost kiss. It’s the ultimate complexity reduction.

The real question yet to be answered is … can it actually perform and deliver in real life? I don’t know.

Technical Overview.

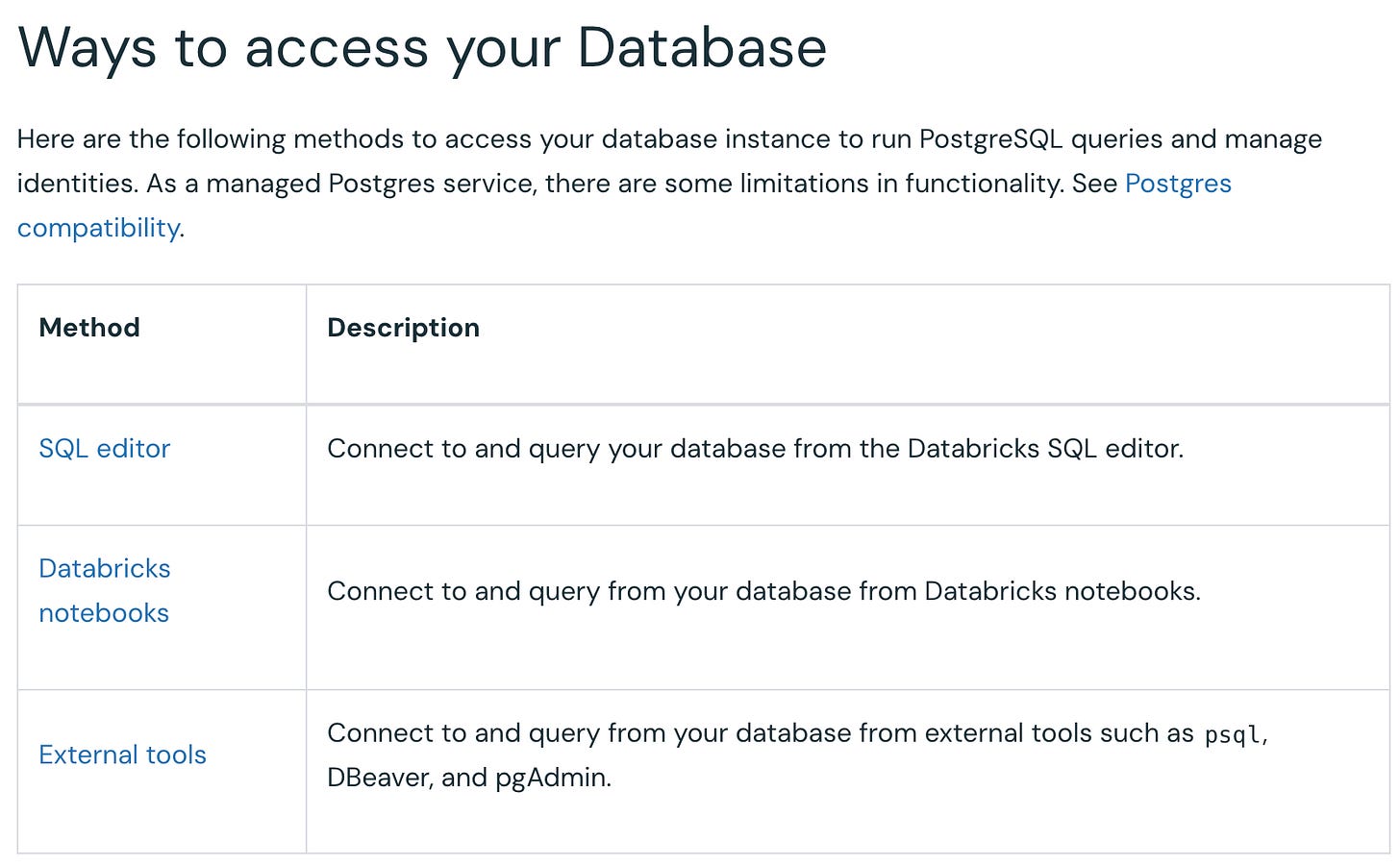

I don’t intend this to be a technical introduction or how-to guide for Lakebase (you can be sure that is coming soon enough from yours truly), but we can skim the docs to get some initial insight.

It’s clear that Databricks, as usual, has integrated this well into their platform. Right now a “database instance” is just a new type of compute you can select.

They make it easy to spend money, don’t they?

Heck, if you want read-only nodes and a high-availability (HA) setup, they’ve got that too!

The fine print tells us we get a 2TB max size per instance … which isn’t as big as you think; a lot of folks would go over this max. Also, the 1,000 concurrent connection limit will turn some people off, but not the average user.
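Whether those limits bite is a back-of-the-envelope calculation you can do before migrating anything. A minimal sketch, using the 2TB and 1,000-connection figures quoted above (check the current docs, since limits change); the number of connections held back for admin sessions, notebooks, and sync jobs, and the growth rate, are hypothetical inputs you would supply yourself.

```python
def max_pool_size(replicas: int, max_connections: int = 1000, reserved: int = 50) -> int:
    """Largest per-replica connection-pool size that keeps the whole app fleet
    under the instance's connection cap, after holding `reserved` connections
    back for admin sessions, notebooks, and background jobs."""
    if replicas <= 0:
        raise ValueError("replicas must be positive")
    available = max_connections - reserved
    if available <= 0:
        raise ValueError("reserved connections exceed the cap")
    return available // replicas


def months_until_full(current_tb: float, growth_tb_per_month: float, cap_tb: float = 2.0) -> float:
    """Months of headroom before the database reaches the size cap,
    assuming steady linear growth."""
    if current_tb >= cap_tb:
        return 0.0
    if growth_tb_per_month <= 0:
        return float("inf")
    return (cap_tb - current_tb) / growth_tb_per_month


if __name__ == "__main__":
    # Hypothetical fleet: 12 web-app replicas, 50 connections held back.
    print("pool size per replica:", max_pool_size(replicas=12))
    # Hypothetical database: 1.2 TB today, growing 0.05 TB per month.
    print("months of headroom:", round(months_until_full(1.2, 0.05), 1))
```

If the per-replica pool comes out uncomfortably small, a connection pooler in front of the database is the usual fix; if the headroom is measured in months rather than years, the 2TB cap deserves a hard look.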

What is very attractive to many folks is the advent of Synced Tables: the ability for a UC table to magically be available as a table in your Lakebase Postgres database. Very nice (it simplifies a lot of existing workflows).
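Conceptually, a one-way sync from a lakehouse table into Postgres boils down to a keyed upsert: every source row is inserted into the target, or overwrites the existing row with the same primary key. The sketch below shows only that idea and is not how Databricks implements Synced Tables. It uses Python’s built-in `sqlite3` as an offline stand-in for Postgres (both accept `INSERT ... ON CONFLICT (...) DO UPDATE` with `excluded.<column>`, though SQLite needs version 3.24 or newer), and the `customers` table and the `source_rows` list standing in for a Unity Catalog table are hypothetical.

```python
import sqlite3


def upsert_rows(conn: sqlite3.Connection, rows: list[dict]) -> None:
    """Insert or overwrite rows in `customers`, keyed by `id` (one-way: the source wins)."""
    conn.executemany(
        """
        INSERT INTO customers (id, name, tier)
        VALUES (:id, :name, :tier)
        ON CONFLICT (id) DO UPDATE SET
            name = excluded.name,
            tier = excluded.tier
        """,
        rows,
    )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, tier TEXT)")
    # Hypothetical snapshot of a lakehouse table (e.g. collected from a Delta table).
    source_rows = [
        {"id": 1, "name": "Ada", "tier": "gold"},
        {"id": 2, "name": "Grace", "tier": "silver"},
    ]
    upsert_rows(conn, source_rows)
    # A later snapshot changes one row; the sync overwrites it in place.
    upsert_rows(conn, [{"id": 2, "name": "Grace", "tier": "gold"}])
    print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
```

A full snapshot sync would also have to remove target rows that disappeared from the source; that step is omitted here to keep the upsert idea front and center.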

Heck, you know all those SQL junkies will pee their pants when they find out you can use a flipping Notebook to query that Lakebase OLTP database. Imagine the havoc they will cause. They always find a way.

I mean, just look at the Python example Databricks gives.
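In the same spirit (this is my own minimal sketch, not Databricks’ example), querying from a notebook can be ordinary DB-API code, assuming the instance accepts standard Postgres client connections as the docs describe and that the `psycopg` driver is installed on the cluster. The hypothetical `query_as_dicts` helper relies only on DB-API 2.0 cursor methods, so it is exercised offline with `sqlite3`; against Lakebase you would open the connection with `psycopg.connect(conninfo)` and use `%s` placeholders instead of `?`. The `orders` table is hypothetical.

```python
import sqlite3
from typing import Any, Sequence


def query_as_dicts(conn: Any, sql: str, params: Sequence[Any] = ()) -> list[dict]:
    """Run a parameterized query on any DB-API 2.0 connection and return rows as dicts.

    Placeholders must match the driver's paramstyle: `?` for sqlite3, `%s` for psycopg.
    """
    cur = conn.cursor()
    try:
        cur.execute(sql, params)
        columns = [d[0] for d in cur.description]  # first field of each entry is the column name
        return [dict(zip(columns, row)) for row in cur.fetchall()]
    finally:
        cur.close()


if __name__ == "__main__":
    # Offline stand-in for a Lakebase connection.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, "new"), (2, "paid")])
    print(query_as_dicts(conn, "SELECT id, status FROM orders WHERE status = ?", ("paid",)))
```

Passing values as parameters rather than formatting them into the SQL string is what keeps those notebook-happy SQL junkies from injecting havoc of their own.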

Amazing days we live in.

I, for one, am looking forward to using Lakebase in the future. Anyone on the Databricks platform who also uses an RDS instance, for example, would be crazy not to.

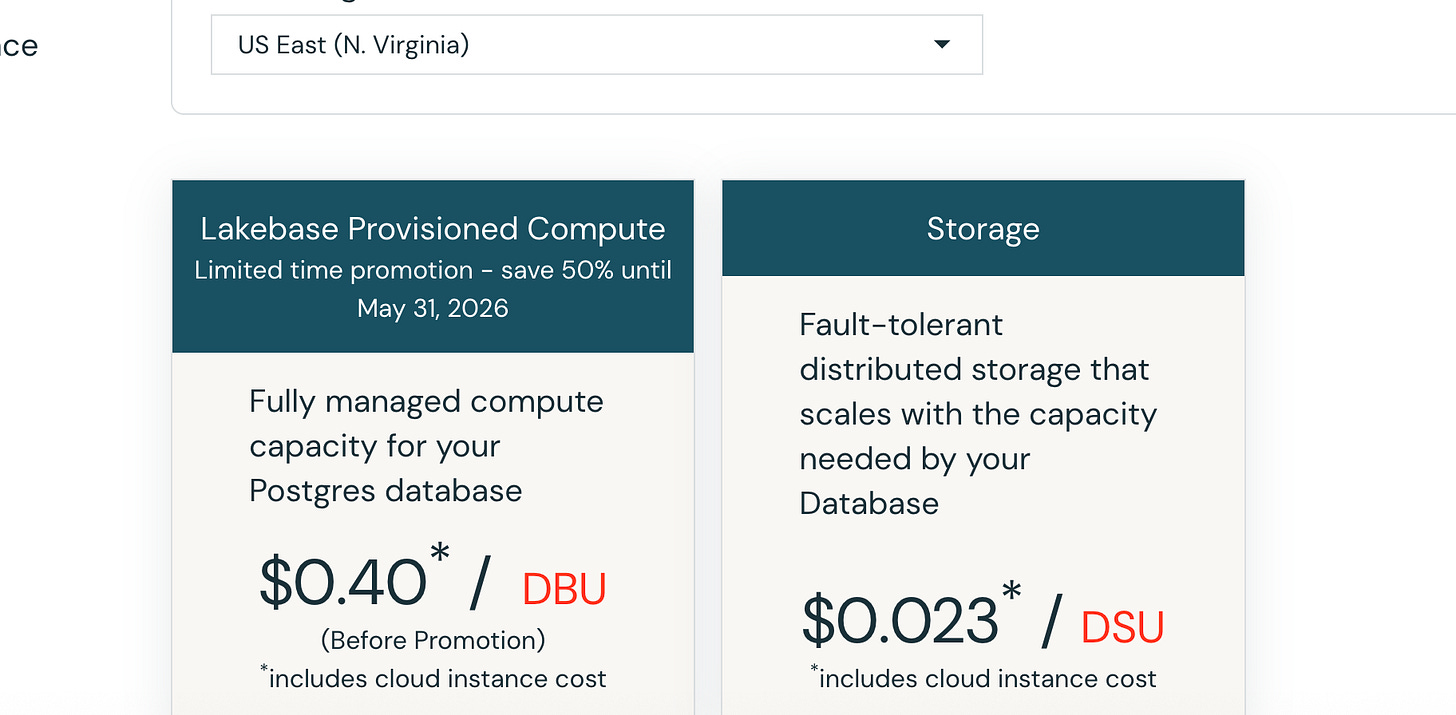

You know the part you aren’t going to like? The price. Ouch. Ain’t no free lunch around these here parts.

But before all you hobbits go screaming off into the sunset complaining about the price … have a little nuance, will you?

Yeah, the cloud and any SaaS are expensive.

Guess what? A lot of CTOs and Principal Engineers understand that complexity comes at an extremely high cost.

A person must look at the reduction in code, developer hours, breakages, maintenance, and the roadblocks that data silos cause. Can you put a price tag on innovation? What do you GAIN from the expensive toolset that allows you to innovate and do business better than others?

That’s the question that everyone will have to ask themselves. Is Lakebase worth it?

How does it compare to the foreign catalog feature?

It’s kind of strange, because MSSQL has long had OLAP columnstore on top of OLTP (and OLTP indexing on columnstore), and Postgres can do something similar through extensions. It seems a lot of what people are trying to achieve in Delta Lake, etc. is the same thing, just starting from OLAP/Parquet.

I am not entirely sure I’m reading this correctly, but I imagine it is a one-way sync from your lakehouse to Postgres: a managed Postgres instance with serverless compute and … management-lite reverse ETL? Is that basically it?