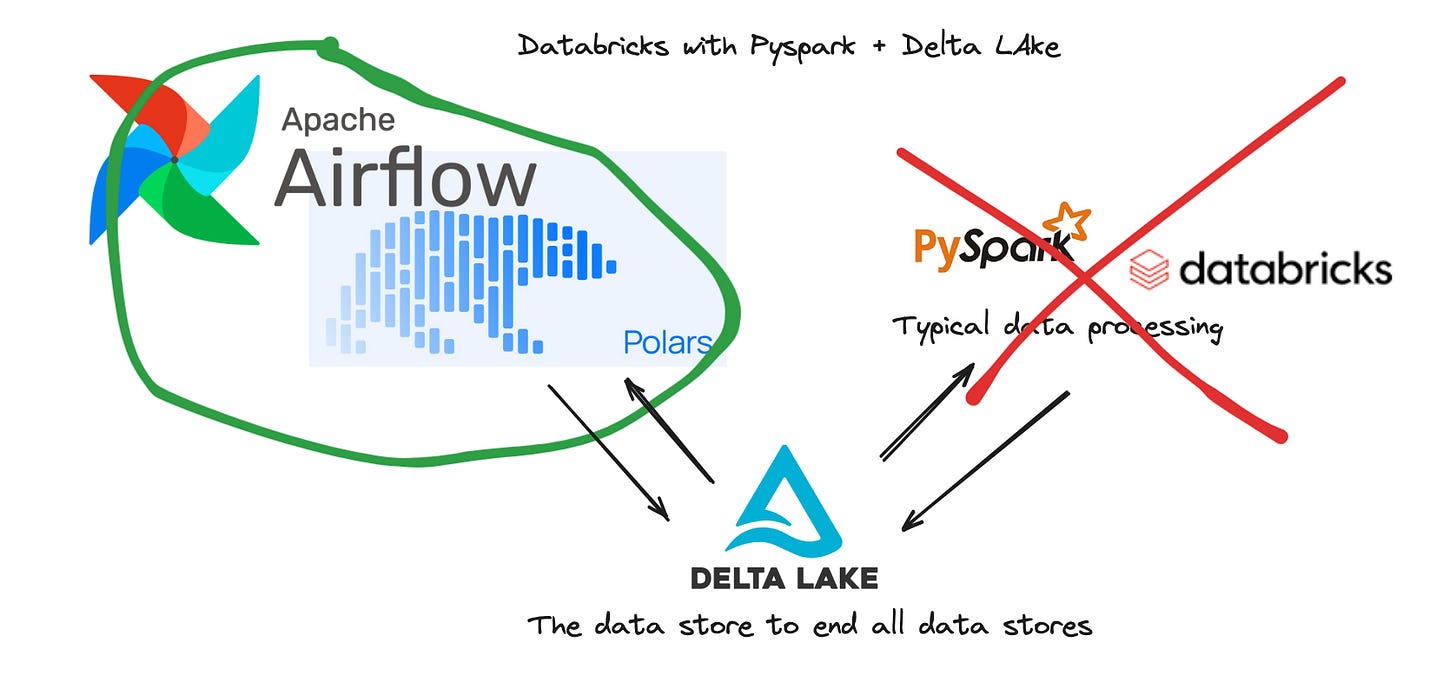

Replace Databricks Spark Jobs (using Delta) with Polars

Saving Money and Doing Things

I’m usually not an advocate of being a dreamer, talking big talk but having it all be a pipe dream. I’m a dream killer most of the time. Maybe I’ve been around too long … probably.

Every tool in its place and every place needs a tool. It’s not that I’m against any certain tool, although you may reasonably think I hate DuckDB (untrue), I’m typically just against folk overstating a tool and what it can do.

But, I am a fan of tools that can actually do a thing … like for real … in Production. It’s fine to play around with something on your laptop, talk about how it can do this or that. We should all have a special place in our hearts for a tool like Polars that is capable of actually running Production workloads.

Thanks to Delta for sponsoring this newsletter! I personally use Delta Lake on a daily basis, and I believe this technology represents the future of Data Engineering. Check out their website below.

Polars and low-hanging fruit.

Today we are going to talk about eating the juicy low-hanging fruit that’s right there in front of us. Just go ahead, reach for it, take a big ole’ bite.

Instead of saying something ridiculous like … “I’m going to replace ALL my Databricks Spark jobs with Polars or DuckDB ..” which is just silly unless you work somewhere you should have been using Pandas all along … we do something obvious. We pick the low-hanging fruit.

When new tools come out… we all have a few questions floating around, I mean there is a difference between hype and reality.

Is it reliable enough for Production?

Is anyone else using it for Production?

What is it really capable of?

How do I ease my way in without breaking everything?

Can I save money or reduce complexity?

I tend to avoid the hype most of the time. I enjoy trying new stuff out, but when it comes to Production level Data Engineering … I take a slower approach and prioritize …

stability and reliability

a large community of users

simple and approachable tech

So can we actually introduce Polars into a Databricks + Airflow platform … in real life … and why would we do such a thing?

Replacing Spark Jobs with Polars (using Delta Lake).

Ok, so let’s talk Delta Lake and Databricks (PySpark) for a minute. There’s no doubt that Databricks + Delta Lake is the new 500lb gorilla in the room, everyone is either using this stack or trying to.

One thing about going all in on any Data Stack is that you always have “things” on the periphery, the edges, that you force into your tool simply for simplicity's sake. It makes sense.

Making the tradeoff to have ALL your processing done in a homogeneous manner provides a lot of benefits.

Ease of debugging and troubleshooting

Ease of development

Ease of onboarding

Less error-prone.

For example, it’s not uncommon, like in my case, to have tables with hundreds of TBs of data that fit like a glove into the Delta Lake + PySpark pipeline ideology. But, it’s also common to have a few pipelines and data needs that don’t really require PySpark … or Delta Lake for that matter … but we use them anyway because …

Historically PySpark on Databricks is the path of least resistance … aka the only real option.

But that has changed with tools like Polars.

Really, the change was simple. We had some “small-ish” Delta Lake tables inside Unity Catalog. A few parts of our different dataflows required pulling data from those Delta Tables and then doing some action on those individual records, like deploying other downstream PySpark Pipelines, etc.

Running this sort of logic with PySpark via Databricks was actually antithetical to the workflow, but it was really the only option historically.

This is where Polars comes in. Since we were already using Airflow as an orchestrator and dependency tool for our entire Data Platform, it made perfect sense to save some money and move some of these PySpark pipelines to Polars running on our Airflow workers.

Ease of setup.

Unlike Spark, setting up and using Polars is a Data Engineer’s dream come true. Simply a `pip install` and you’re on the way to glory.

Also, if you’re already using PySpark and SparkSQL on Databricks … it’s a simple transition to Polars … which provides a SQLContext option as well if you’re one of those buggers who can’t work with Dataframe APIs.

How simple? Here is how we read our Delta Lake tables in Unity Catalog with Polars on an Airflow worker.

I mean … that’s it, my friend. What? You wanted some black magic, some super cool and complicated code that could replace PySpark Databricks Jobs?

Sorry to disappoint you.

Literally with that single method you now have a Dataframe of results at hand from which you could execute SQL or any other transformation you’re doing today with PySpark or SparkSQL.

It has some nice benefits.

It’s cheaper to run Polars on pre-existing compute like Airflow.

For small-ish tables like these, it’s faster than running PySpark in 99% of the cases.

It requires no major infrastructure changes.

It’s a flexible option that is so similar to PySpark that you aren’t adding tech debt or complexity.

I’m assuming most Data Teams have something, somewhere, that is able to run straight Python code that isn’t inside Databricks. At least I would hope so.

With Polars, you gain flexibility, and the ability to do data processing on Delta Lake (yes even Unity Catalog Delta Lake) tables outside of Spark/Databricks. Believe it or not, this will save you money … obviously, and actually introduce flexibility into your Data Stack, expanding how you design your Data Pipelines.

The Rest Of The Story

For those of you skeptical that you can use Polars to replace some Databricks + Delta Lake pipelines that easily, I assure you it is that simple. I’m sorry, not sorry, there isn’t more to it.

Of course, there is a little weirdness here and there. You have to know or be able to retrieve the physical location of Unity Catalog Delta Tables.
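One possible way to retrieve that location, sketched here as an assumption rather than how we necessarily do it, is to ask the Unity Catalog API through the Databricks Python SDK. The helper name `uc_table_location` and the table name in the comment are hypothetical; the client is passed in, so the function only assumes an object that behaves like `databricks.sdk.WorkspaceClient`.

```python
from typing import Any


def uc_table_location(client: Any, full_name: str) -> str:
    """Return the storage location of a Unity Catalog table.

    client is assumed to behave like databricks.sdk.WorkspaceClient,
    i.e. client.tables.get(full_name=...) returns an object with a
    storage_location attribute.
    """
    info = client.tables.get(full_name=full_name)
    if not info.storage_location:
        raise ValueError(f"No storage location found for {full_name}")
    return info.storage_location


# Hypothetical usage (table name is a placeholder):
# from databricks.sdk import WorkspaceClient
# location = uc_table_location(WorkspaceClient(), "main.my_schema.my_table")
```

Running `DESCRIBE DETAIL` on the table inside Databricks and reading the `location` column is another way to get the same information.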

For example, here is an actual location of a UC table in s3 …

In my case, I’m actually iterating on those Delta Lake … now Polars dataframe records … to kick off numerous downstream Databricks Jobs via Airflow DAGs.

More or less as follows …

I mean the sky is really the limit. I would suspect some serious money could be saved in certain use cases that don’t require massive amounts of data (RAM) … in my experience, only a handful of the folks using something like Databricks actually need it for “Big Data.”

But don’t get me wrong, Databricks is THEE platform to beat all Platforms and I would pick it hands down every time, no matter the data size. The user, development experience, and features they offer are simply too good to pass up.

If you are not using Polars in production, I ask you, why not? It’s a production-ready tool capable of replacing Spark jobs on Databricks, as I know from first-hand experience … what else are you waiting for??